This week, Qualcomm finally announced it had entered into an Amended Definitive Agreement for the NXP acquisition, which was approved by the Qualcomm and NXP Boards of Directors. Of course, that caused an unexpected reaction from Broadcom, but that's not my point. What I couldn't help recalling is that these companies are already amalgams of multiple great companies and enterprise cultures, the most recent examples being the Freescale merger with NXP, Qualcomm's integration of CSR, and Broadcom's acquisition of Brocade.

Inevitably, in all those mergers and acquisitions, technology platforms and R&D efforts are affected by corporate restructuring, consolidation, and overlaps, with consequences that are not always noticeable but can have a profound impact, including on our tiny (in perspective) audio industry. Given the degree of innovation we have seen from NXP and the number of ongoing audio-related projects and released platforms it has contributed - many of which are also the focus of important recent efforts by Qualcomm - we cannot help but wonder what the impact of the acquisition will be.

In this conversation, Martyn Humphries explains what led NXP to develop this family of processors and the vast implications the platform might have, not only for the obvious applications in smart speakers, media hubs, soundbars, and all the smart home devices that the company displayed in its massive exhibit in Las Vegas, NV, but also for how audio companies in general can leverage this "convergence of immersive sensory experiences fueled by voice, video and audio demands" to create a new generation of solutions.

In those demonstrations, there were numerous examples of already available products that combine audio, video, and machine learning to create connected products that can be controlled via voice command, with the i.MX 8M processor providing all the required processing and computing power. "We've been in the general-purpose processor business for well over 10 years and are probably one of the most trusted suppliers of applications processors. We are very much an enabler, a company that wants to be an enabler," Humphries stated. "When we apply that to what we've done with voice, where we started our relationship with Amazon Alexa and with Google very early on, we have reference designs with both companies. Many speakers that are launched today use NXP i.MX processor solutions. On the smart speaker market, you've got the Insignia Best Buy brand, the new Sonos One, Onkyo, Panasonic, and a number of different companies that are developing - and we'll see many more in 2018."

"What we did with the iMX processors family was to expand it with the new range of i.MX.8M (M for Media), because when we talked to our customers over the last several years, we realized that first of all voice UI was coming, and the need for smart audio was coming. We knew that music streaming would continue to get stronger and we also knew that the people wanted OTT video. Our customers wanted to bring all that together. Think of a smart STB where we have beautiful 4K HDR video into a beautiful flat panel display, and we want to be able to connect soundbars with 5.1 or 7.1 audio and we want to be able to have a voice system. Some companies told us that they wanted a solution to have it all together. The 8M is able to do that.

"We also wanted to make sure that we could have smart control at home, so we could send voice commands to devices in the home, like a thermostat. With those devices, from some sort of central point, we can now make things happen in the home environment. That's what we've been doing with the 8M family.

"We have a whole range of products that are coming out, and we are introducing versions that do voice only, do voice and music, or do all three. They are all software compatible and based on simple design kits."

audioXpress: In this complex environment for voice-enabled designs, with multiple voice engines, multiple wake-word solutions, and microphone arrays, how does NXP deal with that integration?

Martyn Humphries: Like everybody else, we have to partner with many other vendors, like the microphone guys. Our reference designs are based on the algorithms from Google, Alexa, HomeKit, etc. The ecosystem is all-powerful, because everything is built on top of the ecosystems and we have to be compliant within those ecosystems.

In the 8M family we have four Cortex-A53 processors, and they're configured so that we can independently run Alexa, or Google, or HomeKit on the same chip, at the same time, each using a different processor. Then the user has a chance to differentiate and say if their products will work with any of those ecosystems.

AX: Like Sonos does...

MH: Exactly. The other very important thing we did was to bring down the cost of voice and bring it more into the microcontroller world. Right now, of course, there are limited things you can do with a microcontroller and voice, just because of the computational power that's required. What we've done with the 8M is that we've put an M4 microcontroller in the SoC, working with the A53s, so that we can push the wake word to the microcontroller. What happens is that we can then have situations where we don't need to go out to the cloud, and we can locally wait for the wake word, with the MCU in a very low-power state. The A cores are off, and we can wake up the system because the microcontroller has been waiting for that event.

That's different because our competitors haven't yet integrated the microcontroller technology into the product, and it's easy for NXP because we are a microcontroller company and a microprocessor company. We've had both technologies for decades.

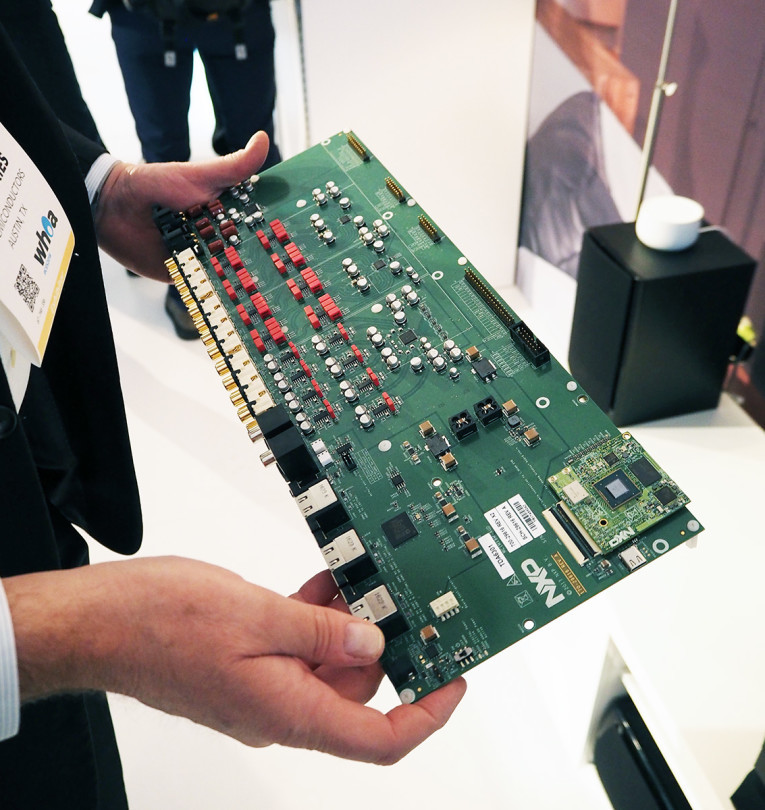

At our booth at CES we showed the 8M running applications where we replaced the DSPs to create an audio amplifier with Dolby Atmos. We don't use any DSPs. And we have all the HDMIs, all the professional I/Os on our audio framework, using a single chip.

AX: What about creating smart acoustic compensation and improving audio quality in "standard" speakers? Can manufacturers leverage these new NXP platforms?

MH: Absolutely, there have been companies exploring applications that go beyond the ecosystems and that want their own proprietary control. Our platform allows those companies to bring their own algorithms, and we can run them in our environment. In the 8M family, each of these A53 cores can run its own separate system. They can have their own localized control of the device. At the same time, they can also run an ecosystem search engine, like Google, Amazon shopping, or whatever. We are working with companies that are doing that.

MH: I cannot give out any names for products that have not been announced, but we are working with companies beyond consumer, in the industrial and the enterprise spaces. We think in the enterprise we will find many applications for this technology as well.

NXP supports all the radio technologies, including Bluetooth and ZigBee, as well as sensor technology and all standards-based technologies. We've brought all those technologies together to operate in an industrial or enterprise environment with voice control.

There are going to be conference-room and videoconferencing-type applications coming.

I think it's exciting to have this technology going into the workplace or into industry. The consumer area is creating the buzz right now, and sales of speakers continue to climb significantly, but there are so many more things, like dealing with noise. We have the technology to actually improve the environments and the workplaces that we live in, based on sound. Those are the sorts of things that we see coming.

AX: In terms of voice applications, we are building the platforms quite fast. But do you think this will evolve to meet consumers' expectations in this domain?

MH: The big companies like Amazon, Google, and Apple have been able to build up teams and gather algorithms. They are gathering all the data that's required for learning. That's not something that can be done by one small company.

I am quite certain that the platforms are going to evolve and learn. The speed at which they learn is the question.

What you will also see from NXP is more work on the front end. We want to be able to localize conditioning of the voice assistant, so that we can have everything from the wake word happening without going to the cloud, but also - and that's very important for the 8M family - to make sure that when there is no Internet connection I can still control things in my home and use my local media sources. All the home things have to be controlled locally, without the cloud, and at the same time, local control needs to be super low-latency. It should be almost instantaneous.

AX: Independently of the merger talks, are you already cooperating with Qualcomm, for instance in wireless technologies?

MH: Interestingly, before there was any news of a merger with Qualcomm, we were using Wi-Fi from Broadcom, which was Cypress, and we use Wi-Fi from Qualcomm. We have reference designs that have Wi-Fi from any of those companies. We are able to support Qualcomm's Wi-Fi solutions in our platforms, because historically we always have. Obviously, once the merger is finalized, there will be closer cooperation. Today, we are working with all the latest and greatest technologies.

What I can say is that what we're doing is complementary to what Qualcomm does. We think that together we will have a very strong portfolio. It will be one of the world's strongest portfolios.

There won't be as much overlap as you might think. NXP brought lots of innovation, and Qualcomm is also an innovator. From what we know, we are very complementary and, as we come together, we'll get the best of both. There's no glaring disconnect.