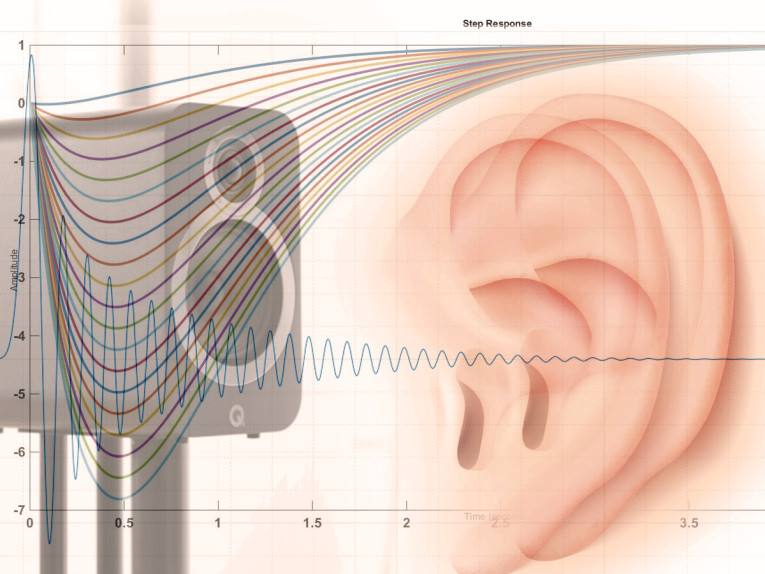

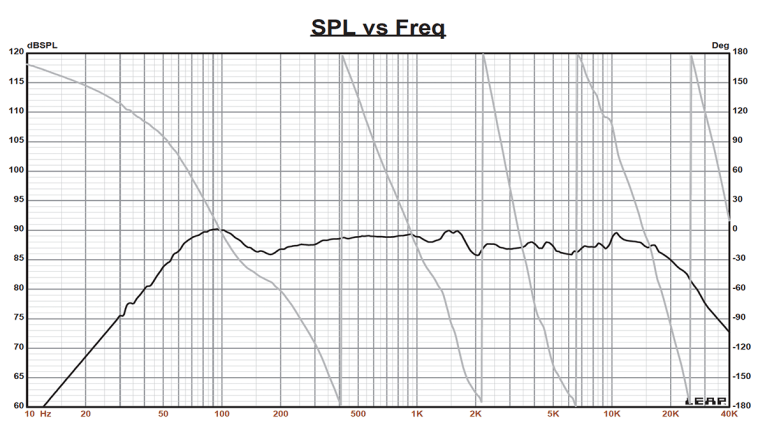

Once this much DSP power was available, it became possible to produce a loudspeaker anechoic response that had a zero phase profile, linear phase if you will, via the use of FIR and IIR filters. For a quick graphic illustration, Figure 1 shows a three-way speaker with a normal phase curve. Figure 2 shows the same speaker with a typical zero phase curve (red line) superimposed on Figure 1. I should also point out that there is a great freeware program available titled “rePhase” (see Figure 3) that makes rendering a loudspeaker phase response to zero as easy as equalizing the amplitude of a speaker, and indeed the GUI looks like a 1/3 octave phase equalizer (available at http://rephase.org).
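Why can an FIR filter deliver linear phase at all? Here is a minimal Python sketch of the property, assuming nothing about how rePhase or any commercial monitor designs its filters. Any FIR filter whose taps are symmetric has a phase response that is exactly linear in frequency, which amounts to a pure delay of (N − 1)/2 samples. The tap values below are arbitrary, hypothetical numbers chosen only to show the property.

```python
import cmath
import math

def freq_response(taps, w):
    """Evaluate H(e^{jw}) = sum h[n] e^{-jwn} at normalized frequency w (rad/sample)."""
    return sum(h * cmath.exp(-1j * w * n) for n, h in enumerate(taps))

def is_linear_phase(taps, num_points=64, tol=1e-9):
    """Check that removing a pure delay of (N-1)/2 samples leaves a real-valued response.

    For a symmetric FIR, H(e^{jw}) = A(w) * e^{-jw(N-1)/2} with A(w) real, so
    multiplying by e^{+jw(N-1)/2} cancels all the phase except sign flips of A(w).
    """
    delay = (len(taps) - 1) / 2
    for k in range(1, num_points):
        w = math.pi * k / num_points
        residual = freq_response(taps, w) * cmath.exp(1j * w * delay)
        if abs(residual.imag) > tol:
            return False
    return True

# Hypothetical 7-tap filters: one symmetric, one not.
symmetric = [0.05, -0.1, 0.3, 0.5, 0.3, -0.1, 0.05]
asymmetric = [0.5, 0.3, 0.1, 0.05, 0.0, 0.0, 0.0]
print(is_linear_phase(symmetric))   # True
print(is_linear_phase(asymmetric))  # False
```

The price of that linearity is latency: the symmetric filter delays everything by (N − 1)/2 samples, and longer filters (needed for low-frequency correction) mean more delay.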

As a result of this high-powered DSP technology, a number of studio monitor manufacturers claim superior subjective performance for their products due to the application of zero phase and accompanying impulse and group delay corrections. The subjective claims are generally tighter bass and a dramatically wider soundstage, two obviously positive aspects of loudspeaker performance.

However, at the outset I should point out that this phenomenon, if you want to call it that, only exists so far, at least from my research, in the pro sound studio monitor community, ostensibly because the high-end two-channel market is dominated by passive designs that make this kind of phase correction difficult to impossible. This was a bit of a shock to me, as my only acquaintance with zero phase techniques in pro sound had been in combining speaker arrays or in room equalization. I remember when Linear Phase speakers surfaced in the mid-1970s, when Technics introduced its SB series with the drivers physically time aligned and set back from the woofer baffle (see Photo 1).

Eventually, everyone seemed to figure out that this created as many problems as it solved, and the kangaroo baffle thing mostly disappeared from public view. There was a lot of confusion about phase, group delay, and acoustic center in those days, and I had not been aware of this being an issue again until the last year.

The premise that using FIR filters to zero out the phase of a loudspeaker improves its subjective performance seems inherently flawed, given all the research done over the years concluding that the human ear is not very sensitive to phase or group delay. Given this, I decided to get a few other opinions on this subject and sent emails to a number of individuals whose opinions I greatly respect, asking them what they thought of the concept of zero phase improving subjective performance in loudspeakers. In this email, I simply raised the subject without my conclusions and listed a number of websites of studio monitor companies touting zero phase profiles in their monitors. What follows are the responses of several of these practitioners (two held corporate positions that prevented public response and are not published here). The list included Dr. Floyd Toole, Dr. Wolfgang Klippel, Andrew Jones, and James Croft.

Dr. Floyd Toole

Because phase is half of a transfer function, engineers are attracted to getting both amplitude and phase correct (i.e., waveform fidelity). It turns out that, for perfectly logical reasons, humans are not responsive to phase (i.e., waveforms), whether they are Beethoven, step functions, square waves, etc. A lot of highly motivated researchers have investigated this. But every few years it reemerges as a “forgotten factor” in sound reproduction. Now, with DSP, it is happening again.

The fact that transducers are minimum phase devices within their operating frequency range makes getting it right easy. Make it flat and smooth on axis and keep it smooth off axis — make the Spinorama look good and it will sound good. QED. In contrast we are extremely sensitive to amplitude response fluctuations, especially those associated with resonances. But there also is an anomaly — we hear the spectral bump, not the temporal ringing. Recent research has indicated that this is true even at bass frequencies where our intuition would suggest otherwise.

Here is what I say about the topic in the third edition of my book, Sound Reproduction: The Acoustics and Psychoacoustics of Loudspeakers and Rooms.

“4.8 Phase and Polarity: Do We Hear Waveforms?

A very long time ago, as part of my self-education about the hearing system, I learned that the basic transduction process in the inner ear performed as a half-wave rectifier. This alone gives one reason to think that acoustic compressions will be heard as different from acoustic rarefactions. We should be sensitive to details in the acoustic waveform as it generates movement of the eardrum, and thus initiates the hearing process. Based on this simple logic, phase shifts and absolute polarity should be audible phenomena.

Naturally I did some tests, reversing polarities of loudspeakers, and introducing phase shift to distort musical waveforms — listening for big differences. They weren’t there. At least not in the music I was listening to, through the loudspeakers I was using, from the musical sources I employed. Maybe I was simply unable to hear these things. Yes, there were times when I thought I heard things, but they were subtle, and hard to repeat. Changing loudspeakers made huge differences. Changing recording companies or engineers made huge differences. But, the anticipated “dramatic” event of inverting polarity appeared to be missing, in spite of how appealing the idea of waveform integrity is from an engineering perspective. Are they audible or not, and if so, does it matter in real world listening?

4.8.1 The Audibility of Phase Shift and Group Delay

The combination of amplitude vs. frequency (frequency response) and phase vs. frequency (phase response) completely defines the linear (amplitude-independent) behavior of loudspeakers. The Fourier transform allows this information to be converted into the impulse response and, of course, the reverse can be done. So, there are two equivalent representations of the linear behavior of systems, one in the frequency domain (amplitude and phase) and the other in the time domain (impulse response).
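The equivalence of the two representations can be checked numerically. This Python sketch uses a naive discrete Fourier transform on a short, made-up impulse response (hypothetical values, not a measured loudspeaker). It splits the spectrum into amplitude and phase, rebuilds the impulse response from both, and then shows that discarding the phase (forcing zero phase) does not give back the same impulse response.

```python
import cmath
import math

def dft(x):
    """Discrete Fourier transform: time-domain samples -> complex spectrum."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(spectrum):
    """Inverse DFT: complex spectrum -> time-domain samples (real part kept)."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

# Hypothetical impulse response (illustrative values only).
impulse = [0.0, 1.0, 0.6, -0.2, 0.1, 0.0, 0.0, 0.0]
spectrum = dft(impulse)
magnitude = [abs(c) for c in spectrum]      # amplitude vs. frequency
phase = [cmath.phase(c) for c in spectrum]  # phase vs. frequency

# Amplitude plus phase fully determine the impulse response...
rebuilt = idft([cmath.rect(m, p) for m, p in zip(magnitude, phase)])
round_trip_error = max(abs(a - b) for a, b in zip(impulse, rebuilt))

# ...but amplitude alone (phase forced to zero) yields a different waveform.
zero_phase = idft([complex(m, 0.0) for m in magnitude])
waveform_change = max(abs(a - b) for a, b in zip(impulse, zero_phase))
print(round_trip_error < 1e-12, waveform_change > 0.1)  # True True
```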

An enormous amount of evidence indicates that listeners are attracted to linear (flat and smooth) amplitude vs. frequency characteristics; more on this later. Figure 7, as detailed in Toole (1986), and the excerpts shown in Figure 5.2 [see p. 114 of Sound Reproduction, 3rd Edition] indicate that listeners showed a clear preference for loudspeakers with smooth and flat frequency responses.

That same Figure 5.2 also shows phase responses for those same loudspeakers. It is difficult to see any reliable relationship to listener preference, except that those with the highest ratings had the smoothest curves, but linearity did not appear to be a factor. Listeners were attracted to loudspeakers with minimal evidence of resonances because resonances show themselves as bumps in frequency response curves and rapid up-down deviations in phase response curves. The most desirable frequency responses were approximations to horizontal straight lines. The corresponding phase responses had no special shape, other than the smoothness. This suggests that we like flat amplitude spectra, we don’t like resonances, but we seem to be insensitive to general phase shift, meaning that waveform fidelity is not a requirement.
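The link between a resonance, an amplitude bump, and a rapid up-down phase deviation can be sketched with a single peaking biquad standing in for the resonance. This Python example assumes the widely used RBJ “Audio EQ Cookbook” peaking-filter formulas and a hypothetical +6 dB, Q = 5 resonance at 1 kHz; it is not a model of any loudspeaker in Figure 5.2. The phase swings positive below the resonance, passes through zero at its center, and swings negative above it, while the amplitude shows the bump.

```python
import cmath
import math

def peaking_biquad(f0, q, gain_db, fs):
    """Peaking biquad coefficients per the RBJ Audio EQ Cookbook formulas.
    Returns (b, a), both normalized so that a[0] == 1."""
    a_lin = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * a_lin, -2 * math.cos(w0), 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * math.cos(w0), 1 - alpha / a_lin]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response(b, a, f, fs):
    """Complex frequency response of the biquad at frequency f (Hz)."""
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return num / den

fs = 48_000
b, a = peaking_biquad(f0=1_000, q=5, gain_db=6, fs=fs)  # hypothetical resonance
for f in (500, 900, 1_000, 1_100, 2_000):
    h = response(b, a, f, fs)
    print(f"{f:5d} Hz  {20 * math.log10(abs(h)):+6.2f} dB  "
          f"{math.degrees(cmath.phase(h)):+6.1f} deg")
```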

If one chooses to design a loudspeaker system having linear phase, there will be only a very limited range of positions in space over which it will apply. This constraint can be accommodated for the direct sound from a loudspeaker, but even a single reflection destroys the relationship.
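The claim that a single reflection destroys the linear-phase relationship can be checked with a small model: unit-gain direct sound plus one reflection. In this Python sketch the reflection's strength (−6 dB, gain 0.5) and delay (1 ms) are hypothetical. A pure delay would have the same group delay at every frequency; the direct-plus-reflection sum does not, and its group delay even goes negative near the comb-filter notches.

```python
import cmath
import math

def direct_plus_reflection(f, gain, delay_s):
    """Direct sound (unit gain, no delay) plus one reflection of relative
    amplitude `gain` arriving `delay_s` seconds later."""
    return 1 + gain * cmath.exp(-2j * math.pi * f * delay_s)

def group_delay(h, f, df=0.01):
    """Numerical group delay (s), -d(phase)/d(omega), by central difference.
    Taking the phase of the ratio avoids phase-wrapping problems."""
    dphi = cmath.phase(h(f + df) / h(f - df))
    return -dphi / (2 * math.pi * 2 * df)

g, tau = 0.5, 1e-3  # hypothetical reflection: -6 dB, 1 ms late
h = lambda f: direct_plus_reflection(f, g, tau)
for f in (1_000.0, 1_250.0, 1_500.0):
    print(f"{f:6.0f} Hz: group delay {group_delay(h, f) * 1e3:+.3f} ms")
```

For this model the group delay works out analytically to g·τ·(g + cos ωτ)/(1 + 2g·cos ωτ + g²), so it swings between g·τ/(1 + g) and −g·τ/(1 − g) instead of staying constant.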

Therefore, it seems that: (a) because of reflections in the recording environment, there is little possibility of phase integrity in the recorded signal; (b) there are challenges in designing loudspeakers that can deliver a signal with phase integrity over a large angular range; and (c) there is no hope of it reaching a listener in a normally reflective room. All is not lost, though, because two ears and a brain seem not to care.

Many investigators over many years attempted to determine whether phase shift mattered to sound quality (e.g., Hansen and Madsen, 1974; Lipshitz et al., 1982; Van Keulen, 1991; Greenfield and Hawksford, 1990). In every case, it has been shown that if it is audible, it is a subtle effect, most easily heard through headphones or in an anechoic chamber using carefully chosen or contrived signals. There is quite general agreement that with music reproduced through loudspeakers in normally reflective rooms, phase shift is substantially or completely inaudible.

When phase shift has been audible as a difference when switched in and out, it is not clear that listeners had a preference. Others examined the audibility of group delay, among them Blauert and Laws (1978), Deer, Bloom, and Preis (1985), Bilsen and Kievits (1989), Krauss (1990), Flanagan, Moore, and Stone (2005), and Møller et al. (2007), finding that the detection threshold is in the range of 1.6 to 2 ms, and higher in reflective spaces. These numbers are not exceeded by normal domestic and monitor loudspeakers.
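To put the 1.6 to 2 ms threshold next to a typical crossover, here is a Python sketch for an ideal fourth-order Linkwitz-Riley crossover. The low-pass and high-pass outputs of an ideal LR4 sum to a second-order all-pass with Q = 1/√2, whose group delay at DC works out to 2√2/ω0. The crossover frequencies are hypothetical, and driver roll-offs and acoustic center offsets are ignored, so this is an idealized lower-bound style estimate rather than a measurement.

```python
import math

def lr4_sum_group_delay(f, fc):
    """Group delay (s) of the summed output of an ideal 4th-order Linkwitz-Riley
    crossover at fc. The sum is a 2nd-order all-pass with Q = 1/sqrt(2), whose
    phase is -2 * arg(w0^2 - w^2 + j*w*w0/Q)."""
    w0, w, q = 2 * math.pi * fc, 2 * math.pi * f, 1 / math.sqrt(2)
    re, im = w0**2 - w**2, w * w0 / q
    d_re, d_im = -2 * w, w0 / q
    # d/dw of atan2(im, re), doubled because the all-pass phase is -2 * arg(den).
    return 2 * (re * d_im - im * d_re) / (re**2 + im**2)

THRESHOLD_S = 1.6e-3  # lower end of the 1.6 to 2 ms range cited above
for fc in (500.0, 2_000.0):  # hypothetical crossover frequencies
    peak = max(lr4_sum_group_delay(fc * k / 1000, fc) for k in range(4001))
    print(f"fc = {fc:6.0f} Hz: peak group delay {peak * 1e3:.3f} ms, "
          f"below threshold: {peak < THRESHOLD_S}")
```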

Lipshitz, Pocock, and Vanderkooy (1982) conclude: “All of the effects described can reasonably be classified as subtle. We are not, in our present state of knowledge, advocating that phase linear transducers are a requirement for high-quality sound reproduction.” Greenfield and Hawksford (1990) observe that phase effects in rooms are “very subtle effects indeed,” and seem mostly to be spatial rather than timbral. As to whether phase corrections are needed: without a phase-correct recording process, any listener opinions are matters of personal preference, not the recognition of “accurate” reproduction.

4.8.2 Phase Shift at Low Frequencies: A Special Case

In the recording and reproduction of bass frequencies, there is an accumulation of phase shift at low frequencies that arises whenever a high-pass filter characteristic is inserted into the signal path. It happens at the very first step, in the microphone, then in various electronic devices that are used to attenuate unwanted rumble in recording environments. More is added in the mixing process, in storage systems, and in playback devices that simply don’t respond to DC. All are in some way high-pass filtered. One of the most potent phase shifters is the analog tape recorder.

Finally, at the end of all this is the loudspeaker, which cannot respond to DC and must be limited in its downward frequency extension. I don’t know if anyone has added up all of the possible contributions, but it must be enormous. Obviously, what we hear at low frequencies is unrecognizably corrupted by phase shift. The question of the moment is, how much of this is contributed by the woofer/subwoofer, is it audible and, if so, can anything practical be done about it? Oh yes, and if so, can we hear it through a room?
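A rough way to “add up the contributions” is to sum the phase leads of every high-pass stage in the chain. In this Python sketch every corner frequency is a hypothetical, illustrative value, each upstream stage (including the tape machine) is crudely modeled as a first-order high-pass, and the loudspeaker is modeled as a second-order Butterworth high-pass at 40 Hz. Real equipment will differ; the point is only that the leads add up quickly as frequency falls.

```python
import math

def first_order_hp_phase_deg(f, fc):
    """Phase lead (degrees) of a first-order high-pass s/(s + wc) at frequency f."""
    return math.degrees(math.atan2(fc, f))

def second_order_hp_phase_deg(f, fc, q=1 / math.sqrt(2)):
    """Phase lead (degrees) of a second-order high-pass s^2/(s^2 + s*w0/q + w0^2)."""
    w, w0 = 2 * math.pi * f, 2 * math.pi * fc
    return math.degrees(math.pi - math.atan2(w * w0 / q, w0**2 - w**2))

# Hypothetical chain: these corner frequencies are illustrative, not measured.
FIRST_ORDER_STAGES_HZ = {"microphone": 10.0, "console rumble filter": 20.0,
                         "tape machine": 15.0, "playback electronics": 5.0}
WOOFER_FC_HZ = 40.0  # loudspeaker modeled as a 2nd-order Butterworth high-pass

def accumulated_phase_deg(f):
    """Total phase lead (degrees) of the whole hypothetical chain at frequency f."""
    total = sum(first_order_hp_phase_deg(f, fc) for fc in FIRST_ORDER_STAGES_HZ.values())
    return total + second_order_hp_phase_deg(f, WOOFER_FC_HZ)

for f in (20.0, 40.0, 80.0, 160.0):
    print(f"{f:5.0f} Hz: accumulated phase lead ~ {accumulated_phase_deg(f):.0f} degrees")
```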

Fincham (1985) reported that the contribution of the loudspeaker alone could be heard with specially recorded music and a contrived signal, but that it was “quite subtle.” The author heard this demonstration and can concur. Craven and Gerzon (1992) stated that the phase distortion caused by the high-pass response is audible, even if the cut-off frequency is reduced to 5 Hz. They say it causes the bass to lack “tightness” and become “woolly.” Phase equalization of the bass, they say, subjectively extends the effective bass response by the order of half an octave. Howard (2006) discusses this work, and the abandoned product that was to come from it. There was disagreement about how audible the effect was. Howard describes some work of his own: measurements and a casual listening test. With a custom recording of a bass guitar, having minimal inherent phase shift, he felt that there was a useful difference when the loudspeaker phase shift was compensated for. None of these exercises reported controlled, double-blind listening tests, which would have delivered a statistical perspective on what might or might not be audible, and whether a preference for one condition or the other was indicated.

The upshot of all of this is that, even when the program material might allow for an effect to be heard, there are differences of opinion. It all assumes that the program material is pristine, which it patently is not, nor is it likely to be in the foreseeable future. It also assumes that the listening room is a neutral factor, which, as Chapter 8 in my book explains, it certainly is not. However, if it can be arranged that these other factors can be brought under control, the technology exists to solve the residual loudspeaker issue.”

Dr. Wolfgang Klippel

The auditory filters separate the signals within one critical bandwidth (approximately 1/3 octave at higher frequencies), and the subsequent envelope detection is sensitive to any phase shift between the components. This results in a change of the perceived roughness and fluctuation. For the same reason, amplitude modulation (a bass signal f1 < 100 Hz modulating a high-frequency signal f2 > 300 Hz) generates much larger differences than phase modulation (aka Doppler). There are a lot of experiments on this (e.g., Zwicker). Thus, the critical point is the variation of the group delay within one critical bandwidth (usually 1 to 2 ms).
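Klippel's criterion, the spread of group delay within one critical band, can be evaluated with a short Python sketch. It assumes the Zwicker and Terhardt (1980) approximation for critical bandwidth, centers the band arithmetically on the frequency of interest (a simplification), and uses a hypothetical first-order all-pass at 1 kHz as the phase-shifting element under test.

```python
import math

def critical_bandwidth_hz(f):
    """Critical bandwidth (Hz), Zwicker and Terhardt (1980) approximation."""
    return 25 + 75 * (1 + 1.4 * (f / 1000) ** 2) ** 0.69

def allpass1_group_delay(f, fc):
    """Group delay (s) of a first-order all-pass (a - s)/(a + s), a = 2*pi*fc."""
    a, w = 2 * math.pi * fc, 2 * math.pi * f
    return 2 * a / (a**2 + w**2)

def group_delay_spread_in_band(gd, f, points=101):
    """Max minus min of the group-delay function `gd` over one critical band
    centered (arithmetically) on f."""
    half = critical_bandwidth_hz(f) / 2
    lo = f - half
    values = [gd(lo + 2 * half * k / (points - 1)) for k in range(points)]
    return max(values) - min(values)

# Hypothetical example: a first-order all-pass centered at 1 kHz.
spread = group_delay_spread_in_band(lambda f: allpass1_group_delay(f, 1_000.0), 1_000.0)
print(f"critical band at 1 kHz: {critical_bandwidth_hz(1_000.0):.0f} Hz")
print(f"group-delay spread within it: {spread * 1e3:.3f} ms")
```

In this example the spread is a small fraction of a millisecond, well below the 1 to 2 ms Klippel cites.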

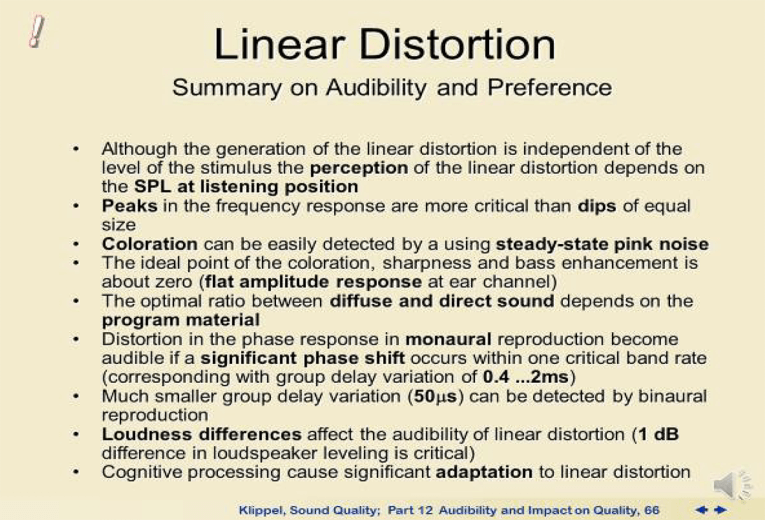

However, the perceived changes caused by phase are small and have a low impact on sound quality. The amplitude effects caused by minimum-phase properties are dominant. However, if you have a multichannel system (stereo and more), a phase difference of 50 ms will change the sound image (directivity). Figure 4 shows a summary from my lecture about sound quality.

Andrew Jones

The question is framed around the concept of phase linearity with the aid of DSP, but it’s useful to step back and look first at the attempts at phase linearity in passive speakers. Early attempts were based around the idea of first-order crossovers, erroneously forgetting the band limitations of the drivers themselves. Later work understood that the overall response had to be first order, which meant that the drivers had to have extraordinary bandwidth in order that the overall responses could add correctly without inter-unit phase errors. This approach was combined with acoustic center matching using either sloped baffles or stepped baffles. Further work resulted in higher-order asymmetric crossovers, or delay enhanced crossovers, but these were difficult to implement passively.

One intriguing approach was pioneered by Bang & Olufsen (B&O), the “filler driver” concept. This was basically a conventional two-way speaker with a filler driver that corrected the phase error. Ingenious but just as flawed as the other approaches. Why flawed? Because all implementations of phase linear speakers other than those using concentric drivers achieve their phase linearity on only one axis. Every other angle, both horizontal and vertical, must introduce added differential delay and so eliminate phase coherency.
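Why phase linearity holds on only one axis follows from simple geometry. In this Python sketch the tweeter-to-woofer spacing (0.15 m), crossover frequency (2 kHz), and speed of sound (343 m/s, roughly room-temperature air) are assumptions for illustration. In the far field, moving off the axis on which the drivers are aligned changes their path lengths by spacing × sin(angle), which shows up as a differential delay and a phase error at the crossover.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly 20 degrees C air

def differential_delay_s(spacing_m, angle_deg):
    """Far-field arrival-time difference between two drivers spaced `spacing_m`
    apart, for a listener `angle_deg` off the axis on which they are aligned."""
    return spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND

def phase_error_deg(delay_s, f):
    """Phase difference (degrees) that a given delay produces at frequency f."""
    return 360.0 * f * delay_s

# Hypothetical two-way: tweeter 0.15 m above the woofer, 2 kHz crossover.
for angle in (0, 10, 20, 30):
    d = differential_delay_s(0.15, angle)
    print(f"{angle:2d} deg off axis: {d * 1e6:6.1f} us -> "
          f"{phase_error_deg(d, 2_000.0):5.1f} deg at 2 kHz")
```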

Additional limitations are the wide bandwidth required from the drivers, which causes power and linearity issues, and the wide overlap at the crossover point, which results in large off-axis amplitude response irregularities and poor power response.

So do any of these implementations result in better sound? It is next to impossible to determine this and link it to the limited phase linearity. One could argue that most aspects that have been determined to result in improved performance in loudspeakers are made worse by these measures.

Yet nearly all research on the topic of phase linearity has relegated any audibility to secondary or tertiary prominence in perceived sound quality ranking. This is not good news set against the negative impact on established techniques that maximize sound quality. What if we could get around all of this with the use of DSP? What if we could design a speaker to satisfy all conventional parameters then fix the phase errors with DSP ahead of the system? Or use the power of DSP to develop improved crossovers that have better amplitude as well as phase responses?

This is what is being promised with some newer DSP speakers. However, it can never fix the primary problem of non-coincident drivers giving differing phase responses at different angles. Nor can it correct the phase errors without significant overall latency.
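The latency cost can be estimated from the group delay the woofer's own high-pass roll-off adds. For an idealized, magnitude-preserving (all-pass style) correction to be causal, it has to delay everything else by at least the largest group delay it is removing, and the low-frequency limit of the roll-off's group delay gives a floor for that. This Python sketch treats a sealed box as a second-order and a vented box as a fourth-order Butterworth high-pass at a hypothetical 40 Hz; real alignments are not necessarily Butterworth.

```python
import math

def butterworth_group_delay_at_dc(order, fc):
    """Low-frequency limit of the group delay (s) of an nth-order Butterworth
    high-pass (or low-pass) with corner fc: sum of 1/(Q_k * w0) over the
    second-order sections, plus 1/w0 for the first-order section if n is odd."""
    w0 = 2 * math.pi * fc
    total = 0.0
    for k in range(1, order // 2 + 1):
        q = 1 / (2 * math.sin((2 * k - 1) * math.pi / (2 * order)))  # section Q
        total += 1 / (q * w0)
    if order % 2:
        total += 1 / w0
    return total

for order, label in ((2, "sealed box, 2nd order"), (4, "vented box, 4th order")):
    gd = butterworth_group_delay_at_dc(order, 40.0)  # hypothetical 40 Hz corner
    print(f"{label}: about {gd * 1e3:.1f} ms of low-frequency group delay")
```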

James Croft

I feel these types of phase corrections can have value, but my view of what they are good for is probably different than others. I found that the audible effects of correlated phase correction (assuming that, before and after phase correction, the left and right channels don’t exhibit a phase differential between them) fell into two categories:

• Small audible differences heard under anechoic conditions, but not in standard, echoic listening environments (Editor’s Note: James Croft performed listening tests of a zero phase speaker in an anechoic chamber, where improvements were very, very subtle and not audible in a listening room.)

• Audible differences heard when large phase errors were corrected; errors larger than those found in virtually any good loudspeaker.

So, I found the best use for these phase corrective measures to be, not so much for refining standard loudspeakers, but to provide a tool to develop increased performance in other, more audible categories. My two primary categories would be:

1) To correct the phase response where phase errors cause amplitude anomalies

2) Probably more importantly, to allow phase correction of systems that are “purposely” designed to have large phase errors in exchange for some other gain in performance (e.g., improved large signal capability).

One example of Category #2 that was found to be significant was working with tapped horns. Suppose you construct a dual tapped horn in which one tapped horn is tuned to 20 Hz and the second to about 26 Hz, so that the second tapped horn has an excursion minimum where the first has an excursion maximum. If you run these in parallel, and EQ shape the response of each to keep their X-max overload to a minimum, you can “potentially” significantly increase total system output for the same driver size and enclosure volume. I say “potentially” because their output is compromised by sharp phase changes around the two tuning frequencies, such that the systems partially cancel each other instead of being additive.

However, with phase correction, the two systems can be brought in-phase with each other and exhibit the full large signal amplitude benefits of zero phase differential, and full amplitude summation. So, in this case, the benefit has nothing to do with improving phase errors for the purpose of sound “quality”, but instead using the improved phase correction for improved sound “quantity.”
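The arithmetic behind “partially cancel instead of being additive” is the coherent sum of two outputs with a phase difference between them. This Python sketch is not a model of Croft's tapped horns, and it does not reproduce his 7 dB figure; it only shows, for two equal hypothetical sources, how the summed level depends on their relative phase: about +6 dB over one source when in phase, no gain at 120°, and a loss beyond that.

```python
import cmath
import math

def summed_level_db(phase_diff_deg, level_a=1.0, level_b=1.0):
    """Level (dB re. source A alone) of two coherent sources summed with a
    given phase difference between them."""
    total = level_a + level_b * cmath.exp(1j * math.radians(phase_diff_deg))
    return 20 * math.log10(abs(total) / level_a)

for phi in (0, 90, 120, 150, 170):
    print(f"{phi:3d} deg apart: {summed_level_db(phi):+6.2f} dB")
```

Bringing the two outputs into phase, as Croft describes, moves every frequency toward the 0° row.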

The system just happens to be phase corrected, as a secondary effect, but that aspect of the improvement will be audibly insignificant. But the large signal improvement is approximately 7 dB. I’ll take that over straining to hear a phase change any day!

I found the same effect when combining a fourth-order single tuned band-pass in parallel with a sixth-order dual tuned band-pass woofer, and integrating them as a hybrid system. Without phase correction there is actually an amplitude loss, but with phase correction one can achieve 5 dB to 8 dB of increased large signal capability. There are more examples, but these are the ways that this type of phase correction can have “real world” payoffs.

In Conclusion

As you can surmise from these remarks, everyone is pretty much on the same page. Phase is just not that audible, whether unaltered or rendered to a zero degree position. Zero phase may make very subtle improvements if you are listening in an anechoic environment, but whatever minor improvements you may have heard are lost in a listening environment where phase is scattered by room reflections.

As Croft pointed out, there are legitimate uses of zero phase that provide substantial advantages, such as in the case of the Trinnov room correction device found in recording studios, cinemas, home theaters, and high-end two-channel home applications. However, the Trinnov is invoking zero phase in a room environment, which aids in the summation of multiple loudspeakers in a listening space. That, however, is very different from offering zero phase embellishments to the anechoic response of a loudspeaker. VC

Resources

F. E. Toole, Sound Reproduction: The Acoustics and Psychoacoustics of Loudspeakers and Rooms, Routledge, 2017.

This article was originally published in Voice Coil, October 2019.