Since the early days of BASIC machines, before the Mac and the PC, I have been fascinated with personal computers and the many promises they held. Over the years I realized that those machines remained closer to the typewriter than to the actual vision of a computer able to supplement and augment human abilities. Yes, we could code; yes, computers could perform calculations; yes, I could design complete magazines on a computer, but it all depended exclusively on human input. Even with today's personal computers, that promise of augmenting our own capabilities remains on hold.

Yes, smartphones transformed "computers" into actually useful things that are personal and that basically made our lives easier. Outside of the entertainment aspects, today's "computers" that we carry everywhere have introduced many "assistance" features, but with very limited capabilities. For a while, voice assistants gave us the (wrong) impression that machines could actually do something for us, but early voice interfaces only proved that, given a misinterpretation of human input, the outcome could be far from what was intended.

Then suddenly ChatGPT was unveiled and introduced us to text-based "prompt AI," while Stable Diffusion and DALL-E stunned the world with amazing image generation capabilities, and the longstanding promise of artificial intelligence was projected to a whole new level thanks to large language models and generative AI. The big AI fever that followed saw the usual suspects rushing to say "me too," and the impression was created that these primitive interaction tools powered by generative AI would change everything. As we reach the peak of inflated expectations of the Gartner hype cycle, we are all realizing the limitations of "prompt engineering," and it becomes clear that a larger vision for AI was not yet in place.

Then, on Monday, June 10, as scheduled, Apple streamed its Worldwide Developers Conference (WWDC) 2024 keynote, and all the perspectives around AI and computing seem to have changed forever.

I have no doubt that this was another historic moment for the Cupertino, CA, company and a true milestone in the history of computing and consumer technology - the one moment that made me feel excited about using computers in the future, and when suddenly AI started to make sense.

Aside from the usual clumsiness of these Apple keynote events, the painful listing of irrelevant new features, and the questionable wardrobe choices of the selected Apple presenters (made even more shocking by the extraordinary image quality of the web stream, which came with Spatial Audio, by the way), this is an event that I recommend everyone watch (you can jump to the second half, starting with the macOS updates).

The content of this June 10 keynote was a breakthrough moment, in particular for human-machine interfaces, including voice interactions. It showed how these new AI accelerators will help us increase the pace of innovation, from coding to making real-time audio processing truly adaptive. Unlike the awkward prompt-AI interfaces, this presentation showed us how AI embedded in our existing devices can become truly useful, augmenting our own capabilities.

It Was Already There

I have been experimenting with the possibilities of new AI tools for a while. I probably had a clear preview of what was coming because of all the demos that I experienced at CES and many other trade shows. Many of those have been focusing on machine learning and AI for a while now, including on connected edge devices or purely on-device. What I witnessed there is what has allowed me to forecast the exciting features for hearables.

Like all journalists, I have been following voice-to-text transcription engines for years, and audioXpress has extensively documented the progress of voice interfaces, voice assistants, and natural language understanding engines. Since generative AI was unleashed in actual services, I've tested them all to see how I could actually make use of it (not very successfully, like everyone else). I embraced the useful image generation and editing capabilities that were added to Adobe Photoshop (with very basic UI add-ons), and I have explored many specific online engines that offer incredible image-enhancing capabilities: things that could be of actual use for my job.

Most recently, I embraced the power of using generative AI for search, with Microsoft Copilot now available everywhere, including in the superb Skype app that Microsoft keeps making better every week and on which our whole editorial team depends for the work we do daily. (As an aside, I fail to understand how Microsoft puts so much effort into Teams, which is an awful tool and a resource hog on any OS, while sitting on such a powerful piece of software as Skype, which is free and available for all operating systems, desktop and mobile. Skype does everything that Teams, Zoom, and all the others do, only better and faster. If you forgot about Skype, just download it again - your old account will likely still work - and you will be amazed at how good it is.)

As I was saying, Copilot is now integrated directly into Skype and other Microsoft applications, and it is really useful because it provides the features of both a prompt assistant and a search engine, with sources for fact-checking. Microsoft Copilot is now integrated into all Microsoft 365 applications and Bing (which means that DuckDuckGo search also benefits). Copilot is based on a large language model, Microsoft's Prometheus, which is built upon OpenAI's GPT-4 and has been fine-tuned using supervised and reinforcement learning techniques. With Copilot, we can have a glimpse of how AI prompting can become useful to everyone. But that's it.

It was just the beginning. And let's not address all the concerns - reliability, privacy, cost, and others - that all these cloud-based services involve.

All along, I have been watching this space and trying to foresee how Apple would react to the massive public perception and the hype around AI. For anyone following its hardware and software evolution closely, the signs were evident across all Apple platforms. From any iPhone and Mac to AirPods Pro, Apple has long been adding the power needed to run AI on-device. The latest Apple Silicon A17 Pro, M3, M3 Pro, and M3 Max have an enhanced Neural Engine to accelerate machine learning (ML) models, which is up to 60% faster than in the (still recent) M1 family of chips, making AI/ML workflows even faster while keeping data on device to preserve privacy. And everything, from the multicore CPU and GPU to the unified memory, makes these processors able to run powerful AI image and audio processing tools.

Apple Intelligence

This year’s keynote included an extended segment focused on what Apple called Apple Intelligence (it worked as intended for the stock market at least). In their words, "the personal intelligence system that combines the power of generative models with users’ personal context — at the core of iPhone, iPad, and Mac to deliver intelligence that’s incredibly useful and relevant."

Apple Intelligence will be implemented in this year's and future devices, and will arrive with the iOS 18, iPadOS 18, macOS Sequoia, and watchOS 11 updates. Everywhere in the demonstrations of the new features, we could see clear signs of AI doing its thing in the background. The Continuity control of an iPhone directly from the Mac, and the possibility to interact with Siri (which will remain the virtual assistant, now powered by the latest AI engines) via text as well as voice, were two very big highlights for me personally. But all the updates are full of great features that wouldn't be that great if it weren't for AI.

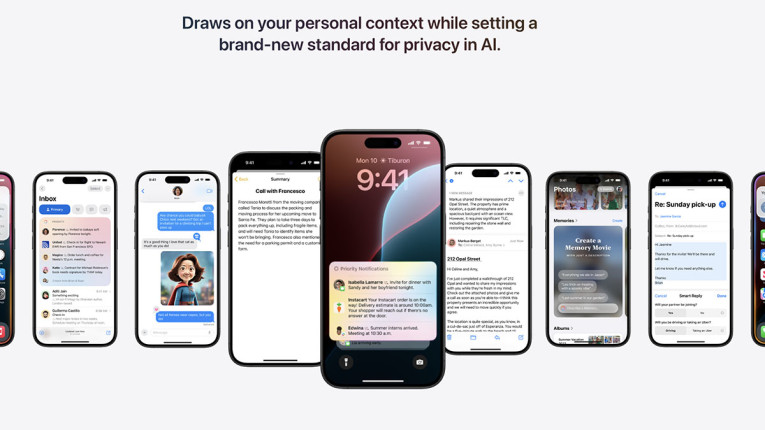

"Built with privacy from the ground up, Apple Intelligence is deeply integrated into macOS Sequoia, iOS 18, and iPadOS 18," said Craig Federighi, Apple’s senior vice president of Software Engineering. Given the tight hardware and software integration that characterizes Apple's approach, this means that AI will be running on-device in Macs, iPhones, and iPads. At the operating system level, these devices will be able to use the power of generative models with personal context to make all interactions and apps useful and relevant. As Apple explained, AI is able "to understand and create language and images, take action across apps, and draw from personal context, simplifying and accelerating everyday tasks."

And that starts with Siri taking a major step forward to become more natural, contextually relevant, and personal. Of course, we will need to know how much better Apple will be able to make basic voice recognition work - and in how many languages - since that always depends on the hardware and voice front-end to start with. But in the preview we got, Apple promised to let users type to Siri, and "switch between text and voice to communicate with Siri in whatever way feels right for the moment." That is a great move, in my opinion - likely motivated by all the hype around prompt-AI, but something I have long thought should be possible.

Of particular interest for me was to see how, powered by Apple Intelligence, Siri will become more deeply integrated into the system experience. "With richer language-understanding capabilities, Siri is more natural, more contextually relevant, and more personal, with the ability to simplify and accelerate everyday tasks. It can follow along if users stumble over words and maintain context from one request to the next." And with (multimodal) onscreen awareness, Siri makes it easy to add an address received in a message directly to the right contact card, without the need to open the Contacts app.

The use cases demonstrated in the keynote are just a glimpse of the huge potential of embedded AI, as exemplified by the new systemwide Writing Tools that allow us to rewrite, proofread, and summarize text in emails, notes, word processors, and third-party apps. Similar tools are already present in today's Apple software, but they need to be constantly controlled and monitored by the user to ensure they are useful. A deep understanding of language, combined with the contextual ability to quickly organize notes and emails regarding an upcoming trip, is something that we have all wished for. The ability to record, transcribe, and summarize audio directly on-device is another example of how AI could become pervasive.

And to allow users to benefit from the power of large generative models running in the cloud, Apple presented an extraordinary approach, described in detail in the new Private Cloud Compute initiative. As far as I could judge from the level of praise and the positive reactions among the AI and security expert communities, this establishes a new standard for privacy in AI that will likely be emulated. As Apple summarizes, Private Cloud Compute offers "the ability to flex and scale computational capacity between on-device processing and larger, server-based models that run on dedicated Apple silicon servers. When requests are routed to Private Cloud Compute, data is not stored or made accessible to Apple and is only used to fulfill the user’s requests, and independent experts can verify this privacy promise."

Apple also describes what it considers the best way to implement AI in their products like this: "Apple Intelligence is comprised of multiple highly-capable generative models that are specialized for our users’ everyday tasks, and can adapt on the fly for their current activity. The foundation models built into Apple Intelligence have been fine-tuned for user experiences such as writing and refining text, prioritizing and summarizing notifications, creating playful images for conversations with family and friends, and taking in-app actions to simplify interactions across apps."

It's not only what Apple explained about AI in the keynote. The company's developer conference offers many more sessions that clearly show how far this effort goes. And the documentation that was published provides all the details possible at this stage, showing that Apple has already been training its own models: a ~3 billion parameter on-device language model, and a larger server-based language model available with Private Cloud Compute and running on Apple silicon servers. "These two foundation models are part of a larger family of generative models created by Apple to support users and developers," it states.

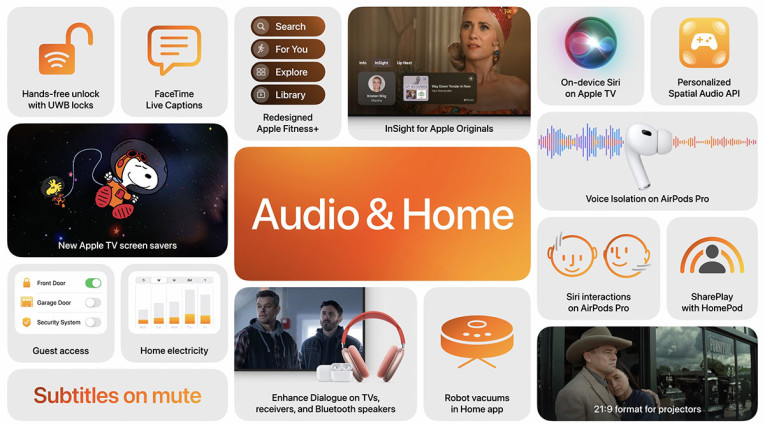

Audio Intelligence

On the audio front, because of all the pressure of the Apple Intelligence message, there was no time for new product announcements or much detail, but again the signs of AI were everywhere. In another free firmware update for the AirPods Pro coming this fall, Siri Interactions will allow users to privately respond to Siri questions with a simple head nod for yes or shake for no. Enabled by machine learning on the H2 chip, these Siri Interactions allow users to answer or dismiss calls, interact with messages, and manage notifications - all without speaking.

This is obviously not new. Bragi offers that feature as part of its platform, but it depends on the motion sensor available in the headphones/earbuds and on the external voice assistant. This, together with the fragmentation and limited capabilities of Android-based products - where the voice assistants are forced to reside for mobile applications - has restricted its use. The Klipsch ANC earbuds were the first to feature support for head "nod" recognition, as far as I could tell.

The most obvious use of AI that Apple showcased at WWDC 2024 was in the new Voice Isolation feature, which improves call quality under very difficult circumstances. The feature allows the AirPods Pro to extract only the user's voice in loud or windy environments. This is not simply noise reduction, but voice separation. I saw similar features demonstrated at CES 2024, including a very effective demonstration by Waves that included other advanced AI features. In the AirPods Pro, Voice Isolation is done with machine learning, running on the H2 chip and the paired iPhone, iPad, or Mac to isolate and enhance voice quality while removing the background noise for the listener.

These new features give us a glimpse of what's to come in AI-powered audio processing and audio features from Apple. Of course, we can expect at least a whole new generation of devices to fully enable the basic Apple Intelligence features. But at least now we know why the new generation AirPods Pro are taking so long to be announced - and why Apple has been hiring so many audio and DSP engineers.

More importantly, we now have a better understanding of how AI can become really useful and of the challenges that lie ahead to make this truly effective and pervasive. As always, Apple gets the edge on this (literally) due to its ability to start from the hardware and OS and expand to the whole ecosystem.

But it also sends a clear message that it is really important to invest in the systems-on-chip and systems-on-module that will make on-device AI really work and, for earbuds and hearables, in the processors and chipsets that will be needed for AI-based adaptive processing. I'm not surprised that some leading companies in this space decided to call it quits. aX

This article was originally published in The Audio Voice newsletter (#472), June 13, 2024.