As audio manufacturers are now realizing, voice recognition and Voice Personal Assistants will be essential forms of interaction with smart devices, wearables, and hearables, and will play a key role in the evolution of consumer electronics. But as the industry is also starting to understand, voice interaction with users requires not only natural language processing but also some degree of artificial intelligence (AI). Service providers are starting to offer voice recognition development platforms for consumer products, but integrating AI poses entirely new challenges.

IBM Watson represents a new era in computing called cognitive computing, in which systems understand the world more as humans do: through senses, learning, and experience. Watson continuously learns from previous interactions, gaining value and knowledge over time. With the help of Watson, organizations are harnessing cognitive computing to transform industries and solve important challenges. As part of IBM’s strategy to accelerate the growth of cognitive computing, Watson is open to the world, allowing a growing community of developers, students, entrepreneurs, and tech enthusiasts to easily tap into what IBM describes as the most advanced and diverse cognitive computing platform available today.

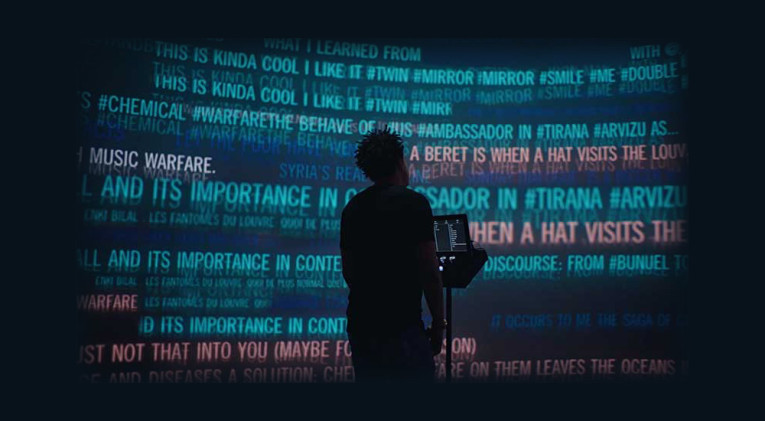

Project Intu simplifies the process for developers who want to create cognitive experiences in various form factors, such as spaces, avatars, robots, or other IoT devices, and it extends cognitive technology into the physical world. The platform enables devices to interact more naturally with users, triggering different emotions and behaviors and creating more meaningful and immersive experiences. The project, in its experimental form, is now accessible via the Watson Developer Cloud and is also available on Intu Gateway and GitHub.

Developers can integrate Watson services, such as Conversation, Language, and Visual Recognition, with the capabilities of the “device” so that it can, in essence, act out the interaction with the user. Instead of programming each individual movement of a device or avatar, developers can use Project Intu to combine movements appropriate for specific tasks, such as assisting a customer in a retail setting or greeting a visitor in a hotel, in a way that feels natural to the end user.

Typically, developers must make architectural decisions about how to integrate different cognitive services into an end-user experience, such as what actions the system will take and what will trigger a device’s particular functionality. Project Intu offers developers a ready-made environment for building cognitive experiences on a wide variety of platforms, from Raspberry Pi to macOS, Windows, and Linux machines, to name a few. As an example, IBM has worked with Nexmo, the Vonage API platform, to demonstrate how Intu can be integrated with both Watson and third-party APIs, adding voice-enabled experiences to cognitive interactions through the Nexmo Voice API’s WebSocket support.

“IBM is taking cognitive technology beyond a physical technology interface like a smartphone or a robot toward an even more natural form of human and machine interaction,” says Rob High, IBM Fellow, VP and CTO, IBM Watson. “Project Intu allows users to build embodied systems that reason, learn and interact with humans to create a presence with the people that use them – these cognitive-enabled avatars and devices could transform industries like retail, elder care, and industrial and social robotics.”

The growth of cognitive-enabled applications is accelerating sharply, and IBM believes that developer teams will increasingly build cognitive/AI functionality into their applications and services. Project Intu continues IBM’s work in the field of embodied cognition, drawing on advances from IBM Research as well as on the application of cognitive and IoT technologies. Releasing Project Intu as an experimental offering lets developers try it out and provide feedback, which will serve as the basis for further refinements as it moves toward beta.

At the recent IBM Watson Developer Conference in San Francisco, IBM announced, in addition to its existing academic partnerships, a partnership with Topcoder, a global software development community of more than one million designers, developers, data scientists, and competitive programmers. The partnership aims to advance learning opportunities for developers who want to harness Watson to create the next generation of artificial intelligence apps, APIs, and solutions. It also gives businesses access, through the Topcoder Marketplace, to a larger pool of developers experienced in cognitive computing and Watson.

“The potential of Watson to transform the way we work and live is unlimited. The artificial intelligence revolution presents developers, entrepreneurs and mid-career programmers with an opportunity to create innovation at a level we’ve never seen before,” says Willie Tejada, IBM Chief Developer Advocate.

ibm.com/Watson