Adam Levenson: You’ve been in pioneering roles since you started in automotive, and now you’re working on groundbreaking framework technology at DSP Concepts. How did you get here? Can you share some highlights from the past, let’s say, 40 years?

John Whitecar: I always wanted to be in audio. My education is in acoustics and signal processing, so that’s what I studied, and I was on the fence between music and technology. My teacher said, “You’ll never make money in music.” But I had an interest in concert halls, reproduction systems, environments, and how they translate to the experience for the listener. How does that all work together? And when I look back through my career, everything I’ve been in has been about improving perceptual quality, whether it’s speech quality, telephony, playback, or radio. I always asked, “How do you get the perceptual quality of the system to be as good as it can be?”

Levenson: I know you were in the telecom industry for the first few years of your career, in speech technology. What was your first gig in automotive?

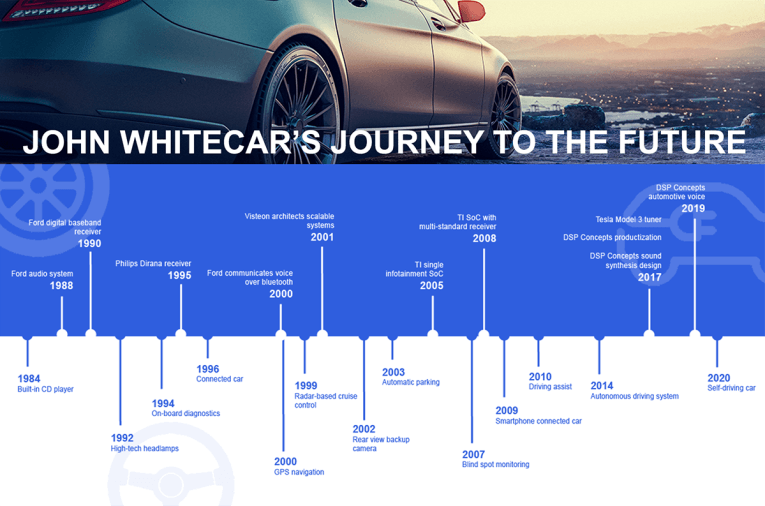

Whitecar: I went to Ford in 1988 to work in audio system design. I designed sound systems for the Explorer—it was one of the first JBL branded systems. I did acoustic testing and helped set up their lab. So that was all about how do we create really high-end sound systems in standard mass-market vehicles. It was Explorers, T-birds, and Town Cars.

I had studied DSP previously, and then we started looking at “could we digitize all this?” To equalize cars back in the early days, you had an analog amplifier and you had to fit resistors into these circuits to get matching curves. It was weeks of effort. So I said, we can cut this down to days if we just digitize it and then take the transfer function and create the inverse transfer function. And so we invented our own tuning tools. This was the 1990s so we had 40MHz processors, and we had to get everything to crunch, and it was all assembly code. So we did the very first OEM production digital amplifier at Ford. Nobody knows about this stuff. It was all internal at Ford and they didn’t advertise it—it just worked.
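As an illustration of the idea described above (measure the car's transfer function, then apply its inverse), here is a minimal Python sketch. It is not the Ford tuning tool, which the interview says ran as assembly code on 40MHz processors. The function name `inverse_eq_gains_db`, the per-band dB representation, and the boost and cut limits are all assumptions made for this example.

```python
def inverse_eq_gains_db(measured_db, target_db=None,
                        max_boost_db=6.0, max_cut_db=12.0):
    """Return per-band correction gains in dB.

    measured_db: measured magnitude response of the cabin, one value per band.
    target_db:   desired response per band (defaults to flat, 0 dB).
    The correction is the inverse of the measured deviation from the target,
    limited so that deep notches are not boosted without bound (hypothetical
    limits, chosen only for illustration).
    """
    if target_db is None:
        target_db = [0.0] * len(measured_db)
    if len(target_db) != len(measured_db):
        raise ValueError("measured and target must have the same number of bands")

    gains = []
    for measured, target in zip(measured_db, target_db):
        correction = target - measured  # in dB, inverting is a subtraction
        correction = max(-max_cut_db, min(max_boost_db, correction))
        gains.append(correction)
    return gains


# Example: four bands measured at +3, -2, -10, and +15 dB relative to flat.
print(inverse_eq_gains_db([3.0, -2.0, -10.0, 15.0]))
```

Working in dB turns the inverse transfer function into a simple sign flip per band. The clamping reflects a common practical choice: a fully exact inverse would demand large boosts at deep dips in the response.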

And then I moved into digitization of radios, and I said OK, we can take this technology into receivers. So we did a ground-up design with Texas Instruments (TI) A-to-Ds [analog-to-digital converters] and DSPs, and came up with a whole digital signal processing baseband receiver design, and followed up with all of the audio signal playback designs. And then we found out that Philips Semiconductors (now NXP) was off on its own doing this, and I went in and asked if we could jump on. And so we were the only non-Philips customer that was allowed to write code on their processor. So, we rewrote all the stuff that we had done, and we augmented it with the hardware capabilities that they had. Then we did a custom chip with them where we took it out even closer to the RF. So now we’re digitizing right after the down converter, and then we can do all kinds of things, from enhanced multipath handling to noise blanking and more. The end goal was improving the perceived quality of the audio from the broadcaster.

During this era, the only source of audio in the car was the cassette deck and AM/FM broadcast. So it was all about how do we improve the perceived quality of the audio coming through the air, through the car speakers. I spent all kinds of time doing studies to determine whether one approach sounds better than another using group studies. We had a binaural setup where we could pull people in and do double-blind testing. We were pretty methodical about the whole thing. So we could make trade-offs based on the preferences coming out of the group testing. And so we did a lot of work, not only just basic down-conversion of the signals, but also on the back-end adaptation to the reception environment. And then Philips came out with Dirana, the automotive audio DSP. They moved the design to the next level with the latest Sigma Delta A-to-D technology and reduced cost. Dirana has been around for more than 20 years now.

Levenson: So it was around this time that this digitization approach became available to the wider industry.

Whitecar: Yes, and so then I moved over to Visteon and we got into the whole infotainment side. When Visteon made the decision to sell to other OEMs beyond Ford, we were looking at how we develop architectures for scalable systems. So we had to have platforms. We developed platform architectures, looked at SoC [System on Chip] development and figured out what the best approach was for each class of product, and we came up with a collection of architectures for different classes of products, and, of course, we named them after composers. I did that for quite a while, driving the whole infotainment architecture, what SoC we should go after, and how do we bring new DSP technology in.

We were doing some of the very first Bluetooth designs before there were any standards. It was the late 1990s—it was the Wild West. And then later on, the Bluetooth standard caught up, and came up with standard profiles for automotive. We had to develop the whole criteria for good voice and echo performance in a car. So we developed a series of test metrics around that, and came up with a number of corner cases for what kind of noise is encountered when we drive under certain circumstances, taking into account how that noise translates over the cellular network. We spent a lot of time on that front, and then we got business with Nissan and BMW.

Levenson: All of these trends must have been leading up to the first in-vehicle infotainment systems.

Whitecar: Infotainment had its inception in the late 1980s with a display and touchscreen, but functionality was quite limited. In the 1990s OEMs were pulling together designs, but they were hardware-centric, requiring multiple ECUs. So, at this time Texas Instruments was looking for someone to define SoC architectures for the automotive market to bring system cost down by minimizing ECUs and the number of chips required to build a system. The new thing at the time was encoded audio, and the focus was creating MP3 decoders. That was the genesis of the TI Infotainment business. A whole DSP was used to perform an MP3 decode. So, I joined TI, and we pulled together requirements for a single SoC infotainment system.

Bringing together color display, graphics, voice recognition, navigation, audio, radio, and Bluetooth connectivity into a single chip was a challenging concept at that time. It was the first time anyone had done that. We designed and pushed it out to market. The thinking, which was a bit new at TI, was to build what the market needed. Let’s build this thing and they will come. They were very nervous about it, but we sold a ton of them. It turned into a $300 million annual business and we were the leaders.

By the time we got to Jacinto 4 and 5 SoCs, we had taken over much of the high-end infotainment business. And that’s when Nvidia came in with very high-end graphics capabilities. We weren’t really willing to go down that path because most of the fundamental chipsets came from the wireless and tablet market. Nvidia came from the gaming world with high-resolution displays and high-end graphics. TI decided not to go down that path so that’s when Jacinto 6 kind of tapped out. Plenty of horsepower, but the graphics capabilities were limited. So Jacinto 6 became the second SoC with Nvidia graphics sitting on top of it.

Levenson: So you were at TI for more than a decade. What happened next?

Whitecar: Tesla called. In 2015 they needed someone to lead the broadcast radio roadmap and develop a best-in-class receiver to match the brand expectations. So I said, “Yeah, I can do this.” So, we designed a state-of-the-art six-tuner radio system that was full diversity in every mode, and could seamlessly blend audio across bands. So that if you were driving in Europe and listening to a DAB station, and you drove out of DAB range, it would seamlessly blend to the FM station that was on the same channel. You could drive around the region and never have to touch the dial. That was pretty complex because it was a control system as well as a lot of signal processing to align the signals and make sure that they blended seamlessly. While at Tesla I met Chin Beckmann during orchestra rehearsals.

Levenson: Chin Beckmann, CEO and co-founder of DSP Concepts and a concert pianist!

Whitecar: Yes, she was the pianist. I was the tuba player. It was 2016. She knew I was working at Tesla, and we would talk during intermissions or during breaks at rehearsals, and she would tell me about her challenges in starting DSP Concepts. I would give her my advice, like “here’s how you have to talk to the automotive OEMs,” “here’s how you have to talk to the Tier Ones,” “this is how you message to them.” So finally she said, “Why don’t you just come to work for us?” So I’m here, and the rest is history.

Levenson: Give us some insight into your role at DSP Concepts.

Whitecar: I’m the automotive product manager, responsible for setting the technology road maps, product road maps, understanding the market, talking with customers, and finding out where they’re headed. So I’m looking at RFQs and requirements and figuring out what kind of product features customers are looking for, and then putting together a technology and product road map that aligns with where the market is headed. And so, understanding audio features, understanding the voice features, understanding things like playback features, and even other things like safety-related audio and even how audio applies to autonomous vehicles and autonomous driving situations.

Levenson: From your perspective, how has the automotive business at DSP Concepts changed since you started? Obviously, it has grown.

Whitecar: Yes, it’s grown. At the starting point we basically created playback systems for customers, and we tuned them. It was all bespoke, highly customized. It was more like a consulting services business. Then we built out the tuning tools to help us to tune faster. In fact, one of the reasons that Chin brought me on was to help productize things, to really help scale. To go from being a great services company to packaging things up and productizing them and to create entities that salespeople can sell and that our support groups could more easily support. It was about 2017 when we made that pivot, after DSP Concepts got the A-round funding.

So Paul Beckmann (DSP Concepts CTO and co-founder) and I sat down, and we really started carving out what’s needed for automotive. We started looking at how we could take some of the voice technology that DSP Concepts perfected for consumer and enterprise and bring it into automotive. It was still early for the market in automotive voice assistants. There weren’t any Alexa Auto products yet, for example. And we said, “We know Alexa, we know Amazon, we know how this stuff works. Let’s start promoting that,” and so we started getting some bites, especially with the EVs. And that’s where it started. And then the OEMs were like, while you’re here, why don’t you own the mics and do the telephony. So then we had playback, voice control, and telephony. That’s how it started with Lucid and Rivian.

Levenson: When you think back on your vast experience, and then look at where we are now in the industry, what are the main things that have evolved?

Whitecar: So to me, there are four things that have really moved the needle. First, sensor costs have come down. Microphones used to be pretty clunky ECM modules, expensive, and you could only afford to put one in the car. And then later it was like, I could put these little PDM mics all over the car. They’re twenty, thirty cents, really inexpensive. Now microphone sensors are ubiquitous in cars. The second one is compute power. Compute power has exploded with these GHz [gigahertz] plus octa-core devices and quad-core DSPs. The third one is the whole EV movement and autonomous driving that has forced a totally different usage model. The car is now a moving theater. It’s ultimately a different use model when we get to full autonomy and ride sharing.

Thinking back, when I was doing audio at Ford back around 1988 and then all the way to about 2005, the technologies didn’t change much. In 17 years, it didn’t change much. What we put in Dirana or in the car DSP was sufficient.

Six-channel system, 5.1, add a woofer, take away a woofer. That was really the only choice. Should I put in a subwoofer or not? It was pretty simple. Dolby was trying to get in with Pro Logic, failed. DVD audio was trying to get in, failed. Super CD, failed. All of these high-end things failed because they couldn’t get the customers excited about it. And so anything that was interesting in audio just didn’t move because there was no inflection point that could really change the model.

Levenson: So what was that inflection point?

Whitecar: The inflection point was that voice became important. That’s the fourth thing that has really moved the needle. Now with voice, the user is really interacting with the vehicle. At Visteon, we had a Jaguar with voice control in 1998. All it did was set the AC and turn on the radio because that’s all you could do. It wasn’t connected to the internet and it was kind of clunky, and it only worked for American native speakers. If you had any foreign accent, it wouldn’t work. It was not a success.

But now, the speech technologies are fantastic. So the demand for voice technologies came out of the fact that it actually works now. Now OEMs have microphones all over the place, and that introduces all kinds of other use cases. I want to make a phone call, that’s the obvious thing. I want to be able to have a conference call with everybody in the car. I want to go ask for the weather. I want to get a route. So now there’s an explosion of the back-end ecosystem and use cases. That’s huge. Now it’s a question of which back-end do I want to use? I want to get music from Spotify. I want to get the weather from Amazon. I want to get my route from Google. I want to change the climate control, so now I just want to do that locally. So now the problem is really about how do I deal with multiple back-ends, and that’s changed just in the last five years. From almost no connectivity and no real good use cases to now.

Levenson: And you said that the EV movement is another thread that moved the needle.

Whitecar: So the electric vehicles brought new problems: road noise. We don’t have engine noise anymore to mask the road noise. So now everybody hears everything, and it’s all a trade-off of how much damping material does the OEM want to put into the car to quiet it versus some active way to do it. Given that the sensors and the speakers are installed in the car already, is there something we can just layer on with software? And that’s where the whole compute thing comes in. There’s tons of compute power now. We can do complicated functions in software that would have taken a completely separate ECU to do previously. And this really goes into this whole software-defined vehicle that you hear people talking about.

Back in the day, I would have a group that did nothing but design radios. I would have a group that did nothing but design audio systems and amplifiers. I had a group that dealt with the voice system. I had a group that dealt with noise, vibration, and harshness (NVH) and noise abatement. I had a group that dealt with safety. They were all off in their corners, designing things and making boxes for every one of these problems. And the boxes were very loosely coupled to each other through some CAN network. Now you have a system where there are like eight cores running at 1.5GHz. You can do a lot with that.

Now you’re saying, “Let’s centralize all of this compute power.” Now all of these boxes have much more connectivity with each other, and we can do more things together, knowing about each other. The safety systems can respond to how the person in the car is using the vehicle. For example, how many people are in the car? Is the driver talking to somebody? Is the driver listening to loud music? This is all information that’s available centrally, so that it can be taken and applied to all the systems. So now you have these interactions between these different audio components that were all separate before.

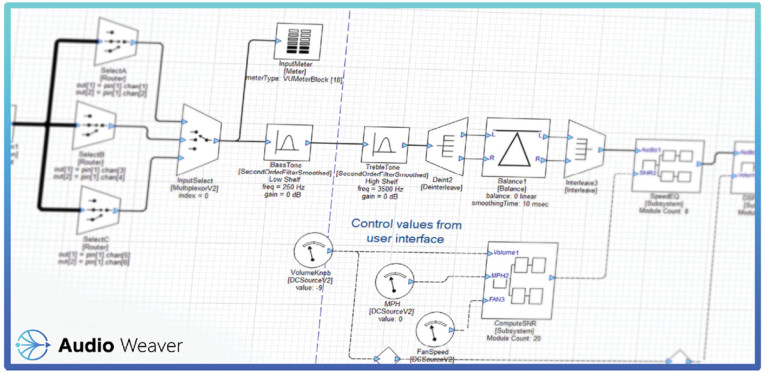

Now they’re coming together and you’ve got the compute power to do it, but now you have the problem of how do you get it done? This is all software and the software modules all need to talk to each other. That’s why you need a framework like Audio Weaver.

Instead of having to do an integration of an AVAS solution with an integration of a voice solution with a playback solution, these are all modules on Audio Weaver, all pulled together. Now in the Audio Weaver framework everybody is speaking the same language, and all of the modules know how to communicate with each other. Now you can leverage the synergies.

By knowing what different parts of the car are doing, we can make the system smarter. The computational piece is really what pulled everything together.

Levenson: What about internal Engine Sound Enhancement (ESE), chimes, and external Acoustic Vehicle Alerting Systems (AVAS) that come with EVs? How do you see that evolving?

Whitecar: The movement is toward creating soundscapes in the vehicle that are tied to the brand. We’re talking about signature-designed orchestrated systems that work harmoniously and are branded. This is an initiative that’s being worked on right now and some of the car companies actually have early versions.

Levenson: So, actually tuned with the external and internal sound of the vehicle?

Whitecar: Yes, part of the vehicle tuning. So I get into the car and I get a certain wake-up sound with chimes. The chimes all have a cohesiveness to them, they’re intuitive, and there’s a soundscape that’s created. And it’s consistent, like I know I’m in a Porsche, I know I’m in an Audi, I know I’m in a BMW. Just as iconic and identifiable as the OEM logos. This is definitely a strong trend that’s going to blow up. And that’s also coming out of the fact that I have more compute power and more ability to do a lot.

Back when I was at Ford, we would play .wav files, and you know we had 4KB of memory. We could go like “beep.” That was it! And so it wasn’t realistic. But again, the ideas have been around forever. But it’s always that you have to wait for the compute power to catch up, and to have a powerful framework available to the point that now we can feasibly implement this. So a lot of times when I hear people announcing what they think are new innovations, I think, yeah, we thought of that in 1982, but we just couldn’t do it. That’s why sometimes I get a little cranky because it’s all been thought of before!

This article was originally published in audioXpress, June 2022.