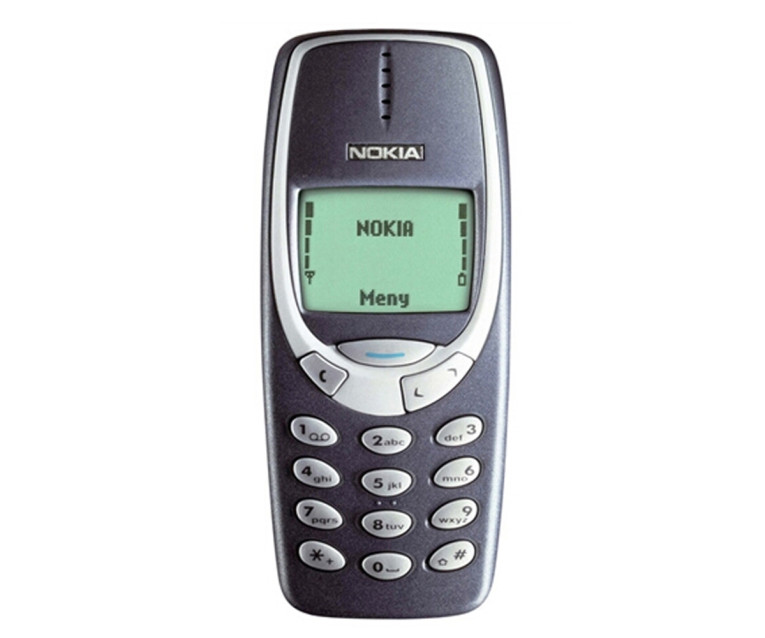

Let’s start our evolutionary journey by briefly reviewing some ancient history. The genesis of DSP is arguably in 1948, when Claude Shannon published his paper “A Mathematical Theory of Communication,” which explained the key concepts of how information is transmitted and received as digital bits. The earliest practical DSP applications in audio were in speech processing for telephony in the 1960s, and the first dedicated audio digital signal processor chip was Texas Instruments’ TMS5100, which debuted in the company’s Speak & Spell in 1978.

For music processing, we need to fast-forward a few years: CD players joined the party in the 1980s, and MP3 players arrived at the end of the 1990s. As digital audio became mainstream, DSP became increasingly important, bringing high-quality music and sophisticated processing features to low-cost consumer devices.

The Classic DSP Era

In the era before smartphones, DSPs were relatively simple by today’s standards. A typical DSP device (e.g., the CEVA TeakLite family) would have an architecture that enabled it to directly access the digital audio data in memory, perform a mathematical operation on it, and write the result back to memory.

A very common operation in DSP applications is the Multiply-Accumulate (MAC), in which two numbers are multiplied together and the result is added to an accumulator, or running total. Because the MAC is performed so frequently, often many times for every audio sample, the processor must complete it as quickly and efficiently as possible. The TeakLite DSP provides a 16 × 16-bit MAC, with either one or two simultaneous MACs supported per device.

By performing such operations repeatedly on every digital sample, the DSP could encode and decode the audio signal (e.g., decoding the data read from a CD) and then apply processing such as filtering. Users may have noticed improved voice and speech quality, but most of the DSP activity was hidden under the hood.
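To make the MAC concrete, here is a minimal sketch, written in Python purely for readability, of the kind of filtering loop a classic DSP ran: a direct-form FIR filter, in which each output sample is the sum of recent input samples weighted by the filter coefficients, computed with one MAC per coefficient. The function names are hypothetical, and because Python integers never overflow, the 16-bit operand widths of a device such as the TeakLite are not modelled.

```python
def mac(acc: int, a: int, b: int) -> int:
    """One multiply-accumulate step: multiply a by b and add to the running total."""
    return acc + a * b


def fir(samples: list[int], coeffs: list[int]) -> list[int]:
    """Direct-form FIR filter: y[n] = sum over k of coeffs[k] * samples[n - k].

    Each output sample costs len(coeffs) MACs. Input samples before the
    start of the signal are treated as zero. On a 16-bit DSP the operands
    would be 16-bit values feeding a wider accumulator; Python integers
    never overflow, so those widths are not modelled here.
    """
    out = []
    for n in range(len(samples)):
        acc = 0  # clear the accumulator once per output sample
        for k, h in enumerate(coeffs):
            if n - k >= 0:
                acc = mac(acc, h, samples[n - k])
        out.append(acc)
    return out


print(fir([1, 2, 3, 4], [1, 1]))  # [1, 3, 5, 7]
```

With coefficients `[1, 1]`, each output is a sample plus its predecessor. The inner loop is exactly the operation the MAC hardware was built to accelerate.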

The DSP device would have used simple fixed-point arithmetic and run at a clock frequency of somewhere between roughly 50 MHz and 200 MHz, very slow by today’s standards. This meant that software had to be hand-coded in low-level assembly language to achieve the required performance, which made programming difficult, although vendors did provide some software libraries for common tasks to make things easier.
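“Fixed-point” here typically means a format such as Q15, in which a 16-bit signed integer represents a fraction in the range [-1, 1). The sketch below emulates Q15 arithmetic in Python, assuming round-to-nearest on products and saturation on overflow; real DSPs implement their own rounding and saturation modes in hardware, and the helper names are illustrative only. It shows the bookkeeping programmers had to manage by hand: the product of two Q15 values is a Q30 value that must be shifted back, and the one overflow case (-1 × -1) must be clamped.

```python
Q = 15  # Q15: 1 sign bit and 15 fractional bits in a 16-bit word
INT16_MIN, INT16_MAX = -32768, 32767


def saturate16(v: int) -> int:
    """Clamp a value to the signed 16-bit range instead of letting it wrap."""
    return max(INT16_MIN, min(INT16_MAX, v))


def to_q15(x: float) -> int:
    """Convert a float in [-1, 1) to Q15; 1.0 itself saturates to 32767."""
    return saturate16(round(x * (1 << Q)))


def from_q15(v: int) -> float:
    """Convert a Q15 integer back to a float."""
    return v / (1 << Q)


def q15_mul(a: int, b: int) -> int:
    """Multiply two Q15 values.

    The 16 x 16-bit product is a Q30 value held in 32 bits. Adding 2**14
    (half of one Q15 step) and shifting right by 15 rounds it back to Q15;
    saturation handles the single overflow case, -1 x -1 = +1, which Q15
    cannot represent.
    """
    product = a * b
    return saturate16((product + (1 << (Q - 1))) >> Q)


half = to_q15(0.5)                       # 16384
print(from_q15(q15_mul(half, half)))     # 0.25
print(q15_mul(INT16_MIN, INT16_MIN))     # 32767 (saturated)
```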

Everything Connected

Moving on to the iPhone era, DSPs are becoming much more powerful, which means they can be used for increasingly sophisticated tasks. Smartphones are taking over from MP3 players, and mainstream consumers are starting to experience ever-higher sound quality thanks to DSPs in their TVs, computers, and video game consoles. Just as importantly, we’re moving to a connected world, with Bluetooth, Wi-Fi, and cellular everywhere, and audio streamed between multiple devices, all of which requires extra processing.

Noise control for voice interaction is becoming smarter, with beamforming techniques that use DSP to combine the signals from multiple microphones in devices such as smart speakers, reinforcing sound from the talker’s direction while suppressing noise from elsewhere. Speech recognition is bringing sci-fi voice interfaces to life, in the car and on our phones. If you’re watching a movie at home, you may well be listening to a 5.1 multichannel system, and cinemas are investing in high-quality sound to stay a step ahead.
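The simplest form of beamforming is delay-and-sum: each microphone’s signal is delayed so that sound arriving from the chosen direction lines up across all channels, and the aligned signals are averaged. The Python sketch below assumes whole-sample delays that are already known; practical smart-speaker beamformers are typically far more elaborate (fractional delays, adaptive weighting, frequency-domain processing), so treat it as an illustration of the principle, not a product implementation.

```python
def delay_and_sum(channels: list[list[float]], delays: list[int]) -> list[float]:
    """Delay-and-sum beamformer with whole-sample delays.

    channels[m] holds the samples from microphone m, and delays[m] is how
    many samples to delay that channel so that sound from the steering
    direction lines up across all microphones. Aligned sound adds
    coherently; sound from other directions is partly averaged away.
    """
    n_mics = len(channels)
    length = len(channels[0])
    out = []
    for n in range(length):
        acc = 0.0
        for m in range(n_mics):
            idx = n - delays[m]          # read an earlier sample to apply the delay
            if 0 <= idx < length:
                acc += channels[m][idx]
        out.append(acc / n_mics)         # average so an aligned signal keeps unit gain
    return out


# A click reaches microphone 1 one sample before microphone 0.
mics = [[0.0, 0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0, 0.0]]
print(delay_and_sum(mics, [0, 1]))  # steered at the click: [0.0, 0.0, 1.0, 0.0]
print(delay_and_sum(mics, [0, 0]))  # not steered: [0.0, 0.5, 0.5, 0.0]
```

For a linear array, the delay between adjacent microphones follows from their spacing d, the arrival angle θ, the speed of sound c, and the sample rate fs, as d·sin(θ)·fs/c samples.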

Behind all of these developments is the DSP. But as the user experience improves, the processing behind it keeps growing more sophisticated. At the same time, most of these applications run on portable devices, so battery life is vital, which means DSPs need very low power consumption to be viable. There’s a constant trade-off between features, sound quality, and power usage: if users have to charge a gadget too often, they’re unlikely to care how great it is in use. In fact, engineers working with DSPs often describe devices in terms of “performance per watt,” or how much a processor can do for a given amount of power, rather than raw performance.

These new demands led DSPs to add more powerful number-crunching engines and a greater ability to process data in parallel, using Very Long Instruction Word (VLIW) architectures, in which a single wide instruction bundles several operations that execute simultaneously. They mostly moved from fixed-point to floating-point arithmetic, and clock speeds increased to around 500 MHz to 600 MHz.

For example, the CEVA-X and the CEVA-BX families provide high performance with support for up to eight 16 × 16-bit and four 32 × 32-bit MACs. Overall, these technology improvements meant that the hardware could keep up with users’ expectations, overcoming the performance limitations of older DSPs.
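The figures quoted above support a quick back-of-envelope budget: peak MAC throughput is the number of MACs per cycle multiplied by the clock rate, while a direct-form FIR filter needs one MAC per tap, per sample, per channel. The sketch below takes eight MACs per cycle at 600 MHz from the text; the 512-tap stereo filter at 48 kHz is an arbitrary example workload, and the peak figure assumes every MAC unit is busy every cycle, which real code rarely achieves because of memory accesses, pipeline stalls, and control code.

```python
def peak_macs_per_second(macs_per_cycle: int, clock_hz: float) -> float:
    """Theoretical peak, assuming every MAC unit does useful work every cycle."""
    return macs_per_cycle * clock_hz


def fir_macs_per_second(taps: int, sample_rate_hz: float, channels: int = 1) -> float:
    """Load of a direct-form FIR filter: one MAC per tap, per sample, per channel."""
    return taps * sample_rate_hz * channels


peak = peak_macs_per_second(8, 600e6)         # eight 16 x 16-bit MACs at 600 MHz
load = fir_macs_per_second(512, 48_000, 2)    # example: 512-tap stereo FIR at 48 kHz
print(f"peak: {peak:.3g} MAC/s, FIR load: {load:.4g} MAC/s")
print(f"FIR uses {100 * load / peak:.2f}% of peak")  # about 1.02%
```

Even this fairly long filter needs only about 1% of the theoretical peak, which hints at how much headroom was available for running several processing stages at once.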

On the software side, it was no longer practical to program DSPs in low-level assembly language. Instead, software engineers wrote their code in the higher-level C language, which typically ran somewhat less efficiently than hand-written assembly but was far quicker and easier to develop in. Device vendors also provided more comprehensive libraries and pre-written modules to cut development time.

Experience and Interaction, Driven by AI

Bringing things up to date, we are now surrounded by DSPs in the gadgets around us more than ever. We can think of ourselves as entering a new “Experience and Interaction” stage, with technology in transition and new applications appearing and becoming mainstream.

Smart speakers such as the Amazon Echo, for example, are in many of our homes and rely on DSP to process the audio captured by their microphones. They are also increasingly adding Artificial Intelligence (AI) capabilities, using neural networks to process our speech and to learn how to respond.

This enables the devices to perform Natural Language Processing (NLP), which is a jargon-heavy way of saying you can talk to a gadget as if it were a person. You don’t need to remember exactly what wording Alexa needs to hear; just ask it a question and you’ll get an answer. It seems simple in use, but NLP requires a lot of clever signal processing, and it makes the user experience far better. To achieve the required performance, vendors such as CEVA now often include dedicated speech recognition engines and NLP capabilities in their DSPs targeted at voice applications.

We’re seeing a step up in the audio quality provided in our cars, with automakers recognizing that an audiophile music experience can be a big selling point. Reducing noise (e.g., from the road and engine) is another automotive audio application that is getting increasing attention.
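In-car road-noise cancellation is usually a form of active noise control, and such systems are commonly based on variants of the filtered-x LMS (FxLMS) algorithm, which also models the acoustic path from loudspeaker to listener. As a simpler stand-in, the sketch below implements the plain LMS adaptive noise canceller that FxLMS builds on: an adaptive FIR filter learns how noise picked up by a reference sensor appears in the primary microphone signal, and subtracts its estimate. The sensor arrangement, the step size `mu`, the tap count, and the 0.8 noise path are illustrative assumptions, not values from any automotive system.

```python
import random


def lms_cancel(reference: list[float], primary: list[float],
               taps: int = 4, mu: float = 0.05) -> list[float]:
    """Adaptive noise canceller using the LMS algorithm.

    reference: noise picked up by a sensor near the noise source
    primary:   the wanted signal plus that noise, after some unknown path
    Returns the error e[n] = primary[n] - y[n], where y[n] is the adaptive
    filter's estimate of the noise in the primary input.
    """
    w = [0.0] * taps   # adaptive FIR weights, learned on the fly
    x = [0.0] * taps   # delay line holding the most recent reference samples
    out = []
    for r, d in zip(reference, primary):
        x = [r] + x[:-1]                                    # shift in the newest sample
        y = sum(wk * xk for wk, xk in zip(w, x))            # noise estimate: a chain of MACs
        e = d - y                                           # what is left after cancellation
        w = [wk + 2 * mu * e * xk for wk, xk in zip(w, x)]  # LMS weight update
        out.append(e)
    return out


rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
primary = [0.8 * v for v in noise]     # hypothetical path: the noise, scaled by 0.8
residual = lms_cancel(noise, primary)
print(max(abs(v) for v in residual[:10]), max(abs(v) for v in residual[-100:]))
```

Because the primary input here contains only noise, the residual decays toward zero as the first weight converges to 0.8. If speech or music were added to the primary input, that wanted signal would remain in the output while the correlated noise is removed.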

Many new gadgets, such as smartwatches, virtual reality (VR) glasses, and hearables that add smart features such as 3D audio and sound sensing, are also starting to become mainstream, with varying degrees of success.

All of these new capabilities and devices rely on DSP for audio processing, and in many cases this means DSPs need extremely high performance while keeping power consumption to a minimum. With more and more features expected of our gadgets, there’s constant pressure to do more with less power, both to extend battery life and to allow a smaller battery in space-constrained portable products.

Weight, size, and time between charges are important differentiators for consumers, so manufacturers need the right DSP to stay competitive. Processing is also increasingly handled on edge devices, such as the Echo speaker, rather than by sending data to the cloud, which improves security, reduces latency, and removes the need for an ever-present Internet connection for those tasks.

Meanwhile, many consumer products now include a DSP “core” as part of a larger silicon chip. Companies such as CEVA are developing power-efficient DSP cores that can be integrated into other chips, which drives down cost and complexity. The high performance needed by many applications can be provided by “vector processing” DSPs, which apply a single instruction to multiple data elements at the same time to increase the throughput of the processor.
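Python cannot execute in parallel the way a vector unit does, but the sketch below illustrates the idea by counting steps: a scalar loop performs one MAC per step, while the emulated vector version processes up to `LANES` element pairs per step into separate per-lane accumulators and sums them at the end. The lane count of 8 is a hypothetical value chosen for illustration, not the width of any particular CEVA core.

```python
LANES = 8  # hypothetical vector width: element pairs processed per vector step


def dot_scalar(a: list[int], b: list[int]) -> tuple[int, int]:
    """Scalar dot product, one MAC per step. Returns (result, steps)."""
    acc, steps = 0, 0
    for x, y in zip(a, b):
        acc += x * y
        steps += 1
    return acc, steps


def dot_vector(a: list[int], b: list[int], lanes: int = LANES) -> tuple[int, int]:
    """Emulated vector dot product. Returns (result, steps).

    Each step multiplies up to `lanes` element pairs into separate per-lane
    accumulators (in hardware, all lanes work in the same cycle); the lanes
    are summed in a final reduction.
    """
    lane_acc = [0] * lanes
    steps = 0
    for i in range(0, len(a), lanes):
        for lane, (x, y) in enumerate(zip(a[i:i + lanes], b[i:i + lanes])):
            lane_acc[lane] += x * y
        steps += 1
    return sum(lane_acc), steps


a, b = list(range(20)), [1] * 20
print(dot_scalar(a, b))   # (190, 20)
print(dot_vector(a, b))   # (190, 3)
```

Both versions produce the same result, but the vector version needs 3 steps instead of 20. The final reduction across lanes is extra work that a real vector DSP also has to perform.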

Clock speeds have now reached 1 GHz or more, and multiple processing capabilities are combined in a single device. For example, the CEVA SensPro is a vector DSP that also includes scalar processing, as well as support for neural networks.

This means it can handle huge amounts of data from real-world sensors and has the flexibility to meet the requirements of diverse applications. Software has also become more complex, with new support needed to configure neural networks. A modern DSP such as the SensPro, for example, ships with software development tools, including a compiler, a debugger, and a real-time operating system (RTOS), as well as framework support and compute libraries for neural networks.
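Neural-network support fits naturally on a DSP because inference is dominated by the same MAC operation described earlier. As a rough illustration, the sketch below computes a fully connected layer with 8-bit inputs and weights, a wide accumulator, a ReLU activation, and a right shift that brings results back into the int8 range. The power-of-two shift is a simplified stand-in for the per-tensor or per-channel scaling that real quantized-inference libraries use, and `dense_int8` is a hypothetical helper that does not reflect any CEVA compute library.

```python
def dense_int8(x: list[int], weights: list[list[int]], bias: list[int],
               shift: int) -> list[int]:
    """Fully connected layer with int8 inputs and weights (hypothetical helper).

    Each output neuron is a dot product (a chain of MACs) into a wide
    accumulator, plus a bias, followed by a ReLU and a right shift that
    rescales the result back into the int8 range, saturating at 127.
    """
    out = []
    for row, b in zip(weights, bias):
        acc = b
        for xi, wi in zip(x, row):
            acc += xi * wi                    # MAC into a wide accumulator
        acc = max(acc, 0)                     # ReLU: negative sums become zero
        out.append(min(acc >> shift, 127))    # requantize, saturate to int8 max
    return out


x = [10, -20, 30]
w = [[1, 2, 3], [-1, -1, -1], [100, 100, 100]]
print(dense_int8(x, w, [0, 0, 0], shift=0))   # [60, 0, 127]
```

With these example values, the second neuron’s negative sum is zeroed by the ReLU and the third neuron saturates at 127.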

Conclusions

Whatever your view of digital audio vs. analog, it’s fair to say that the DSP has transformed our audio world for the better. With new applications and products appearing all the time, the future will only bring more demand for DSPs — stretching their hardware and software capabilities to the limit to deliver great performance from tiny amounts of power, and in particular, employing AI to improve the user’s experience.

We’ve come a long way with DSP, and there’s no limit in sight to the technology’s capabilities. It’s going to be an interesting journey.

This article was originally published in audioXpress, November 2020.

About the Author

Youval Nachum serves as CEVA’s Senior Product Marketing Manager for the company’s audio and voice product line. Nachum brings more than 20 years of multi-disciplinary experience, spanning marketing, system architecture, ASIC, and software domains at leading technology companies. He is passionate about anticipating long-term trends and leading technical programs to their successful completion. He is highly proficient in combining market requirements, product definitions, industry standards, and design innovations into breakthrough products. Nachum holds a B.Sc. and M.Sc. in Electrical Engineering from the Technion – Israel Institute of Technology.