This is challenging due to the wide variety of environments where audio is captured — from an acoustically challenged bare-walled reverberant conference room to a bustling café to a windy outdoor scene. By arranging multiple microphones in specifically chosen microphone arrays and using digital processing algorithms, system designers have been able to increase directionality and the system level signal-to-noise ratio (SNR) of the audio capture over single microphone implementations.

This has created a superior user experience: the listener can focus on a conversation or audio playback without the distraction of added noise. Efforts for further improvements have continued toward a more immersive user listening experience even in the most challenging and complicated environments, such as capturing someone speaking on a busy train, a soft voice at the opposite end of a large conference room, or even adaptive 3D spatial sound in AR/VR applications. In some cases, this requires the microphone array to perform as well as or better than the users’ own ears would if they were there in person (Figure 1).

Many challenges stand in the way of this goal: a microphone array with a wide frequency range, high directivity, and low self-noise.

Optical MEMS Microphones

Until now the choice of microphone for arrays has been between the capacitive micro-electromechanical systems (MEMS) microphone and electret condenser microphone (ECM). For mainstream consumer products, capacitive MEMS microphones by far have been the most common choice due to their compact size, manufacturability, device consistency, and on-chip digital output. However, capacitive MEMS microphones have reached the limits of performance, specifically SNR, resulting in the self-noise of the MEMS microphone becoming the limiting factor of the array.

ECMs (in medium to large capsule form) have offered a higher SNR alternative; however, they suffer from poor device-to-device consistency and typically have an analog output requiring a discrete analog-to-digital converter (ADC). In certain applications, such as research or instrumentation grade arrays, system designers have implemented cumbersome workarounds to accommodate ECMs: hand soldering every microphone in the array, carrying out initial calibration and subsequent periodic calibrations to ensure matching, and using expensive ADCs to capture high-quality digital signals for processing.

For higher volume products these workarounds aren’t practical, so ECM-based arrays aren’t a scalable solution. The ideal microphone for a beamforming array should have all the required attributes—compact size, manufacturability, device consistency, digital output, and high SNR.

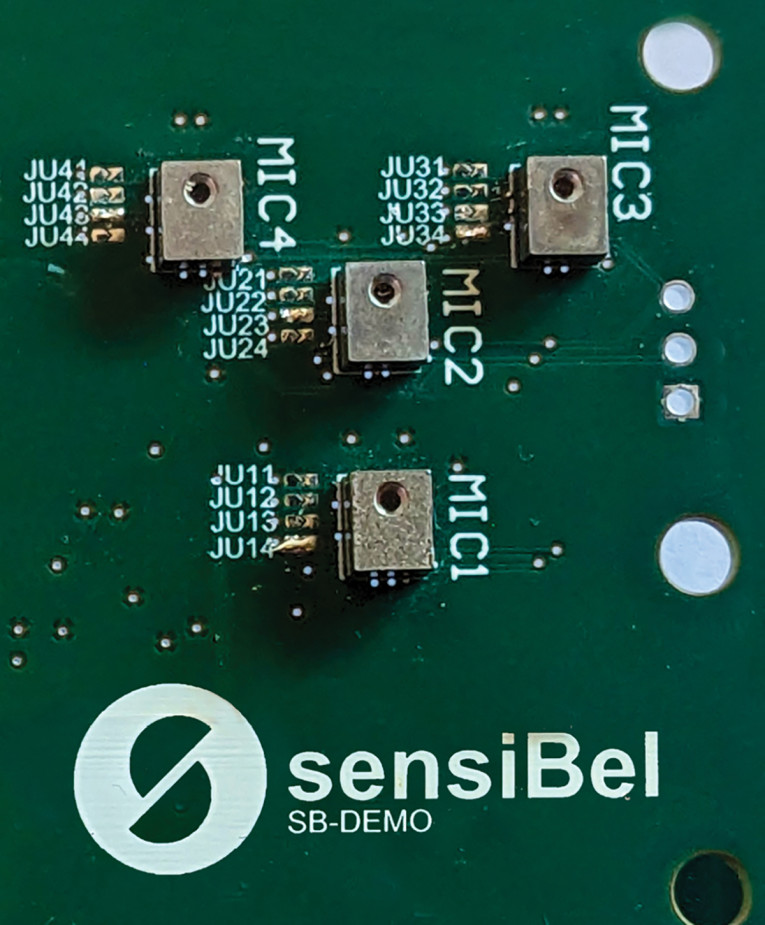

sensiBel, a Norwegian company developing a new generation of MEMS microphones using patented optical technology based on years of research at SINTEF, one of Europe’s largest independent research organizations, has created such a MEMS microphone. The company’s first optical MEMS microphone delivers 80dBA SNR and 146dB SPL AOP in a MEMS package with a digital output of PDM, I2S, or TDM. In addition to the very-high performance, it can scale to high count microphone systems due to its SMT reflow compatibility and consistent and stable device-to-device sensitivity and phase matching. sensiBel’s 24-bit digital output also facilitates the 132dB dynamic range of the microphone allowing detection of far-field low level signals and the loudest near-field signals.
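The relationship between the quoted SNR, AOP, and dynamic range figures can be checked with a few lines of arithmetic. The sketch below assumes the usual convention that microphone SNR is specified against a 94dB SPL (1 Pa) reference tone; the function names are illustrative only.

```python
import math

REF_SPL_DB = 94.0  # assumed convention: SNR is referenced to 1 Pa = 94 dB SPL

def self_noise_db_spl(snr_db):
    """Equivalent input noise (dB SPL) for an SNR quoted against 94 dB SPL."""
    return REF_SPL_DB - snr_db

def dynamic_range_db(aop_db_spl, snr_db):
    """Span between the acoustic overload point and the self-noise floor."""
    return aop_db_spl - self_noise_db_spl(snr_db)

noise_floor = self_noise_db_spl(80.0)        # 14 dB SPL (A-weighted)
dyn_range = dynamic_range_db(146.0, 80.0)    # 132 dB
word_range = 20.0 * math.log10(2 ** 24)      # span of a 24-bit word, about 144.5 dB

print(noise_floor, dyn_range, round(word_range, 1))
```

Under these assumptions the 132dB figure follows directly from the 146dB SPL AOP and a 14dB SPL noise floor, and a 24-bit word has enough span to carry it.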

The TDM8 format offers a very scalable array solution where up to eight microphones can share the same digital signal line, for easy integration of large microphone count arrays.

Achieving Directionality

MEMS microphones are typically omnidirectional, meaning that they pick up sounds equally from all directions. Omnidirectional MEMS microphones can easily support directionality at the system level by using two or more microphones. The spatial separation of the microphones creates different delays between the sound source and each microphone, which can be processed to create a directional signal toward the sound source. This forms the basis of a beamforming array.
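As a rough illustration of the delays involved, the following sketch computes the time difference of arrival between two microphones for a far-field (plane wave) source. The speed of sound, the 48kHz sample rate, and the function name are assumptions chosen for the example, not values from a specific product.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s, assumed (air at roughly 20 degrees C)
SAMPLE_RATE_HZ = 48000   # assumed processing rate for the example

def tdoa_seconds(spacing_m, angle_deg):
    """Time difference of arrival between two microphones.

    angle_deg is measured from broadside (perpendicular to the line
    joining the microphones); 90 degrees is along that line (endfire).
    """
    return spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND

broadside = tdoa_seconds(0.05, 0.0)    # no delay: both microphones hear the wave together
endfire = tdoa_seconds(0.05, 90.0)     # maximum delay for this spacing
print(broadside, endfire, endfire * SAMPLE_RATE_HZ)
```

For a 50mm spacing the largest delay is roughly 146 microseconds, or about seven samples at 48kHz, which is the quantity a beamformer compensates for or exploits.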

MEMS microphones can individually be made directional by the addition of a second acoustic port in the package. At first look, this may seem like a favorable alternative to using multiple omnidirectional MEMS microphones in a beamforming array, however directional MEMS microphones have remained somewhat of a niche in the industry due to a few continual advantages of the omnidirectional MEMS microphone array solution:

- The omnidirectional microphone array achieves directionality in the post-processing of the signal, rather than purely through the mechanical implementation of a directional MEMS microphone. This provides a more flexible solution, particularly for the industrial design. The microphone array beamforming solution can be adapted using variable gains and delays while a directional microphone sets the beamforming pattern based on the proportional length of front and back acoustic ports. Higher count microphone arrays can even track the source of the signal [1].

- Wind noise creates a large pressure gradient across the sensor of a directional MEMS microphone. The microphone array has the ability to detect wind noise and go back to omnidirectional output, which allows much more headroom to avoid overload. Even for non-wind noise situations the microphone array can temporarily return to being omnidirectional for context awareness or non-directional application use cases.

Very high SNR omnidirectional MEMS microphones enable the greatest configurability, flexibility and best performance in beamforming arrays (Figure 2).

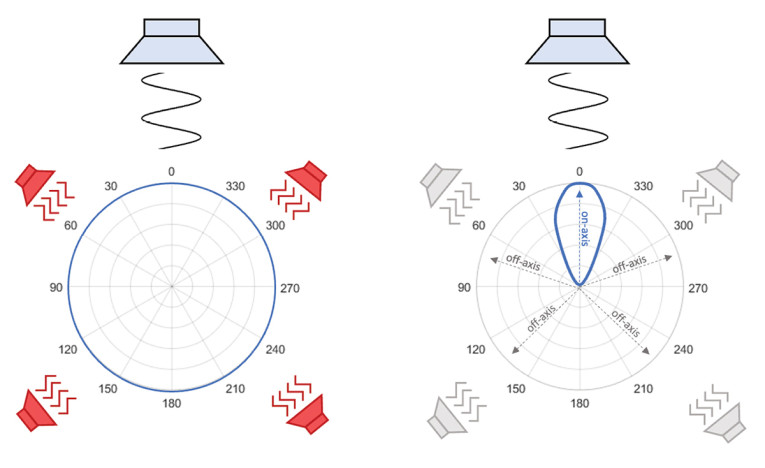

A polar plot is used to illustrate the directionality of a microphone or microphone system. Figure 3 shows the plot of an omnidirectional response (the microphone has the same sensitivity response from all directions) on the left, while a directional response is shown on the right. The omnidirectional response indiscriminately picks up sounds from any direction, in this case both the desired signal at the front and noise sources at the rear. The directional response picks up the desired sound at the front but rejects or at least significantly attenuates the noise sources at the rear.

The directional response example is a somewhat idealistic directional pattern where the front on-axis (0 degree) response is just wide enough to capture the desired signal and there is consistently high attenuation elsewhere. In simple beamformers, where a small number of microphones and a simple algorithm are deployed, the pattern is less ideal than this and off-axis attenuation won’t be as consistently high; instead, attenuation increases gradually off-axis up to a maximum at the angle where the response has a null. A uniformly high level of rejection and greater directionality can be accomplished using higher order beamforming systems, requiring higher levels of signal processing. The performance of such beamforming systems is significantly improved using very high SNR microphones.

Beamforming Algorithms

Directionality is achieved through signal processing of multiple microphones typically on a digital signal processor (DSP) or system-on-a-chip (SoC). This processing fundamentally pertains to the following beamforming algorithms:

- Delay-and-Sum

- Differential

- Minimum-Variance Distortionless Response (MVDR)

The first two algorithms, Delay-and-Sum and Differential, are examples of simple algorithms that have a limited set of configurable parameters. They also have simple computation methods with straightforward implementation [2].

Other more complex algorithms take into account the environmental conditions to create more optimal beamforming solutions for specific applications and are typically referred to as adaptive beamformers.

The third algorithm listed, MVDR, is an example of an adaptive beamformer that takes significant advantage of an 80dB SNR microphone. Other beamformers include the linearly constrained minimum variance (LCMV), generalized sidelobe canceler (GSC), and Frost beamformers [2]. We will focus on the three listed algorithms.

Delay-And-Sum — a Simple Algorithm with Limited Directivity

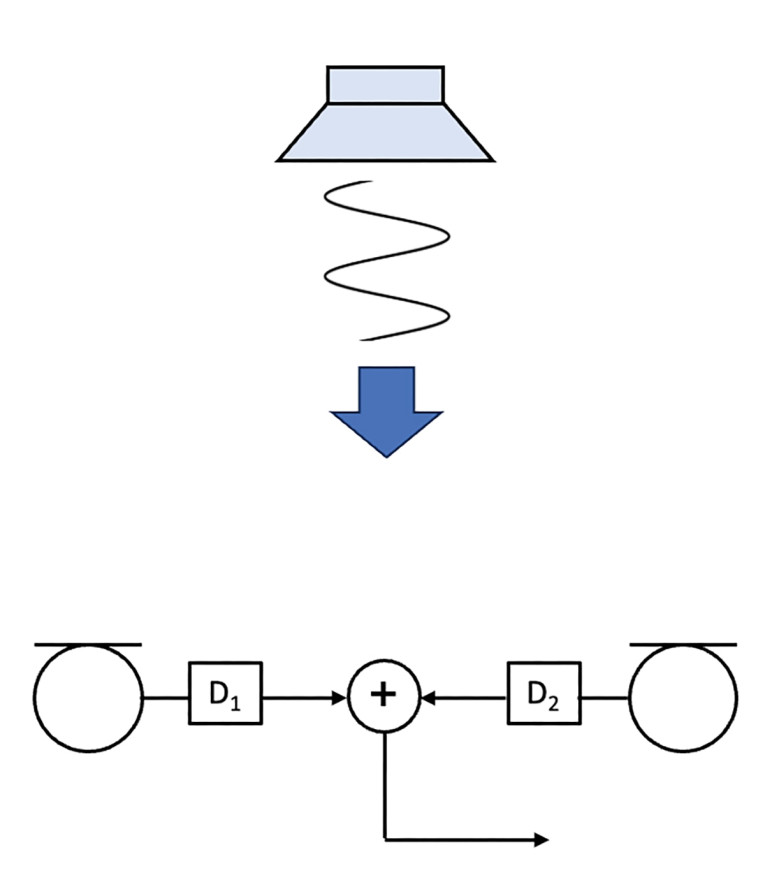

Delay-and-Sum beamforming — sometimes also called phased array processing — is where two or more microphone outputs are assigned individual delays, and then summed together to steer the beam toward a desired signal of interest (on-axis response). This is typically implemented in a broadside linear array where the desired signal is perpendicular to the array (0-degree position) as shown in Figure 4.

Signals at the front (0 degrees) and rear (180 degrees) of the array are phase aligned and gained, while signals to the side are attenuated since the signals are not in phase. The addition of a delay steers the on-axis response away from the line perpendicular to the microphones, and the delay can even be increased to the extent that it can rotate the on-axis response in line with the array to form a Delay-and-Sum endfire.

The advantage of Delay-and-Sum beamforming is that the on-axis frequency response is flat and does not require equalization. However, the off-axis attenuation is strongly frequency dependent and can only be optimized at specific frequencies (where the half wavelength corresponds to the microphone spacing).

Above that frequency, spatial aliasing occurs: lobes are created in addition to the on-axis response and the rejection can become unpredictable. Below that frequency there is little attenuation of off-axis signals [1].

This may be improved by adding more microphones to the array, but the increased size introduces aliasing at even lower frequencies. The directional response of Delay-and-Sum is also symmetric, so there will be no attenuation 180 degrees to the on-axis.
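The behavior described in this section can be reproduced with a small far-field model. This is a minimal sketch, assuming ideal matched omnidirectional microphones, plane waves, and a speed of sound of 343 m/s; `das_response` and its parameters are hypothetical names introduced for the example.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def das_response(freq_hz, angle_deg, positions_m, steer_deg=0.0):
    """Magnitude response of a Delay-and-Sum linear array (1.0 = on-axis).

    Angles are measured from broadside. positions_m are microphone
    coordinates along the array axis. steer_deg sets the look direction.
    """
    k = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND
    diff = math.sin(math.radians(angle_deg)) - math.sin(math.radians(steer_deg))
    total = sum(cmath.exp(1j * k * x * diff) for x in positions_m)
    return abs(total) / len(positions_m)

mics = [-0.025, 0.025]                       # 50 mm two-element broadside array
f_half = SPEED_OF_SOUND / (2 * 0.05)         # spacing equals half a wavelength

front = das_response(f_half, 0.0, mics)      # on-axis, unity
rear = das_response(f_half, 180.0, mics)     # also unity: the pattern is symmetric
side = das_response(f_half, 90.0, mics)      # null at the half-wavelength frequency
side_low = das_response(500.0, 90.0, mics)   # almost no rejection at 500 Hz
print(front, rear, side, side_low)
```

With these assumptions the side null appears only near 3.4kHz for a 50mm spacing, the rear response equals the front response, and at 500Hz the side attenuation is a fraction of a decibel.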

Differential — More Directive but EQ Required

Differential beamforming is where the difference in the signals of two or more microphones is used to form directionality. It is typically arranged in an endfire formation in the direction of the desired signal to create a spatial difference of each microphone relative to the source (see Figure 5). In a two-microphone differential endfire array the rear microphone signal is subtracted from the front microphone signal.

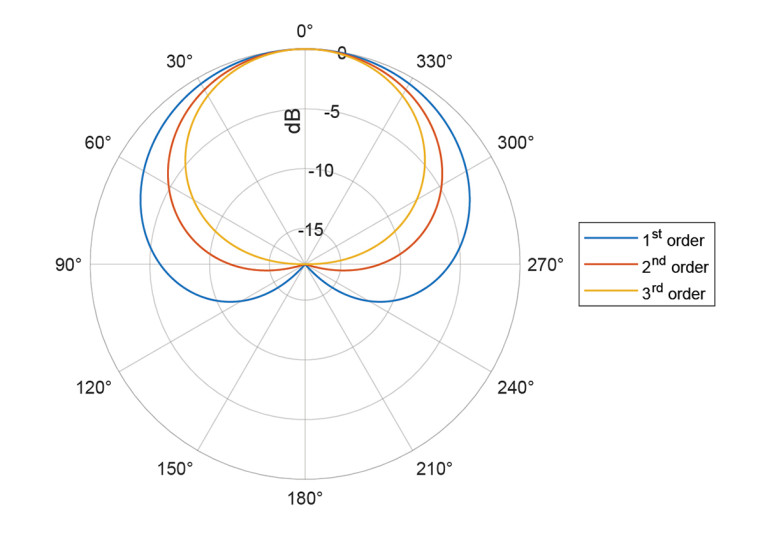

This has the effect of rejecting sounds from the sides and behind the array. A cardioid pattern (shown in Figure 6) can be formed by adding a delay to the “rear” microphone signal, which corresponds to the acoustic delay between the microphones for their given spacing. This is a classic pickup pattern used to achieve very high off-axis rejection: over 30dB directly at the rear 180-degree position and a moderate 6dB at the sides (90 and 270 degrees). The side rejection can be increased to 12dB and 18dB by using second- and third-order implementations, respectively.
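The cardioid rejection figures can be approximated with a simple plane-wave model of the two-microphone subtraction. The sketch below is illustrative only: it assumes ideal matched microphones, an internal delay equal to the acoustic travel time between them, and (as a simplification) models an n-th order array as n identical first-order stages in cascade.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def cardioid_gain(freq_hz, angle_deg, spacing_m, order=1):
    """Gain relative to on-axis for a delayed-difference endfire array.

    angle_deg is measured from the endfire look direction (0 = front).
    """
    omega = 2.0 * math.pi * freq_hz
    internal_delay = spacing_m / SPEED_OF_SOUND   # delay applied to the rear microphone

    def raw(theta_deg):
        acoustic = spacing_m * math.cos(math.radians(theta_deg)) / SPEED_OF_SOUND
        return abs(1 - cmath.exp(-1j * omega * (internal_delay + acoustic)))

    return (raw(angle_deg) / raw(0.0)) ** order

def rejection_db(gain):
    """Attenuation in dB; an exact null is reported as infinity."""
    return float("inf") if gain == 0 else -20.0 * math.log10(gain)

side_1 = rejection_db(cardioid_gain(1000.0, 90.0, 0.021, order=1))
side_2 = rejection_db(cardioid_gain(1000.0, 90.0, 0.021, order=2))
side_3 = rejection_db(cardioid_gain(1000.0, 90.0, 0.021, order=3))
rear_1 = rejection_db(cardioid_gain(1000.0, 180.0, 0.021, order=1))
print(round(side_1, 1), round(side_2, 1), round(side_3, 1), rear_1)
```

In this idealized model the rear null is perfect; in a real array, sensitivity and phase mismatch between microphones limit it to a finite value, which is why device-to-device matching matters.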

This high off-axis rejection is a huge advantage to differential beamforming. It does so without adding any spatial aliasing below the first null of the signal giving a predictable frequency response. The frequency response does have a first-order high-pass filter characteristic of 6dB/octave, which needs to be equalized. This introduces a trade-off between signal bandwidth and White Noise Gain, which is discussed in the differential beamforming section of this article.

By using an 80dB SNR microphone less noise is introduced in this trade-off allowing a wide bandwidth, flat frequency response along with high off-axis rejection and better directivity at lower frequencies.

Considering a small two-element conferencing microphone array with either architecture, the Delay-and-Sum broadside array is implemented with a 50mm microphone spacing, and the differential endfire array is implemented with a 21mm spacing.

From the polar patterns (Figure 6), we can see the differences in directionality where the differential architecture performs more favorably, particularly at low frequencies. The frequency response (Figure 7) shows the advantage of Delay-and-Sum, giving a naturally flat response. But the high-pass filter nature of differential beamforming can be equalized, particularly when using an 80dB SNR microphone.

A small two-element conferencing microphone array with Delay-and-Sum or differential can be easily implemented in many real-world applications. Delay-and-Sum can be implemented using the communication microphones on either side of a laptop webcam and form an effective broadside array pointing at the user predominantly directly in front of the camera, while providing attenuation to environmental sounds to the side.

A differential endfire array can be implemented inside a USB podcast microphone, where the user speaks to the front side and the high directivity can reject reverberations and other room noise to create a smooth professional audio capture. It can also easily adapt the beamforming to create a figure-8 pattern for “interview mode” when the delay is removed (Figure 8).

The Value of High SNR Microphones for Delay-And-Sum Beamforming

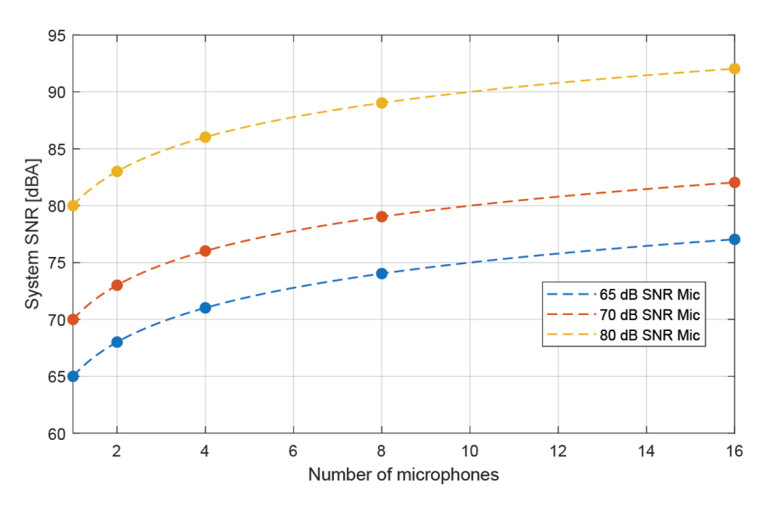

Delay-and-Sum processing is more favorable than differential beamforming with respect to the on-axis output signal since it produces a flat response and a net increase in system SNR. These benefits trade off with directivity, which is much lower than differential processing and off-axis rejection levels are more dependent on frequency and precise angle of arrival. The SNR improvement is attractive since it is easily realized—the raw output of a two-microphone Delay-and-Sum beamformer results in a 3dB increase of the system SNR due to the net effect of adding coherent signals, +6dB, minus the incoherent noise increase, +3dB. This +3dB improvement continues for every doubling in number of microphones used.
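The 3dB-per-doubling rule follows from amplitudes adding for the coherent signal and powers adding for the incoherent noise. A minimal sketch of that arithmetic, assuming identical microphones with uncorrelated self-noise (the function name is illustrative):

```python
import math

def das_snr_gain_db(num_mics):
    """SNR improvement of an N-microphone Delay-and-Sum beamformer on-axis."""
    signal_gain_db = 20.0 * math.log10(num_mics)  # coherent: amplitudes add
    noise_gain_db = 10.0 * math.log10(num_mics)   # incoherent: powers add
    return signal_gain_db - noise_gain_db

for n in (1, 2, 4, 8):
    print(n, round(das_snr_gain_db(n), 2))
```

Each doubling of the microphone count adds about 3dB, so two, four, and eight microphones give roughly 3dB, 6dB, and 9dB of system SNR improvement under these assumptions.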

This effect often means Delay-and-Sum is employed purely to increase the system SNR beyond that of a single microphone. An 80dB SNR MEMS microphone already has a greater than 10dB SNR advantage over other capacitive MEMS microphones, meaning that fewer microphones (a factor of 8x fewer) can be used to achieve the same system level SNR while reducing system complexity and processing. Alternatively, the same number of microphones may be retained, particularly for composite or nested arrays, which maintain on-axis beamwidth across frequency, in which case the absolute SNR of the system will be significantly higher using 80dB SNR MEMS microphones (Figure 9).

The Value of High SNR Microphones in Differential Beamforming

Differential beamforming by nature generates a high-pass filter response due to the diminishing differences in sound pressure between each microphone as we approach low frequencies as shown earlier in Figure 7.

This high-pass response needs to be equalized with a low-pass or low-shelf filter to restore a flat response. In doing so both the response and the self-noise are gained equally, but unlike the response the self-noise is not starting from an attenuated state, so the net effect of differential processing is an increase in self-noise, commonly referred to as white noise gain.

This is particularly evident at low frequencies where the gain is the highest. Additionally, even the frequencies without gain see an increase due to the subtraction of uncorrelated noise sources, which for two microphones amounts to +3dB. Avoiding added noise after EQ means either reducing the low-frequency attenuation or reducing the starting self-noise of the system (microphone self-noise), as shown in Figure 10.
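To get a feel for the size of this effect, the sketch below estimates the equalization gain and the resulting per-frequency noise penalty for a two-microphone cardioid. It assumes an ideal plane wave, an internal delay equal to the acoustic delay between the microphones, and identical uncorrelated self-noise; the names are hypothetical and the model is only valid below the first null.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def on_axis_gain(freq_hz, spacing_m):
    """On-axis gain of front minus delayed rear, relative to one microphone.

    |1 - exp(-j*2*omega*d/c)| simplifies to 2*|sin(omega*d/c)|.
    """
    return 2.0 * abs(math.sin(2.0 * math.pi * freq_hz * spacing_m / SPEED_OF_SOUND))

def eq_gain_db(freq_hz, spacing_m):
    """Gain needed to restore a flat on-axis response at this frequency."""
    return -20.0 * math.log10(on_axis_gain(freq_hz, spacing_m))

UNCORRELATED_SUM_DB = 10.0 * math.log10(2.0)  # two uncorrelated noise sources: about +3 dB

def noise_penalty_db(freq_hz, spacing_m):
    """Rise in self-noise relative to the signal after subtraction and EQ."""
    return eq_gain_db(freq_hz, spacing_m) + UNCORRELATED_SUM_DB

for f in (100.0, 1000.0):
    print(f, round(eq_gain_db(f, 0.021), 1), round(noise_penalty_db(f, 0.021), 1))
```

For a 21mm spacing this model needs roughly 22dB of boost at 100Hz, so the self-noise at that frequency ends up about 25dB higher relative to the signal than for a single microphone.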

Differential Beamforming Low-Frequency Attenuation vs. Directionality and Bandwidth

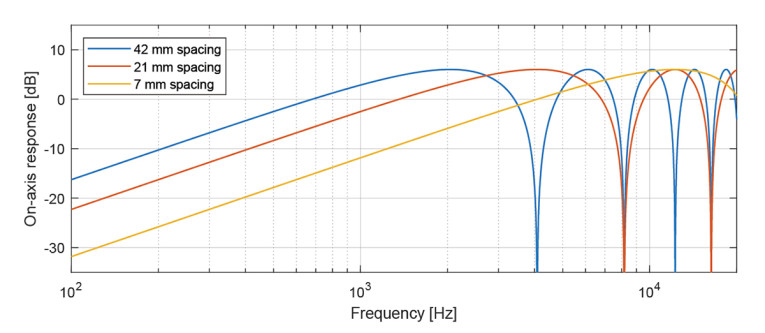

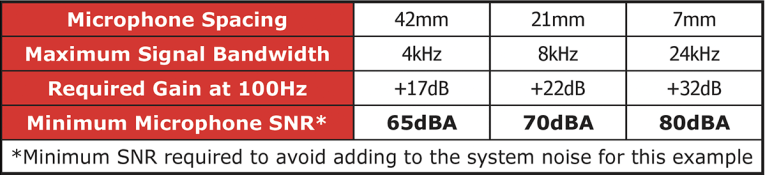

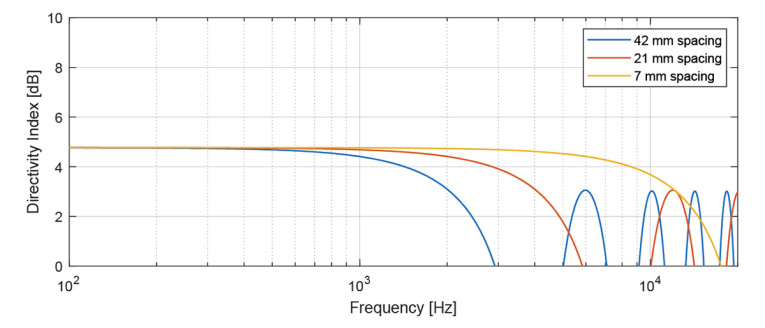

The amount of low-frequency attenuation is determined by two factors: microphone spacing and the order of beamforming. Increased microphone spacing will decrease low-frequency attenuation of the signal, since adjacent microphones will see a greater difference in sound pressure. However, this comes at a cost: the frequency where on-axis signals cancel each other out (null frequency) will be lower. This occurs where the distance between microphones equals half the wavelength of that frequency; for 21mm spacing this is approximately 8kHz. Since it is very difficult to equalize the series of nulls and maxima that occur above that frequency, the first null typically defines the usable bandwidth of the signal.

Maxima frequencies above the first null also suffer from spatial aliasing so the cardioid pattern is lost. Increasing the distance to 42mm for example increases the level of signal at 100Hz by 10dB but decreases the usable bandwidth to 4kHz, as shown in Figure 11.
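The first-null frequencies quoted for the spacings in this article follow from the half-wavelength condition. A quick check, assuming a speed of sound of 343 m/s (the helper name is illustrative):

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed

def first_null_hz(spacing_m):
    """Frequency at which the microphone spacing equals half a wavelength."""
    return SPEED_OF_SOUND / (2.0 * spacing_m)

for spacing_mm in (7, 21, 42):
    print(spacing_mm, round(first_null_hz(spacing_mm / 1000.0)))
```

This gives roughly 24.5kHz, 8.2kHz, and 4.1kHz for 7mm, 21mm, and 42mm spacings, respectively.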

A compact microphone array supporting 24kHz bandwidth can be implemented using an 80dB SNR microphone. Table 1 shows the required gain for each spacing for first-order differential beamformers. This gain correlates with an SNR requirement for each spacing. Only an 80dB SNR microphone can meet the requirements for all microphone spacings, in particular the requirement for 7mm spacing, which produces full audio bandwidth.

Increasing the order of beamforming is desirable to achieve higher directionality. By creating a second- or third-order array the cardioid pattern becomes tighter, increasing rejection at 90 degrees and 270 degrees from 6dB to 12dB and 18dB, respectively (Figure 12).

This also comes at a cost since the order of the filtering also increases, with the slope of the high-pass response rising from 6dB/octave (first order) to 12dB/octave (second order) and 18dB/octave (third order), as shown in Figure 13. This exacerbates the white noise gain described for the first-order system, and the sensitivity to microphone self-noise is much greater. Superior system level noise performance in a higher-order array is realized using an 80dB SNR MEMS microphone.
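The growth of the high-pass slope with beamforming order can be sketched numerically. As a simplifying assumption, an n-th order array is modeled here as n identical first-order cardioid stages in cascade with ideal matched microphones; the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def on_axis_level_db(freq_hz, spacing_m, order):
    """Uncompensated on-axis level of an n-th order differential array (dB)."""
    first_order = 2.0 * abs(math.sin(2.0 * math.pi * freq_hz * spacing_m / SPEED_OF_SOUND))
    return order * 20.0 * math.log10(first_order)

def slope_db_per_octave(freq_hz, spacing_m, order):
    """Level change between freq_hz and one octave above it."""
    return (on_axis_level_db(2.0 * freq_hz, spacing_m, order)
            - on_axis_level_db(freq_hz, spacing_m, order))

for order in (1, 2, 3):
    print(order, round(slope_db_per_octave(100.0, 0.021, order), 1))
```

Well below the first null the slope comes out at about 6dB/octave per order, so the low-frequency boost required, and with it the amplified self-noise, grows quickly with each added order.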

Directivity Index — Evaluating the Overall Array Performance

A polar plot is helpful to investigate the array response for a single frequency. However, to investigate how the array performs over the full frequency range of interest, it is better to reduce the directivity to a single number called the Directivity Index (DI). This number can be calculated for each frequency to understand how directional the array is at various frequencies.
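One common way to define the DI is the ratio, in decibels, of the on-axis power response to the power response averaged over all directions on a sphere. The sketch below evaluates that definition numerically for patterns that are rotationally symmetric about the look direction; the integration scheme and the two example patterns are illustrative choices, not taken from the figures.

```python
import math

def directivity_index_db(pattern, steps=20000):
    """DI of an axisymmetric pattern.

    pattern maps the angle from the look direction (radians) to linear
    amplitude gain. The spherical average uses the sin(theta) weighting,
    integrated with the midpoint rule.
    """
    dtheta = math.pi / steps
    acc = 0.0
    for i in range(steps):
        theta = (i + 0.5) * dtheta
        acc += pattern(theta) ** 2 * math.sin(theta) * dtheta
    mean_power = 0.5 * acc
    return 10.0 * math.log10(pattern(0.0) ** 2 / mean_power)

def omni(theta):
    return 1.0

def cardioid(theta):
    return 0.5 * (1.0 + math.cos(theta))

di_omni = directivity_index_db(omni)
di_cardioid = directivity_index_db(cardioid)
print(round(di_omni, 2), round(di_cardioid, 2))
```

An omnidirectional pattern gives 0dB and an ideal first-order cardioid gives about 4.8dB; repeating the calculation at each frequency produces a DI-versus-frequency curve.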

Figure 14 shows the DI for the two two-element microphone arrays under consideration. The Delay-and-Sum array has no directivity at low frequencies, as can also be seen in the 500Hz polar plot from Figure 6. The directivity then increases for high frequencies, where the wavelength of the sound is comparable to or smaller than the microphone spacing. A maximum directivity occurs at 4kHz where the spacing is slightly more than half a wavelength.

In Figure 15 the effect of reducing the microphone spacing in the differential array is shown. It mirrors the trend shown in Figure 11’s uncompensated frequency response, where the usable bandwidth increases when the microphone spacing is decreased. Above the first null the directionality goes negative several times inside the audio band for the 21mm and 42mm examples. However, with a 7mm spacing one can obtain high directivity all the way up to 10kHz, and the directivity stays positive up to 18kHz. Due to the required EQ, this 7mm spacing is only achievable with an 80dB SNR microphone.

MVDR — Example of an Adaptive and Data-Driven Beamforming Algorithm

While the Delay-and-Sum and differential beamformers are easy to implement with simple signal processing building blocks, they do not provide an optimum solution in many cases. The application might require different beamformers depending on the acoustic environment. This can be to steer the beam in different directions and suppress interferences from certain other directions. Adaptive, data-driven beamformers aim to solve these problems by optimizing the beamformer using the signals coming from the microphones.

MVDR is such an algorithm: individual amplification and delay are adapted for each element in the array to preserve the sensitivity in the direction of the desired signal while maximizing the attenuation of other interfering signals. In effect, the algorithm minimizes the total array output power, which includes acoustic interference, noise, and microphone self-noise, subject to leaving the desired direction undistorted.

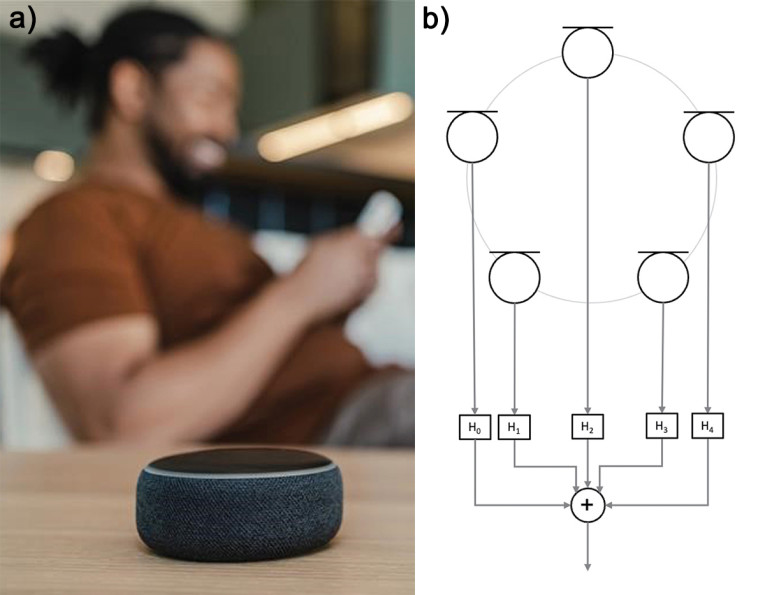

The individual amplification and delay are typically applied for each frequency, which results in a digital filter for each microphone signal, H in Figure 16. This can be implemented as a finite impulse response (FIR) filter or in frequency-domain processing.
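For a single frequency bin, the textbook MVDR weights are w = R⁻¹d / (dᴴR⁻¹d), where d is the steering vector of the desired direction and R is the covariance of everything else. The sketch below is a narrowband illustration only: it uses a two-microphone linear array rather than the circular array of Figure 16, assumes R is known (one interferer plus uncorrelated sensor noise) rather than estimated from data, and all function names and parameter values are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def steering(freq_hz, angle_deg, positions_m):
    """Far-field steering vector of a linear array; angle from broadside."""
    k = 2.0 * math.pi * freq_hz / SPEED_OF_SOUND
    s = math.sin(math.radians(angle_deg))
    return [cmath.exp(-1j * k * x * s) for x in positions_m]

def solve(a, b):
    """Solve a @ x = b (complex) by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0j] * n
    for r in reversed(range(n)):
        acc = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = acc / m[r][r]
    return x

def mvdr_weights(desired, interferer, interferer_power, sensor_noise_power):
    """MVDR weights for one interferer plus uncorrelated sensor self-noise."""
    n = len(desired)
    cov = [[interferer_power * interferer[i] * interferer[j].conjugate()
            + (sensor_noise_power if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    rinv_d = solve(cov, desired)
    denom = sum(d.conjugate() * v for d, v in zip(desired, rinv_d))
    return [v / denom for v in rinv_d]

def beam_gain(weights, direction):
    """Magnitude of the beamformer output for a unit plane wave."""
    return abs(sum(w.conjugate() * a for w, a in zip(weights, direction)))

mics = [-0.025, 0.025]
d = steering(1000.0, 0.0, mics)     # desired talker at broadside
a = steering(1000.0, 60.0, mics)    # interferer at 60 degrees
w_quiet = mvdr_weights(d, a, 1.0, 1e-4)   # lower sensor self-noise
w_noisy = mvdr_weights(d, a, 1.0, 1e-3)   # self-noise 10 dB higher
print(beam_gain(w_quiet, d), beam_gain(w_quiet, a), beam_gain(w_noisy, a))
```

The desired direction keeps unity gain in both cases, while the null on the interferer is about 20dB deeper in this toy case when the sensor noise term is 10dB lower, since the optimizer has less self-noise to trade against. A wideband implementation would repeat this per frequency bin or convert the weights to FIR filters.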

Figure 16’s example of a five-microphone circular array could be used in a smart speaker. The advantage of such a geometry is that the array will have equal performance in 360 degrees, compared to an endfire or broadside array that is targeted toward a particular direction.

The Value of High SNR Microphones for MVDR Beamforming

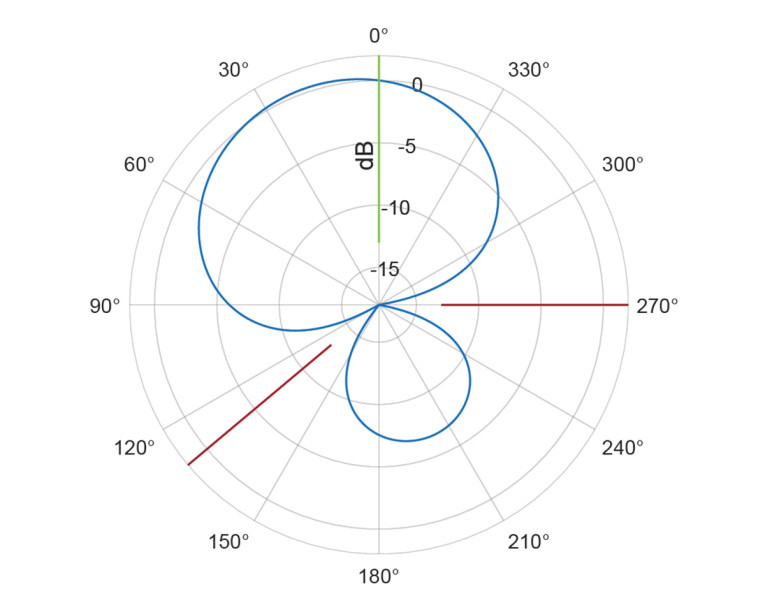

In MVDR beamforming the directions of both the desired signal and the interfering noise need to be known upfront or established during operation using signal processing and statistics. The advantage of this method is that high attenuation nulls can be steered in the direction(s) of interference(s), and given the correct constraints, the algorithm will create an optimum directional response.

The output of the processing has the same traits as Delay-and-Sum — a flat frequency response in the direction of interest and a net increase in SNR, but with a higher directivity. The direction of the desired signal is typically identified with a separate Direction-of-Arrival algorithm and then the spatial properties of the noise sources are identified. This information is then used to optimize the filters to create nulls in the direction of the noise sources, as well as suppress diffuse reverberation noise in the room (Figure 17).

Adaptive, data-driven beamformers are susceptible to errors in the Direction-of-Arrival estimation, as well as inadvertent subtraction of desired signal reflections. Thus, practical design of the algorithms must consider a certain amount of microphone self-noise.

An 80dB SNR microphone helps — the lower noise floor means the true environmental noise levels and point sources can be more accurately detected. In addition, the MVDR algorithm can work more aggressively to achieve a higher directivity when used with an 80dB SNR microphone. An example of this has been simulated in Figure 18 comparing an 80dB SNR MEMS microphone to a 70dB MEMS microphone.

In Figure 19, the DI is shown versus frequency for the two microphones with different SNR. In the frequency range of 200Hz to 2kHz (which is in the center of the frequency range of the human voice), there is up to 3.5dB better directivity due to the improved microphone SNR. This means that more reverberation and interfering signals will be removed, especially in the rear direction of the array.

The benefit of MVDR using an 80dB SNR microphone is increased directivity without introducing perceivable microphone self-noise. A high SNR MVDR beamformer can be applied to a wide variety of dynamic applications and get significant rejection to noise sources. It provides a higher directionality alternative to Delay-and-Sum and a more adaptive alternative to differential arrays.

Beyond Beamforming — Further System Processing

Beamforming is one part of a fully optimized audio capture system; it is often complemented by acoustic echo cancellation (AEC), noise suppression, and adaptive interference cancellation [3]. Beamforming is one of the most important parts: with a high performance beamformer, the subsequent processing blocks don’t have to work as hard. For example, a highly directive beamformer before the AEC block can reduce the amount of signal it has to cancel, allowing it to do a better job and avoid aggressive processing, which can result in half duplex communication or loss of double talk in a conferencing system.

For noise suppression, high-performance beamforming reduces the amount of interfering noise or system self-noise in the incoming signal, avoiding the use of overly aggressive processing like noise gating, which can make voice communications sound very unnatural [4].

Increasingly, Artificial Intelligence (AI) is being used in audio processing. In particular, Machine Learning (ML)-optimized algorithms for beamforming and noise suppression can increase their performance. An 80dB SNR microphone enables better quality of data for the ML optimization since there will be more margin between the self-noise and the environmental noise.

Conclusion

With an 80dB SNR MEMS microphone, system performance in three common beamforming algorithms can be significantly improved with higher directivity, higher bandwidth, and higher SNR. Delay-and-Sum beamformers achieve higher system SNR with fewer microphones, avoiding the processing complications and artifacts that come with a higher microphone count.

Differential beamforming can implement tighter spacing between microphones, which increases the usable bandwidth of the signal and with less white noise gain, meaning higher system SNR. MVDR and other algorithms can better optimize the beamforming parameters and generate more directionality for a higher frequency range (Table 2).

System processing features such as AEC, noise suppression, and adaptive interference cancelling benefit from a beamformed signal since it provides a better margin between the desired signal and the interference. This allows a better audio signal without excessively aggressive processing, which can lead to an unnatural sounding capture.

Combining superior microphone array beamforming with these advanced system processing features allows clearer voice calls, superior audio recordings, more productive conference calls and more immersive 3D AR/VR audio experiences. sensiBel’s 80dB SNR SBM100 series brings this level of performance to MEMS microphones and to beamforming arrays for the first time. For more information, visit sensibel.com.

References

[1] M. Suvanto, The MEMS Microphone Book, Kangasala, Finland: Mosomic Oy, 2021.

[2] “Beamforming Overview,” MathWorks, MATLAB. (n.d.), www.mathworks.com/help/phased/ug/beamforming-concepts.html

[3] “Qualcomm QCS400 Smart Speaker/Sound Bar,” DSP Concepts, https://w.dspconcepts.com/reference-designs/qualcomm-qcs400-smart-speaker-soundbar

[4] “Acoustic Echo Cancellation,” Texas Instruments Video Library. Texas Instruments Precision Labs Audio Series, 2022, www.ti.com/video/6308400085112

About the Authors

Michael Tuttle is the Applications Engineering lead at sensiBel. He has 20 years industry experience with 15 of them specializing in MEMS microphones with roles in quality, production development, and applications. He has worked for many of the industry technology leaders in Analog Devices, Invensense, Vesper, TDK, and now sensiBel. He has a master’s degree in Microelectronic Engineering from University College Cork, Ireland, and has co-authored patents on MEMS microphone technology.

Jakob Vennerød is the co-founder and Head of Product Development at the Norwegian MEMS startup, sensiBel. Previously he worked on several research projects in audio and acoustics at SINTEF. He has extensive experience in acoustics, electronics, and signal processing, particularly in audio technology. He has a master’s degree in Electronic Engineering and Acoustics at the Norwegian University of Science and Technology, and has coauthored several patents related to microphone technology.

This article was originally published in audioXpress, April 2024.