There were multiple examples of those surprises at this event, most of them not directly relevant to a publication like audioXpress. But Apple did unveil the new A14 Bionic processor, which offers a massive boost in performance and is the industry's first 5-nanometer chip, manufactured by Taiwan Semiconductor Manufacturing Company (TSMC). The A14 features 11.8 billion transistors, a new six-core CPU design, a four-core graphics architecture, and a new 16-core Neural Engine that is twice as fast and capable of performing up to 11 trillion operations per second, taking machine learning apps to a whole new level. I'm only scratching the surface here, but I know that this announcement also left a lot of company engineers and executives scratching their heads.

But the real curveball for me was once again the Apple Watch, which continues to be Apple's Trojan horse for a lot of new technology. The new Apple Watch Series 6 is not only a stunning piece of technology; it also adds breakthrough capabilities, such as a blood oxygen sensor, that shocked a lot of companies. Like the existing ECG feature, it just works and fits into that much sought-after category of "essentials." Clearly, the Apple Watch just keeps adding those.

This is a perfectly legitimate business in itself, and some of those technology and component companies are also Apple suppliers. But the "follow the leader" spirit, rather than innovation, that prevails particularly in consumer electronics is sometimes disheartening.

The Wearables Vision

The Apple Watch is the absolute market leader in the wearables category. It is by far the most powerful wearable computer platform there is, and it gets better every year. It is the largest undisclosed revenue generator for Apple, contributes strongly to the consolidation of the company's ecosystem, and is one of the most promising platforms for future growth in multiple application segments. And yet, surprisingly, even the technology companies that want to copy Apple seem unaware of its potential and what it can do.

I recently received a new market report, precisely on the worldwide wearables market forecast, from a company I rarely quote (because they have proven unreliable so many times). International Data Corporation (IDC) expects global shipments of wearable devices to grow 15% this year (we need to ignore the unit figures, because Apple doesn't disclose those and IDC has no idea).

I mention the IDC report because it defines the wearables category as "hearables, watches, and wristbands," which only shows how poorly the Apple Watch is perceived. IDC also forecasts a compound annual growth rate (CAGR) of 12.4% over the next four years, through 2024. I know Apple would be extremely disappointed with that forecast.
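To put a 12.4% CAGR in perspective, the arithmetic is simply total growth = (1 + CAGR)^years. The sketch below assumes four annual compounding steps from a 2020 base to 2024 (the exact base year is an assumption) and uses only IDC's headline rate, not any unit figures.

```python
def growth_multiplier(cagr: float, years: int) -> float:
    """Total growth factor after `years` of compounding at a constant annual rate."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse: the constant annual rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# Assumption: four annual compounding steps, from a 2020 base to 2024.
m = growth_multiplier(0.124, 4)
print(f"{m:.3f}")  # 1.596
```

In other words, 12.4% a year compounds to roughly 60% cumulative growth over the period; `implied_cagr` does the reverse check on any other forecast.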

Every year, depending on what Apple reveals in its regular OS updates, the Apple Watch is seen as a health and fitness product, a safety and communication terminal, a personal entertainment device, or a useless fashion-tech gadget; it all depends. All the powerful technology embedded in the device remains misunderstood. The Apple Watch offers more natural language and AI processing power than any smart speaker available today. The array of sensors and technology interfaces combined in the product shows more promise for disruptive new applications than everything demonstrated to date by all the "virtual reality" companies combined.

It is only called a Watch because Apple learned from past mistakes that calling it a "wrist computer" would not work. Likewise, the iPhone is not the "pocket computer" or "handheld assistant" that the Newton once tried to be, ahead of its time. People understand watches and they buy watches (many costing several times the price of an Apple Watch).

For me, the best feature of the Apple Watch is that I can use it as a contactless remote control and wireless access key. I use it to control the music I'm listening to, and it magically wakes up and unlocks my Mac when I want to work. Using Ultra-wideband (UWB) and Bluetooth to access the subway, open and start my car, or activate a parking token, apart from making payments, is a vision of enormous value now being realized. But none of this could be sold to consumers on its own, since these are all add-on features to a device that sells because it tells time.

Specifically in the audio industry, we are discussing how to decouple the features offered by true wireless earbuds from the smartphones in our pockets. Ironically, hearing aid brands only recently started promoting the possibility of connecting a smartphone to their medical devices, highlighting the added communication and entertainment benefits. Meanwhile, many companies are working intensely to make edge computing and on-device processing powerful enough to support a personal voice assistant and make augmented hearing a reality, enabling the first true hearables.

We still rely mostly on the smartphone for those vital connections to the cloud, voice engines, extra signal processing, and communications. But at its September 15 event, Apple also unveiled a decoupling of the Apple Watch from the iPhone. The new Family Setup feature in watchOS 7 makes the communication, health, fitness, and safety features of the Apple Watch available to kids and older family members who don't have an iPhone. For now, an Apple Watch can be set up through a parent's iPhone, so kids can connect with family and friends. But they can also use their Watches to listen to music, streaming to their earbuds, of course...

So, it's not about what wearables can replace, but what they can add to our lives that we don't even dream about. Take translation as an example. Hearables by themselves will struggle to support the sophisticated operations required for language translation, but a device on our wrists will be an ideal extension, with the added value of a display for voice-to-text. And it will just get better with every update.

During Apple's annual Worldwide Developers Conference back in June 2020, among all the spectacular updates to the company's operating systems (iOS 14, iPadOS 14, watchOS 7, and tvOS 14), Apple announced a new firmware update that would add spatial audio support to its AirPods Pro.

Apple also confirmed then that, with the updates, AirPods and some Beats TWS products would gain automatic Bluetooth device switching and many other new features. Thanks to updates in iOS 14 and iPadOS 14, those products now offer Headphone Accommodations (part of the Accessibility features), which amplifies soft sounds and tunes audio to help music, movies, phone calls, and podcasts sound crisper and clearer. On the AirPods Pro, this accessibility feature also works when Transparency mode is active, helping users better recognize important sounds and dialog. As many noticed, this meant that an extremely useful hearing enhancement feature was added for free, with a simple software update.

These products all use Apple's own H1 system-in-package (SiP), which allows software updates and the introduction of valuable new features. This sends another powerful message to an industry that is used to seeing products become obsolete after as little as six months (and what's that about having an OS in your earbuds?...)

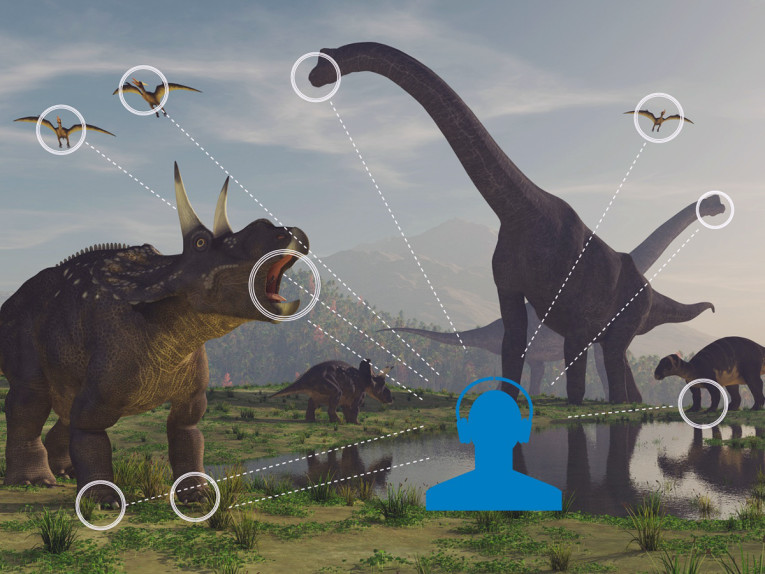

But the most sensational announcement was the revelation that AirPods Pro would gain spatial audio support with dynamic head tracking for an immersive theatrical experience when watching movies, TV, and any content in 5.1 or 7.1 surround, or in object-based formats such as Dolby Atmos. As the industry knows too well, delivering a convincing binaural rendering of multichannel or complex object-based audio sources is not easy. Until now it required external hardware (normally a dongle) or a dedicated full-featured preamp with DSP, plus extra hardware with sensors for head tracking, plus a powerful computer to process all the complex calculations. And it was normally done with large over-ear headphones, not true wireless earbuds.
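One common textbook way to binauralize channel-based content is the "virtual loudspeaker" approach: each channel is filtered through the pair of head-related impulse responses (HRIRs) measured for its loudspeaker position, and the results are summed into left and right ear signals. The minimal sketch below shows only that structure; it is not Apple's renderer, and the two-tap HRIRs are hypothetical toys (real HRIRs run to hundreds of taps and are usually applied with FFT convolution).

```python
from typing import Dict, List, Tuple

Signal = List[float]


def convolve(x: Signal, h: Signal) -> Signal:
    """Direct linear convolution, O(len(x) * len(h)); fine for short illustrative signals."""
    if not x or not h:
        return []
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def accumulate(out: Signal, y: Signal) -> None:
    """Add y into out in place, growing out with zeros if y is longer."""
    if len(y) > len(out):
        out.extend([0.0] * (len(y) - len(out)))
    for k, v in enumerate(y):
        out[k] += v


def render_virtual_speakers(
    channels: Dict[str, Signal],
    hrirs: Dict[str, Tuple[Signal, Signal]],
) -> Tuple[Signal, Signal]:
    """Filter each channel through the (left, right) HRIR pair of its virtual
    speaker position and sum the results into a two-channel binaural signal."""
    left: Signal = []
    right: Signal = []
    for name, samples in channels.items():
        h_left, h_right = hrirs[name]
        accumulate(left, convolve(samples, h_left))
        accumulate(right, convolve(samples, h_right))
    return left, right


# Toy, hypothetical HRIRs: the centre speaker reaches both ears equally; the
# left speaker is louder at the left ear and arrives one sample later, and
# quieter, at the right ear.
toy_hrirs = {
    "C": ([0.7], [0.7]),
    "L": ([1.0, 0.0], [0.0, 0.5]),
}
left, right = render_virtual_speakers({"C": [1.0], "L": [1.0]}, toy_hrirs)
print(left, right)  # [1.7, 0.0] [0.7, 0.5]
```

Head tracking then amounts to choosing which HRIR pair each channel uses as the head turns, which is where the sensor data comes in.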

Well, a few days ago, Apple released the promised firmware update, and suddenly spatial audio support in the AirPods Pro was a reality. And what an update! As everyone soon realized, the results are simply spectacular and very noticeable when watching movies with good soundtracks. Like in a movie theater, we can turn our heads away from the screen and the sound sources stay in place, while the sense of immersion is simply stunning. I still have to try this with spatial audio music mixes, but in general I remain very skeptical, due to past experiences with that type of content.

Designed specifically for headphones, the Apple H1 chip was first used in the 2019 version of AirPods. It has Bluetooth 5.0, supports hands-free "Hey Siri" commands, and offers 30 percent lower latency than the W1 chip in the earlier AirPods. But the AirPods Pro can also steer the binaural rendering of those complex signals according to the position of the user's head. For that, Apple uses the accelerometers and gyroscopes in the AirPods Pro to track head motion. In this way, each sound cue remains anchored to the device, and the center channel stays in front, even when users turn their heads. And even if the user moves the device while watching a movie, as with an iPad, the system tracks the position of the user's head relative to the screen. It understands how the head and the device are moving in relation to each other and keeps the aural cues anchored in their positions.
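The anchoring described above can be reduced, in a yaw-only simplification, to one subtraction: render each source at its screen-relative direction minus the head's rotation relative to the device. The sketch below is an illustration under stated assumptions, not Apple's implementation: it ignores pitch and roll (real systems use full 3D rotations), assumes both yaw angles come from a shared reference frame with positive meaning "turning right", and uses nominal 5.1 angles loosely following ITU-R BS.775.

```python
# Nominal 5.1 bed azimuths in degrees relative to the screen: 0 = centre,
# positive = to the right. Loosely follows ITU-R BS.775 (surrounds may sit
# anywhere from 100 to 120 degrees); LFE is not spatialised and is omitted.
SPEAKER_AZIMUTHS = {"L": -30.0, "C": 0.0, "R": 30.0, "Ls": -110.0, "Rs": 110.0}


def wrap_degrees(angle: float) -> float:
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def head_relative_azimuth(source_az: float, head_yaw: float, device_yaw: float) -> float:
    """Where to render a screen-anchored source, relative to the listener's nose.

    source_az  -- source direction relative to the screen's front
    head_yaw   -- head yaw in a shared reference frame (positive = turning right)
    device_yaw -- device yaw in the same frame
    """
    head_relative_to_device = wrap_degrees(head_yaw - device_yaw)
    return wrap_degrees(source_az - head_relative_to_device)


# The listener turns their head 90 degrees to the right while the iPad stays put:
for name, az in SPEAKER_AZIMUTHS.items():
    print(name, head_relative_azimuth(az, head_yaw=90.0, device_yaw=0.0))
```

Here the centre channel moves to -90 degrees, the listener's left, which is exactly where the screen now is; if head and device rotate together (head_yaw equal to device_yaw), every source stays where it was, matching the iPad case described above.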

As I wrote back in June, we can only hope that Apple also confirms support for MPEG-H Audio and, eventually, for loading HRTF profiles for complete personalization. But I suspect Apple intends to explore this for many other augmented audio possibilities, as is becoming obvious to the many developers who have noticed the platform's potential.
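Why personalization matters can be illustrated with the simplest binaural cue, the interaural time difference (ITD), which depends on head size. The sketch uses Woodworth's classic rigid spherical-head approximation, ITD = (a/c)(θ + sin θ); it is a textbook model chosen for illustration, not how Apple or any HRTF profile format works, and real HRTFs also carry spectral cues from the pinna that this ignores. The head radii are hypothetical examples.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate, dry air at about 20 degrees C


def woodworth_itd(azimuth_deg: float, head_radius_m: float = 0.0875) -> float:
    """Interaural time difference in seconds for a distant source in the
    horizontal plane, using Woodworth's rigid spherical-head approximation:

        ITD = (a / c) * (theta + sin(theta)),  for |theta| <= 90 degrees

    Rear azimuths are folded onto the equivalent lateral angle, since a
    sphere produces the same ITD for front/back mirror positions. The sign
    only indicates which ear leads.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))
    return (head_radius_m / SPEED_OF_SOUND_M_S) * (lateral + math.sin(lateral))


# Same source at 90 degrees (directly to one side), three hypothetical head sizes:
for radius in (0.075, 0.0875, 0.095):
    print(f"a = {radius} m -> ITD = {woodworth_itd(90.0, radius) * 1e6:.0f} us")
```

The three radii give roughly 560, 660 and 710 microseconds, so a 2 cm difference in head radius shifts the largest ITD by about 150 microseconds, one of the reasons a generic rendering does not suit every listener equally well.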

I recommend our readers watch the demonstration videos from Anastasia Devana, a former Audio Director at Magic Leap and a spatial audio specialist, who quickly created HeadphoneMotion, a plug-in for Unity 3D that exposes Apple's Headphone Motion API (CMHeadphoneMotionManager) in Unity. As she describes it: "You can use this to get head tracking data from Apple headphones like AirPods Pro into your Unity scenes." Anastasia built the plug-in using the 3DoF data; it is available on GitHub, and you can check her demonstration video here.
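The 3DoF data in question is head orientation. CoreMotion's CMAttitude already exposes roll, pitch, and yaw as well as a quaternion, but when orientation is passed between engines it is often handed over as a raw quaternion. As a language-neutral sketch, here is the standard quaternion to yaw/pitch/roll (ZYX Tait-Bryan) conversion. The axis convention is an assumption: CoreMotion and Unity (left-handed, y-up) use different frames, so a real bridge such as the plug-in above would need its own axis remapping, which this sketch does not attempt.

```python
import math
from typing import NamedTuple


class Euler(NamedTuple):
    roll: float   # rotation about the x axis, radians
    pitch: float  # rotation about the y axis, radians
    yaw: float    # rotation about the z axis, radians


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> Euler:
    """Convert a rotation quaternion to ZYX (yaw-pitch-roll) Tait-Bryan angles.

    Assumes a right-handed frame and the common aerospace convention; other
    engines need their own axis remapping.
    """
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("zero-length quaternion")
    # Renormalise: sensor-fusion output can drift slightly off unit length.
    w, x, y, z = w / norm, x / norm, y / norm, z / norm

    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp to avoid math domain errors from rounding near +/-90 degrees pitch.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return Euler(roll, pitch, yaw)


# A head turned 90 degrees about the vertical (z) axis:
half = math.radians(90.0) / 2.0
angles = quaternion_to_euler(math.cos(half), 0.0, 0.0, math.sin(half))
print(round(math.degrees(angles.yaw), 6))  # 90.0
```

The yaw value is what a head-tracked renderer like the one sketched for the AirPods Pro would subtract from each source direction.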

Taking the concept further, developer Zack Qattan combined the iPhone 11's Nearby Interaction framework, which uses UWB, with the AirPods Pro's head tracking feature to create a solution in which users can call each other and hear each other's voices spatialized according to their relative locations and the direction they are facing. See the demonstration here. Also watch this impressive demo in which the AirPods Pro are upgraded from 3DoF to 6DoF using data from a navigation system.
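The geometry behind that kind of voice spatialization can be sketched in a few lines: find the talker's compass bearing from the listener, subtract the direction the listener's head is facing, and attenuate with distance. This is a hypothetical illustration, not the developer's implementation. In particular, Nearby Interaction reports distance and direction relative to the phone rather than positions; the sketch assumes both positions and the head heading have already been resolved into one shared frame, which is a simplification.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (east, north) in metres, in a hypothetical shared frame


def talker_direction(listener: Point, heading_deg: float, talker: Point) -> Tuple[float, float]:
    """Return (azimuth_deg, distance_m) of a talker as heard by the listener.

    heading_deg -- direction the listener's head faces; 0 = north, 90 = east
    azimuth     -- 0 = straight ahead, positive = to the listener's right,
                   wrapped to [-180, 180)
    """
    d_east = talker[0] - listener[0]
    d_north = talker[1] - listener[1]
    distance = math.hypot(d_east, d_north)
    bearing = math.degrees(math.atan2(d_east, d_north))  # compass bearing of the talker
    azimuth = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return azimuth, distance


def distance_gain(distance_m: float, reference_m: float = 1.0) -> float:
    """Inverse-distance (1/r) amplitude law, clamped to unity inside reference_m."""
    return reference_m / max(distance_m, reference_m)


# Talker 3 m due east; the listener first faces north, then turns to face east.
for heading in (0.0, 90.0):
    az, dist = talker_direction((0.0, 0.0), heading, (3.0, 0.0))
    print(heading, az, dist, distance_gain(dist))
```

Facing north, the talker is rendered 90 degrees to the right; after turning east, the voice moves to straight ahead. The 1/r law (about 6 dB quieter per doubling of distance) is the simplest choice; a production system would likely add reverberation and smoothing.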

As some are finding out, this dynamic processing makes it look as though nothing is happening when you measure it, even though the perceived result is stronger than anything else. As with speakers that continuously adapt to acoustic variables, measuring and benchmarking these sophisticated systems will require totally different approaches. Don't even mention reverse-engineering it :)

For all the manufacturers that must rely on existing technology to offer something close to this, the good news is that companies are quick to respond.

As highlighted here, Swedish digital audio processing pioneer Dirac announced that its latest spatial audio and speaker optimization solution is now available for direct integration into the digital signal processors (DSPs) of Bluetooth wireless headphones. Headphone manufacturers can easily integrate the Dirac solution into chipsets from leading Bluetooth DSP vendors such as MediaTek, BES Technic, and Qualcomm.

The result is a high-precision, real-time 3D audio solution for System-on-Chip (SoC) vendors, OEMs, and ODMs looking to provide the ultimate listening experience and a new generation of motion-aware earbuds in which 3D audio enhances the overall user experience. You can read all about it here.

This article was originally published in The Audio Voice newsletter, October 1, 2020 (#297). Click here to register for The Audio Voice.