Allegedly, our microwave can ‘smartly’ reheat a plate of leftovers to a perfect, consistent temperature all the way through. Yet we usually just press ‘2’ and hope for the best! (More often than not, we either get food that’s still cold or we haashahfahshah through the first steaming bites.)

We’re living in an age where news, music, trivia, TV series, books, and even meals are readily available where and when we want them. So regardless of what your product does, it has to do it ‘on demand’; anything less than a streamlined, frictionless interface will limit market acceptance.

This societal shift in expectations, along with a confluence of tech innovations, has led to the broad adoption of the most intuitive of user interfaces: the human voice. The trouble, however, is that successfully planning and delivering products with a high-quality voice experience is notoriously difficult. Here’s what you should know.

Why Voice Interfaces Are So Popular

Voice-enabled devices are quickly becoming commonplace in nearly every region and for every consumer demographic. Whether making cloud-connected or offline products, OEMs need to understand what’s driving this demand for voice. If you ask the average consumer what they like about voice, you might get a simple “it’s just easier”; but a deeper look reveals a few fundamentals behind voice’s growing popularity:

The Bandwidth Problem. “With your phone, you can answer any question, video conference with anyone anywhere … The only constraint is input/output,” said Elon Musk, co-founder of Neuralink, a startup exploring the possibility of a direct Machine/Brain Interface (MBI). “On a phone, you have two thumbs tapping away - we have a bandwidth problem.”

While it may not be as instantaneous as a hardwired “MBI”, products with a voice-based UI are simply a generation ahead of products with traditional touch interfaces.

The Translation Problem. In many ways, machines have an ‘interface language’ all their own. We learn their peculiarities and over time are able to fluently do what we want in that language, to the point where, in many cases, this ‘language’ even becomes standardized. For example, if you were handed an unfamiliar TV remote, how long would it take you to figure out how to “turn it up”? (I’ll put the over-under at 2 seconds.)

The flip side, though, is that if you’re selling a product that’s unique, highly complex, or broadly capable, you’re asking users to learn a new language. To use these devices, they have to figure out how to translate their intent into a series of device interactions.

Here again, largely thanks to innovations in AI- and ML-powered speech-to-intent engines, voice-based interfaces allow us to completely sidestep this translation problem. “Hey microwave, reheat this medium bowl of spaghetti.”

Social Shifts. Wondering what time the game’s on? Just ask! Ready to resume that series on Netflix? Just say the word! Notice something while making dinner? “Add garlic powder to the shopping list”. The convenience of voice is permeating our modern lives.

Many technologies are brought to market but never find purchase in society. Some, like Google Glass (2014) or GM’s EV1 electric vehicle (1996), were perhaps ahead of their time; others, like the Segway, were more a solution in search of a problem. Unlike these, it’s safe to say that voice is here to stay.

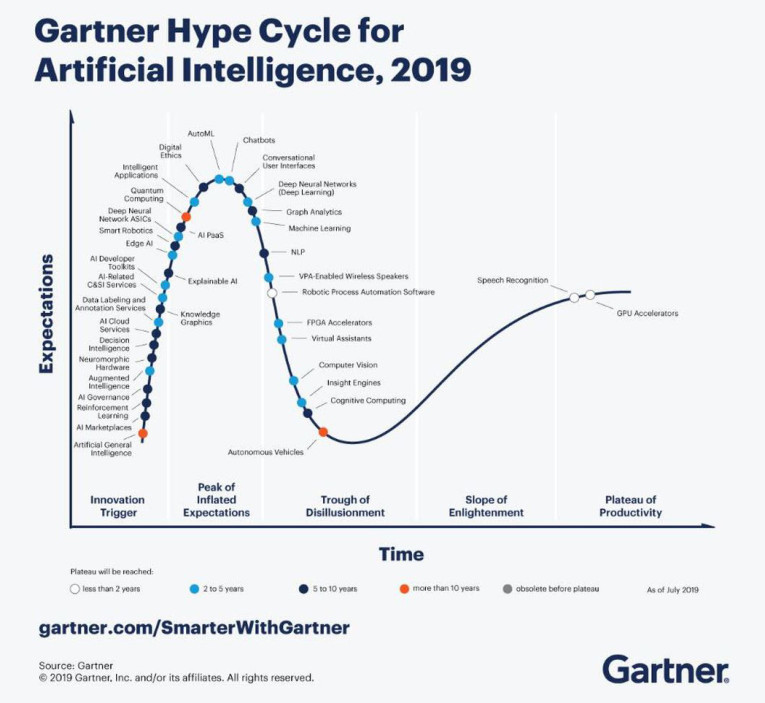

Speech recognition is now reaching the ‘plateau of productivity’ on the ‘hype cycle’, where voice-based services are fully integrating into modern life. Beyond the obvious functional benefits, the act of talking to a device has also become an expected, socially normal thing to do.

Presumably, if you’re reading this article, you know that next-gen products will need voice control. It’s probably also obvious that it’s a bad idea to release a product with a BAD voice interface. (Nobody wants “this stupid thing” associated with their brand!) Unfortunately, despite the proliferating number of voice-enabled products, delivering a quality voice experience may be harder than you’d expect.

Challenges of Building Voice-Enabled Products

Unlike some new product features, adding a voice interface requires product architects to make a number of highly impactful, interconnected design decisions. Development teams don’t have it easy either, as integrating microphones into a product introduces another layer of complexity.

Challenge #1: Yet another area of domain expertise is needed: Acoustics

With most consumer electronics, there’s a fairly standard set of skills that are brought to bear to develop a product:

- Electrical (component selection, circuit board design, power/battery systems, etc)

- Mechanical (internal chassis, materials, general architecture, etc)

- Industrial Design (external materials, look & feel, product packaging, etc)

- Software (drivers, connectivity, application code, software update, etc)

If you want to add a useful voice interface to that product, you still have to get all that right, but now every one of those areas also needs to accommodate another set of requirements: acoustics. To make matters worse, microphone acoustics are considerably less forgiving than what you can get away with on the playback side!

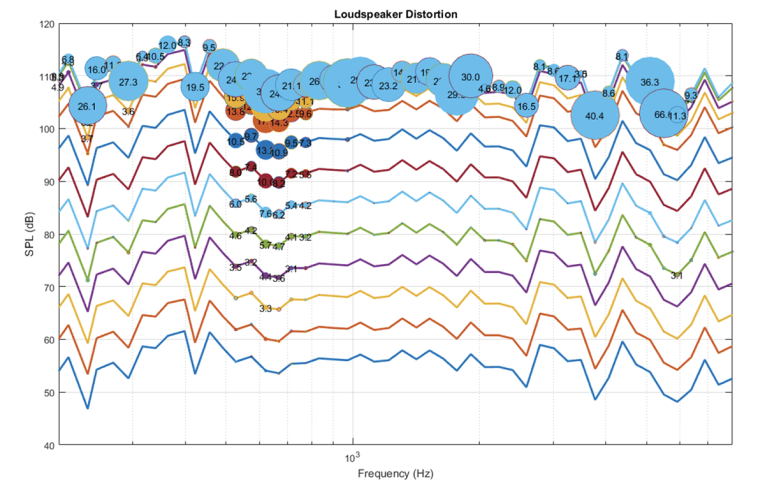

For example, your Bluetooth speaker is unlikely to lose any Amazon stars if there’s a little harmonic distortion around 600 Hz; nobody will even hear it! On the other hand, in the world of high-performance machine hearing, even imperceptible problems like this can have costly impacts on performance and perceived quality.
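To put a number on “a little harmonic distortion”, engineers commonly measure total harmonic distortion (THD): the combined level of a tone’s harmonics relative to its fundamental. The following is a minimal Python/NumPy sketch, not a DSP Concepts tool; the function name `thd_percent` and the 200 Hz test tone are hypothetical, and it assumes a clean capture spanning a whole number of cycles so every harmonic lands exactly on an FFT bin. Real measurement setups typically add windowing, averaging, and a calibrated measurement mic.

```python
import numpy as np


def thd_percent(signal: np.ndarray, fs: float, f0: float, n_harmonics: int = 5) -> float:
    """Estimate total harmonic distortion (THD) of a recorded test tone, in percent.

    Assumes the capture spans a whole number of cycles of f0, so the fundamental
    and each harmonic fall exactly on an FFT bin and no window is needed.
    """
    n = len(signal)
    spectrum = np.abs(np.fft.rfft(signal)) / n  # a sine of amplitude A shows up as A/2

    def magnitude_at(freq: float) -> float:
        return spectrum[int(round(freq * n / fs))]

    fundamental = magnitude_at(f0)
    # Harmonics 2*f0 .. n_harmonics*f0, skipping any above the Nyquist frequency.
    harmonics = np.array([magnitude_at(k * f0) for k in range(2, n_harmonics + 1)
                          if k * f0 < fs / 2])
    return float(100.0 * np.sqrt(np.sum(harmonics ** 2)) / fundamental)


if __name__ == "__main__":
    fs = 48_000
    t = np.arange(fs) / fs                            # exactly 1 second of samples
    tone = np.sin(2 * np.pi * 200 * t)                # 200 Hz fundamental
    distortion = 0.01 * np.sin(2 * np.pi * 600 * t)   # 3rd harmonic at 600 Hz, 40 dB down
    print(f"THD: {thd_percent(tone + distortion, fs, 200):.2f} %")  # prints: THD: 1.00 %
```

In this toy case, a 600 Hz component 40 dB below the fundamental reads as 1% THD. That is small for a listener, but it is still energy the speaker produces that was never in the signal it was asked to play.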

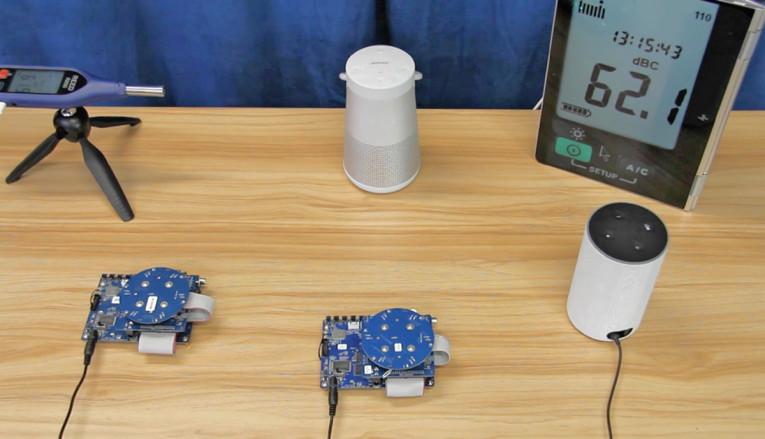

The top three acoustic problems with voice-controlled products

- Improperly Sealed Microphones. Microphones are typically sandwiched between a host circuit board and the product’s exterior shell. Proper gasketing and sealing are required to prevent sounds resonating inside the device from leaking into any of the microphones.

- Mechanically Coupled Microphones. In short, your speakers shouldn’t transmit sound to your microphones through the physical structure of your device. Buffers, bushings, and gaskets can mitigate this to an extent, but the relative placement of microphones and speakers is also critical.

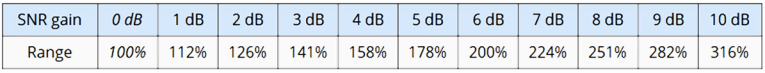

- Speaker Distortion. Acoustic Echo Cancellers (AECs) are what allow products to ignore their own speaker output. The secret behind this magic trick is that the system gives the AEC a preview of what the speakers are about to play, a “reference signal”, which the AEC then finds and removes from the mic recordings. However, when speakers distort, they’re not making the sounds the AEC was told to look for, so the AEC can’t fully cancel them. Ultimately, this means your customers will need to speak more loudly to be heard over the speakers.
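The reference-signal idea can be illustrated with a normalized LMS (NLMS) adaptive filter, one common textbook approach to echo cancellation. This is a minimal sketch under loud assumptions: one mic, one reference channel, no near-end speech, and no double-talk detection. It is not how any particular product or vendor implements its AEC, and the names `nlms_echo_canceller` and `residual_ratio` are hypothetical. It learns the speaker-to-mic echo path from the reference and subtracts its prediction from the mic signal.

```python
import numpy as np


def nlms_echo_canceller(mic: np.ndarray, ref: np.ndarray, n_taps: int = 64,
                        mu: float = 0.5, eps: float = 1e-6) -> np.ndarray:
    """Subtract an adaptive estimate of the speaker echo from the mic signal.

    mic: microphone samples (echo only, in this toy setup)
    ref: the reference signal sent to the speaker
    Returns the residual e[n] = mic[n] - w . x[n], where x[n] holds the
    n_taps most recent reference samples and w is updated with NLMS.
    """
    w = np.zeros(n_taps)          # running estimate of the speaker-to-mic echo path
    x = np.zeros(n_taps)          # most recent reference samples, newest first
    residual = np.zeros(len(mic))
    for n in range(len(mic)):
        x = np.roll(x, 1)
        x[0] = ref[n]
        e = mic[n] - w @ x                  # what is left after removing the predicted echo
        w += (mu / (eps + x @ x)) * e * x   # normalized LMS weight update
        residual[n] = e
    return residual


def residual_ratio(mic: np.ndarray, residual: np.ndarray, tail: int = 2000) -> float:
    """Residual power divided by echo power over the last `tail` samples (after convergence)."""
    return float(np.mean(residual[-tail:] ** 2) / np.mean(mic[-tail:] ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.standard_normal(10_000)
    # Hypothetical toy echo path (room + enclosure response), 16 decaying taps.
    echo_path = rng.standard_normal(16) * np.exp(-np.arange(16) / 4)

    # Linear speaker: the mic hears exactly a filtered copy of the reference.
    mic_linear = np.convolve(ref, echo_path)[: len(ref)]
    # Distorting speaker: its output is clipped before it reaches the mic.
    mic_clipped = np.convolve(np.clip(ref, -1.0, 1.0), echo_path)[: len(ref)]

    print("linear speaker residual ratio: ", residual_ratio(mic_linear, nlms_echo_canceller(mic_linear, ref)))
    print("clipping speaker residual ratio:", residual_ratio(mic_clipped, nlms_echo_canceller(mic_clipped, ref)))
```

With the linear speaker, the residual shrinks toward numerical noise. With the clipping speaker, a sizable fraction of the echo remains, because a linear filter driven by the reference cannot predict sounds the reference never contained. That leftover echo is what the user ends up having to talk over.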

Challenge #2: Planning and Design Considerations

Most product design decisions are important, but when it comes to adding a voice interface, product architects face a daunting set of interconnected decisions and often conflicting requirements.

“Engineering says a 4-mic array should be centered on the top of the product. UX says a button cluster should be centered on the top of the product. Marketing says we can only afford 2 mics. What do we do!?”

These sorts of tradeoffs are nothing new to experienced product architects, but adding voice processing creates a completely new set of dependencies, and the impacts may not always be obvious.

- Which microphone vendor offers the best value?

- Which class and model of microphone should I choose? Analog or digital? MEMS or electret? What minimum specs?

- How many mics do I actually need, and which geometry best supports my use cases?

- What even are my use cases?!

- How many revs of hardware should I plan for?

- The speaker in our product is really small and doesn’t play very loud - will distortion really disrupt voice? How do we prevent that?

- How much processing power do I need?

- Which IC should I use?

- How do I support multiple product variants?

- How long will software integration take?

Challenge #3: Delivering voice-enabled products takes time

Your product development team will need to overcome some difficult engineering challenges to deliver on schedule, and much of what separates high-performing teams from the rest is their ability to quickly root-cause problems, resolve them, and move on.

When your Voice Interface doesn’t work, the first questions are always ‘Why?’ and ‘Whose fault is it?’. Is there a flaw in the acoustics or mic drivers? Is the audio front end (AFE) properly extracting a clean voice signal? Every Wake Word Engine and Speech-to-Intent service performs differently, and what works well with one can produce poor results with another. Is yours struggling with a particular accent or command?

Unfortunately, even for highly skilled teams, debugging voice/input systems is notoriously difficult! If there’s a problem in your output system, you’ll have some leads to chase down based on what you hear/measure from the speakers.

On the input side, though, you have very little evidence to start with (either it heard you or it didn’t). There are many places in the voice-processing path where something could go wrong, and traditional debug methodologies, such as instrumenting audio code with debug hooks or probing PCBs, take time! Even just verifying that your acoustics are solid takes some time:

- Microphone Matching / Variance

- Microphone Gain / Sensitivity

- Microphone Clipping

- Microphone Noise Floor

- Microphone Frequency Response

- Microphone Sample Rate and Time Alignment

- Microphone Continuity

- Mechanical Isolation

- Acoustical Isolation

- Microphone Drivers / Channel mapping

- Speaker Linearity / THD

- Reference Signal Clipping

- Reference Signal Continuity

- Reference Signal Latency
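A few items on this list lend themselves to quick, automated sanity checks on captured audio. The sketch below is a hypothetical Python/NumPy example (all function names are made up for illustration), assuming each mic channel and the reference are available as float arrays normalized to ±1.0 full scale and captured at the same sample rate. It covers only clipping, level matching between mics, and reference latency; it is no substitute for proper acoustic measurements.

```python
import numpy as np


def clip_fraction(x: np.ndarray, full_scale: float = 1.0, margin: float = 0.999) -> float:
    """Fraction of samples at (or within 0.1% of) full scale; nonzero suggests clipping."""
    return float(np.mean(np.abs(x) >= margin * full_scale))


def rms_dbfs(x: np.ndarray, full_scale: float = 1.0) -> float:
    """RMS level in dB relative to full scale.

    Convention used here: a full-scale square wave reads 0 dB (other dBFS
    conventions reference a full-scale sine instead).
    """
    rms = np.sqrt(np.mean(np.square(x)))
    return float(20 * np.log10(max(rms, 1e-12) / full_scale))


def level_spread_db(channels: list[np.ndarray]) -> float:
    """Max minus min per-mic level for the same stimulus: a quick mic-matching check."""
    levels = [rms_dbfs(ch) for ch in channels]
    return max(levels) - min(levels)


def reference_latency(mic: np.ndarray, ref: np.ndarray) -> int:
    """Delay (in samples) of ref within mic, taken at the cross-correlation peak.

    Uses the absolute correlation so an inverted-polarity path is still found.
    """
    corr = np.correlate(mic, ref, mode="full")
    return int(np.argmax(np.abs(corr))) - (len(ref) - 1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    ref = 0.25 * rng.standard_normal(4_000)                   # what the speaker was told to play
    delay = 37                                                # hypothetical playback-to-capture delay
    mic_a = np.concatenate([np.zeros(delay), ref[:-delay]])  # mic hears the reference, delayed
    mic_b = 0.5 * mic_a                                       # a second mic, 6 dB less sensitive
    print(reference_latency(mic_a, ref))                      # 37
    print(round(level_spread_db([mic_a, mic_b]), 2))          # 6.02
    print(clip_fraction(mic_a))
```

`np.correlate` costs O(N·M), which is fine for short test captures; for long recordings an FFT-based correlation such as `scipy.signal.correlate` is much faster.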

How Do You Overcome These Challenges?

Include voice considerations early in your process. When it comes to integrating voice control into a product, “measure twice, cut once” holds as true as ever. At DSP Concepts, we’ve worked with product teams of all sizes and levels of expertise. Time and again, teams that attempt to add voice/microphones as an afterthought struggle to hit their schedule and budget targets. To avoid this common pitfall, be sure to include Voice UI considerations from the beginning of your design and decision-making processes.

Consider components based on more than just performance. During the execution phases of your product-development process, integration, debug, and final tuning are all time-critical activities that can make or break your schedule. For the best chance of success, choose hardware and software components that not only offer proven performance, but that are also well supported and easy to work with.

Be realistic about your team’s core competencies. If your team is competent with microphone acoustics, be sure to include those domain experts early in the planning process to avoid potentially costly design decisions. If your team is thin on experience with microphones or other voice-related domains, reduce risk by leveraging proven third-party technology partners. Innovation is a team sport, and it’s important to choose technology partners that complement and empower your current roster of all-stars.

Final Thoughts

Voice User Interfaces offer a streamlined, frictionless user experience and have quickly become a feature consumers expect. That rising demand comes with an ever-rising bar for performance and ever-shrinking product development timelines.

To include voice control in your next product development project, start with the fundamentals: define your use cases, requirements, and options. Then make well-informed decisions based on industry-standard guidelines. Finally, choose platforms and technology partners that complement your team’s core competencies and that can support your business’s product line and roadmap.

www.dspconcepts.com

About the Author

Chin Beckmann received a B.S. in Electrical Engineering from Boston University in 1988 and an MBA from Northeastern University in 1994 with a concentration in Entrepreneurship. She has more than 20 years of entrepreneurial experience in audio and DSP engineering and is currently the CEO, co-founder, and Board Director of DSP Concepts.