The trend toward immersive sound reproduction in cars is obvious, as car manufacturers have introduced height speakers and advanced multichannel loudspeaker setups in recent years. Augmented reality brings new kinds of applications and therefore new demands on in-car sound systems, which are used not only for entertainment but increasingly as a general communication channel between car and occupants. The use of immersive reproduction technologies will have a major impact on the interface design of future vehicle interiors.

However, using audio to create new interior experiences comes with major challenges, as the various usage scenarios are developed in different specialist departments of automotive manufacturers. In this context, the effort required to create unique audio experiences becomes a significant factor, calling for a new, unified interface for spatial presentation to keep production effort in check. Another key element is interactivity, as the connected-car concept requires intense interaction between car devices, traffic participants, and infrastructure, as well as between the vehicle and its occupants.

Interior of the Future

Vehicle interiors will change more than they have in decades. As intelligent assistance systems take over more and more of the driver’s tasks, the focus shifts away from the road and towards the interior experience. With the new demands on comfort and safety, audio will soon play a significant role. We will discuss the key use cases for audio and the new requirements they place on a general audio production platform.

Entertainment Audio

The development of in-car sound systems increasingly focuses on creating immersive listening experiences for occupants. However, a wide variety of audio formats is on the market, and audio content is available in both 2D and 3D. Future in-car sound systems should therefore be able to reproduce all relevant audio formats and all audio content, whether 2D or 3D, to provide occupants with a consistently high-quality 3D listening experience.

Assisted Audio

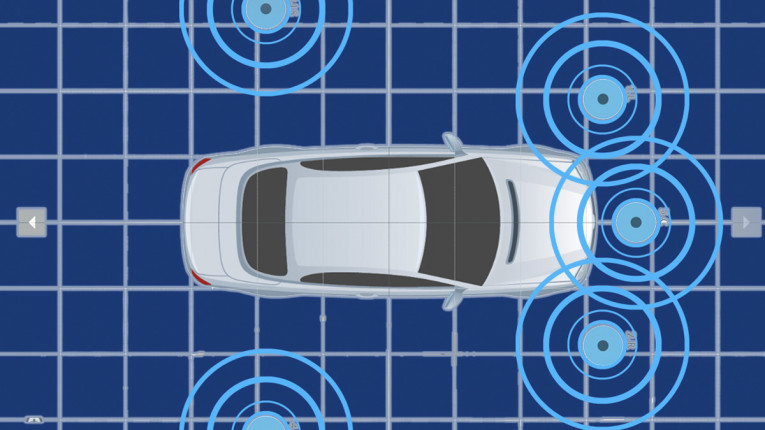

Today’s driver assistance systems often limit their feedback to visual warnings and information and do not fully exploit the potential of the acoustic channel, even though the ear is receptive around the clock and, unlike the eye, perceives information from all directions. Future driver assistance systems will therefore require interactive, 360-degree reproduction of acoustic events that are placed unambiguously in space in and around the vehicle, enabling a direct and more intuitive perception of the vehicle’s environment.

Social Audio

Nowadays, telephone calls via the vehicle’s hands-free system are part of everyday life. However, they often impose a high mental load on the driver, especially with several participants, because it is difficult to tell their contributions apart. In complex traffic situations in particular, this is an additional challenge for the driver. Reproducing multiple call participants at distinct, spatially correct positions lets listeners benefit from the so-called “cocktail party effect,” the ability to focus on one voice among several spatially separated talkers. This significantly reduces the driver’s listening effort, freeing up cognitive resources for the driving task.
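To make the idea concrete, the sketch below spreads the participants of a conference call evenly across a frontal arc so that each voice arrives from its own direction. The 120° arc width and the convention that positive azimuths point to the left are assumptions chosen for illustration, not values from the article.

```python
def participant_azimuths(num_participants: int, arc_deg: float = 120.0) -> list[float]:
    """Spread call participants evenly across a frontal arc centred straight ahead.

    Azimuths are in degrees; 0 is straight ahead and positive values point to
    the left (an assumed convention). The default arc width is illustrative.
    """
    if num_participants < 1:
        raise ValueError("at least one participant is required")
    if num_participants == 1:
        return [0.0]  # a single talker is simply placed in front
    step = arc_deg / (num_participants - 1)
    # First talker at the left edge of the arc, last one at the right edge.
    return [arc_deg / 2.0 - i * step for i in range(num_participants)]


print(participant_azimuths(3))  # [60.0, 0.0, -60.0]
```

Each azimuth would then be written into the position metadata of the audio object carrying that participant’s voice.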

Smart Interior Audio

Smart surfaces are increasingly finding their way into car interiors. Their control elements appear only when the driver needs them and then disappear again. Smart surfaces must therefore be intuitive to use and give the driver clear feedback on whether a function has been activated successfully. Because acoustic feedback is important for clear and comprehensible perception, future in-car systems must be able to provide spatially correct acoustic feedback for smart surfaces.

Comfort Audio

Car manufacturers increasingly focus on creating emotional driving experiences. The decisive factor here is that the driving experience can be individually adapted to the driver’s wishes. Sound is of great importance for individual well-being, and in combination with other functions in the vehicle, it can provide an emotional driving experience. One feature of future in-car sound systems should therefore be to link immersive sound events to various functions in the vehicle.

Channel-Based In-Car Sound Systems

The audio concept used in today’s cars follows the channel-based approach: the spatial information of the audio scene is mixed into and stored as loudspeaker signals (channels) that are valid only for one specific loudspeaker setup. One of the main disadvantages is that playback always requires the same loudspeaker arrangement as was used in production, which is impossible to achieve in today’s vehicles because of the limited loudspeaker mounting options.

Because such a mix does not carry over to a different playback setup, it must be recreated or adapted separately for each speaker setup of a vehicle. Besides being very time-consuming, this rarely yields the mix the audio engineer intended. As different car lines have different speaker setups and the number of in-car speaker channels keeps increasing, the problem will become more acute with new use cases that require immersive audio. Using object-based audio as a platform technology can overcome these limitations.

Object-Based Audio

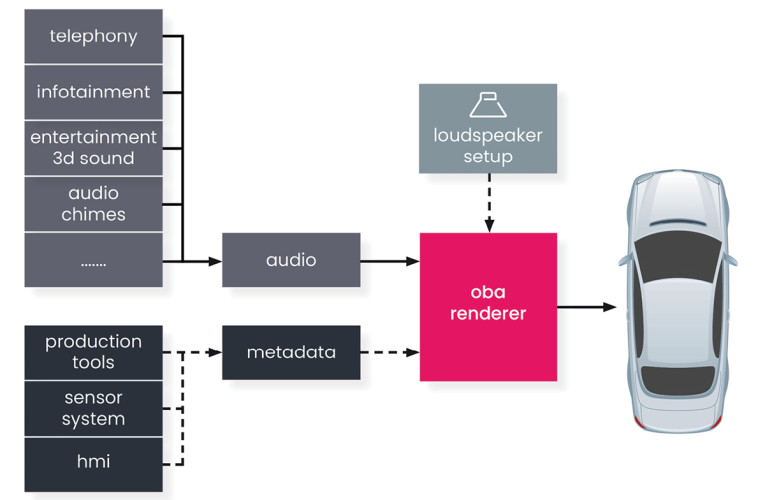

Object-based audio (OBA) is a concept for the storage, transmission, and reproduction of audio content. An audio object can be seen as a virtual sound source that can be placed interactively in space. An object is defined by an audio signal and corresponding metadata; the metadata stream contains the properties of all audio objects, such as position, type, or gain. The arrangement of all audio objects and their metadata is called an audio scene. Unlike channel-based mixes, object-based audio scenes are independent of the loudspeaker setup, so content only needs to be produced once and can then be rendered on any setup. The process of calculating the reproduction signals is termed rendering. This is depicted in Figure 1.
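The object and scene concepts map naturally onto a small data structure. The sketch below is a minimal illustration, assuming Cartesian positions in metres and linear gains; the class and field names are hypothetical and do not reflect the Fraunhofer IDMT metadata format. Note that the scene stores no loudspeaker information at all, which is what makes it setup-independent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AudioObject:
    """A virtual sound source: an audio signal plus its metadata."""
    name: str
    input_channel: int  # index of the object's signal in the multichannel input stream
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)  # x, y, z in metres (assumed frame)
    gain: float = 1.0  # linear gain
    kind: str = "point"  # object type; the set of allowed values is an assumption


@dataclass
class AudioScene:
    """All audio objects and their metadata; no loudspeaker layout is stored here."""
    objects: dict[str, AudioObject] = field(default_factory=dict)

    def add(self, obj: AudioObject) -> None:
        self.objects[obj.name] = obj

    def metadata_frame(self) -> list[dict]:
        """One snapshot of the metadata stream: properties only, no audio samples."""
        return [
            {"name": o.name, "type": o.kind, "position": o.position, "gain": o.gain}
            for o in self.objects.values()
        ]


scene = AudioScene()
scene.add(AudioObject("welcome_chime", input_channel=0, position=(1.0, 0.5, 0.3), gain=0.8))
print(scene.metadata_frame())
```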

The audio renderer is a piece of software that uses the loudspeaker coordinates of the current speaker setup to calculate the loudspeaker signals from the individual input audio signals in real time. This calculation is based on mathematical, physical, or perceptual models and controlled by the metadata of the spatial audio scene. The audio input stream can be provided by any multichannel audio player. In contrast to channel-based audio production, an object-based production does not result in ready-mixed loudspeaker signals. Instead, an audio signal and a set of corresponding metadata for each audio object are stored.
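The article does not say which model the renderer uses. As a stand-in, the sketch below implements pairwise 2D vector base amplitude panning (VBAP), a well-known published panning method: it finds the pair of neighbouring loudspeakers that encloses the source direction, solves for two gains, and normalizes them to constant power. Azimuths are in degrees with positive values to the left (assumed), and the loudspeakers are assumed to surround the listener with no gap wider than 180°.

```python
import math


def vbap_2d(source_az_deg: float, speaker_az_deg: list[float]) -> list[float]:
    """Pairwise 2D VBAP: constant-power gains for one source direction.

    Azimuths in degrees, positive to the left. Assumes at least two loudspeakers
    surrounding the listener with no gap wider than 180 degrees.
    """
    n = len(speaker_az_deg)
    order = sorted(range(n), key=lambda i: speaker_az_deg[i] % 360.0)
    px = math.cos(math.radians(source_az_deg))
    py = math.sin(math.radians(source_az_deg))
    gains = [0.0] * n
    for k in range(n):
        i, j = order[k], order[(k + 1) % n]  # neighbouring loudspeakers
        a1, a2 = math.radians(speaker_az_deg[i]), math.radians(speaker_az_deg[j])
        # Solve p = g1 * l1 + g2 * l2 via the inverse of the 2x2 base [l1 l2].
        det = math.cos(a1) * math.sin(a2) - math.sin(a1) * math.cos(a2)
        if abs(det) < 1e-9:
            continue  # a collinear pair cannot form a base
        g1 = (px * math.sin(a2) - py * math.cos(a2)) / det
        g2 = (py * math.cos(a1) - px * math.sin(a1)) / det
        if g1 >= -1e-9 and g2 >= -1e-9:  # both non-negative: source lies inside this pair
            g1, g2 = max(g1, 0.0), max(g2, 0.0)
            norm = math.hypot(g1, g2)  # constant-power normalisation
            gains[i], gains[j] = g1 / norm, g2 / norm
            return gains
    raise ValueError("no loudspeaker pair encloses the source direction")


print(vbap_2d(0.0, [30.0, -30.0, 110.0, -110.0]))  # roughly [0.707, 0.707, 0.0, 0.0]
```

Only the loudspeaker azimuths change between vehicles; the same object metadata yields new gains for each setup, which is the property the paragraph describes.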

Integrating object-based audio into today’s car loudspeaker systems is straightforward, as no special loudspeaker setups are needed. Setups can range from a minimal arrangement of a few speakers distributed in the horizontal plane (2D) up to multichannel setups including height speakers. Commissioning an object-based vehicle playback system does not differ significantly from the previous way of working. Since the object-based renderer takes over the spatial mapping of the audio scene, time-consuming tuning in the form of manual delay and gain adjustments is no longer necessary. Nevertheless, separate tuning tools are available that allow the audio objects or an extended rendering configuration to be adjusted intuitively.

Production Workflow

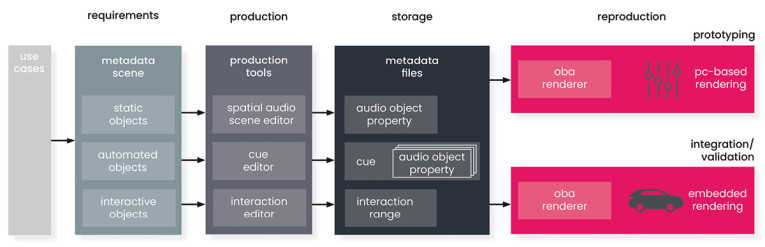

To use the object-based audio approach as a platform technology in vehicles, the workflow depicted in Figure 2 needs to be supported. For this, Fraunhofer IDMT provides a full production and reproduction software suite.

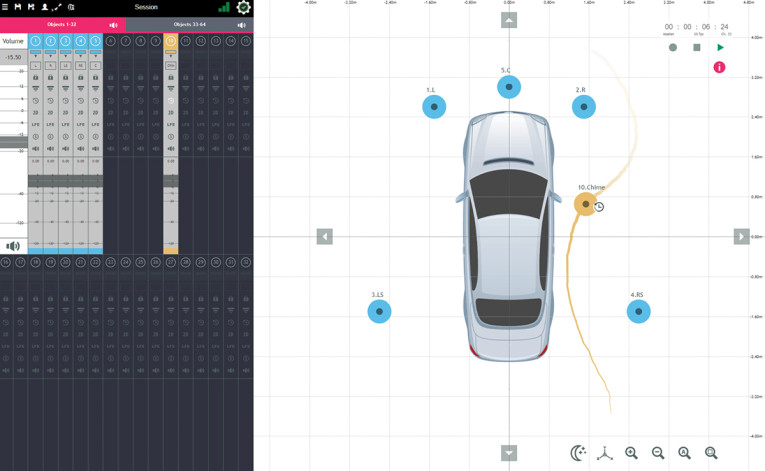

To arrange audio objects into audio scenes, the spatial audio scene editor shown in Figure 3 can be used. Its user interface is divided into two interaction areas: the left side is used to set most of the audio object properties, and the right side to position the audio objects, which are represented by colored circles.

Depending on the use case, virtual audio sources can be controlled in three basic ways: as static, automated, or interactive objects. Static audio objects, whose properties do not change over time, are simply placed at the desired location and given the appropriate attributes. An example from the immersive audio use cases is the reproduction of channel-based audio on arbitrary loudspeaker setups, which can be implemented by using static audio objects as virtual speakers at standardized positions. In Figure 3, this is illustrated by the five blue audio objects, whose placement represents a virtual speaker setup.
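The virtual-speaker idea can be sketched by turning each channel of a 5.0 signal into a static object. The azimuths are the nominal ITU-R BS.775 positions (front left/right at ±30°, centre at 0°, surrounds at ±110°); the 2 m radius, the coordinate convention (x forward, y to the left), and the dictionary layout are assumptions for illustration.

```python
import math

# Nominal ITU-R BS.775 5.0 azimuths in degrees (0 = front, positive = left).
# BS.775 allows the surrounds anywhere from 100 to 120 degrees; 110 is nominal.
ITU_5_0 = {"L": 30.0, "R": -30.0, "C": 0.0, "Ls": 110.0, "Rs": -110.0}


def virtual_speaker_objects(layout: dict[str, float], radius_m: float = 2.0) -> list[dict]:
    """Create one static audio object per channel of a channel-based format.

    Each object is fed by the matching input channel (in the order listed) and
    sits at a fixed position on a circle around the listening position.
    """
    objects = []
    for index, (channel, az_deg) in enumerate(layout.items()):
        az = math.radians(az_deg)
        objects.append({
            "name": f"virtual_{channel}",
            "input_channel": index,
            # x points forward, y to the left, z up (assumed convention)
            "position": (radius_m * math.cos(az), radius_m * math.sin(az), 0.0),
            "gain": 1.0,
            "static": True,  # metadata never changes over time
        })
    return objects


for obj in virtual_speaker_objects(ITU_5_0):
    print(obj["name"], [round(c, 2) for c in obj["position"]])
```

Because these objects never move, their rendering coefficients only need to be computed once per loudspeaker setup.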

Other use cases require the positions and properties of the audio objects to change over time in a pre-produced way. This can be realized using cues. A cue is a value sequence that is started by a trigger (e.g., an event or a button press) and can consist of stored metadata and actions over a defined period. An example is a welcome chime that moves around the occupants, shown as the yellow audio object in Figure 3. In this case, the metadata of the audio object are recorded over time as the producer draws its properties freehand.
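A cue can be modelled as a list of time-stamped keyframes that only starts playing once a trigger fires. The sketch below assumes linear interpolation between keyframes and reduces the metadata to azimuth and gain; the class names and keyframe format are hypothetical, and a freehand-drawn cue would simply contain many closely spaced keyframes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Keyframe:
    time_s: float       # time relative to the trigger
    azimuth_deg: float  # unwrapped, so 0 -> 360 means one full turn
    gain: float


class Cue:
    """Stored metadata sequence that starts when a trigger fires (hypothetical)."""

    def __init__(self, keyframes: list[Keyframe]):
        if not keyframes:
            raise ValueError("a cue needs at least one keyframe")
        self.keyframes = sorted(keyframes, key=lambda k: k.time_s)
        self.start_s: float | None = None

    def trigger(self, now_s: float) -> None:
        """Start the cue, e.g. on an event or a button press."""
        self.start_s = now_s

    def value_at(self, now_s: float) -> tuple[float, float] | None:
        """Linearly interpolated (azimuth, gain), or None before the trigger."""
        if self.start_s is None:
            return None
        t = now_s - self.start_s
        ks = self.keyframes
        if t <= ks[0].time_s:
            return ks[0].azimuth_deg, ks[0].gain
        for a, b in zip(ks, ks[1:]):
            if t <= b.time_s:
                w = (t - a.time_s) / (b.time_s - a.time_s)
                return (a.azimuth_deg + w * (b.azimuth_deg - a.azimuth_deg),
                        a.gain + w * (b.gain - a.gain))
        return ks[-1].azimuth_deg, ks[-1].gain  # hold the last value after the end


# Welcome chime: one full turn around the occupants in 4 s, loudest halfway.
chime = Cue([Keyframe(0.0, 0.0, 0.5), Keyframe(2.0, 180.0, 1.0), Keyframe(4.0, 360.0, 0.5)])
chime.trigger(now_s=10.0)
print(chime.value_at(11.0))  # (90.0, 0.75)
```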

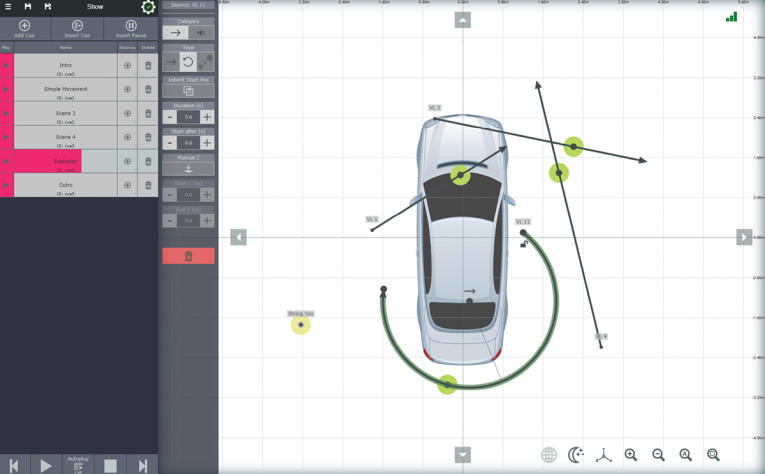

In contrast to freehand source editing, Figure 4 shows a mode for defining object properties based on linear or circular sequences of values. This approach is useful when audio objects are to follow template-like patterns. Defining motion paths and value curves parametrically lets the producer create complex audio scenes simply and quickly, and the results can be reused or modified. The circle and the three lines in Figure 4 show the predefined movement patterns of four audio objects.
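Parametric paths can be expressed as small factory functions: a handful of parameters produce a function that maps time to position, and a modified template is just a new parameter set. The sketch below is illustrative only; the function names, coordinate frame, and all numeric values (mirroring the circle and three lines described for Figure 4) are assumptions.

```python
import math
from typing import Callable

Point = tuple[float, float, float]
Path = Callable[[float], Point]


def _progress(t: float, duration_s: float) -> float:
    """Normalised progress in [0, 1]; the object holds its end position afterwards."""
    return min(max(t / duration_s, 0.0), 1.0)


def circle(center: Point, radius: float, start_deg: float, sweep_deg: float,
           duration_s: float) -> Path:
    """Circular motion in the horizontal plane defined by a few parameters."""
    def position(t: float) -> Point:
        a = math.radians(start_deg + _progress(t, duration_s) * sweep_deg)
        return (center[0] + radius * math.cos(a), center[1] + radius * math.sin(a), center[2])
    return position


def line(start: Point, end: Point, duration_s: float) -> Path:
    """Straight-line motion from start to end."""
    def position(t: float) -> Point:
        u = _progress(t, duration_s)
        return (start[0] + u * (end[0] - start[0]),
                start[1] + u * (end[1] - start[1]),
                start[2] + u * (end[2] - start[2]))
    return position


# Four objects: one circle and three lines (illustrative values).
paths = {
    "orbit": circle((0.0, 0.0, 0.0), 1.5, 0.0, 360.0, 8.0),
    "left_pass": line((3.0, 1.0, 0.0), (-3.0, 1.0, 0.0), 4.0),
    "right_pass": line((3.0, -1.0, 0.0), (-3.0, -1.0, 0.0), 4.0),
    "rise": line((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 2.0),
}
# Reuse: a slower variant of the same template only changes one parameter.
slow_orbit = circle((0.0, 0.0, 0.0), 1.5, 0.0, 360.0, 16.0)
print({name: path(2.0) for name, path in paths.items()})
```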

If the audio objects need to be controlled interactively, the interaction editor can be used to ensure that all interactive properties are restricted to sensible ranges. For example, movements may be confined to a region outside the vehicle, and gains may not exceed predefined limits. One application is a sound that draws attention to the position of a vulnerable road user. In such a scenario, the environmental sensor system detects the road user’s position, and this data can be used to map an audio signal to the position of the event in the real world. The sensor module can thus trigger audio playback while the virtual source moves to the required position. A translation unit can apply perceptually correcting operations, such as translation and rotation, to the incoming data, or simply convert the data into the metadata format understood by the rendering unit. This is depicted in Figure 5.
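The chain from sensor to renderer can be sketched in three steps: a translation unit converts the detection from sensor coordinates into vehicle coordinates (rotation by the sensor’s mounting yaw, then translation by its mounting position), editor-defined limits clamp position and gain, and the result is emitted as object metadata. The limit values, mounting position, and metadata keys are hypothetical, and the limit region is a simple bounding box rather than a true outside-the-vehicle volume.

```python
import math

# Hypothetical limits from an interaction editor: the alert may only move within a
# box around the vehicle (metres, vehicle frame) and may never exceed a gain of 0.8.
LIMITS = {"x": (-30.0, 30.0), "y": (-15.0, 15.0), "gain": (0.0, 0.8)}


def clamp(value: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, value))


def sensor_to_vehicle(x_s: float, y_s: float, mount_x: float, mount_y: float,
                      mount_yaw_deg: float) -> tuple[float, float]:
    """Translation unit step: rotate by the sensor's mounting yaw, then translate
    by its mounting position to get vehicle coordinates (x forward, y left)."""
    yaw = math.radians(mount_yaw_deg)
    x = mount_x + x_s * math.cos(yaw) - y_s * math.sin(yaw)
    y = mount_y + x_s * math.sin(yaw) + y_s * math.cos(yaw)
    return x, y


def alert_metadata(x_s: float, y_s: float, requested_gain: float,
                   mount: tuple[float, float, float] = (2.0, 0.0, 0.0),
                   limits: dict = LIMITS) -> dict:
    """Map a detected road user to metadata for a (hypothetical) alert object.

    `mount` is (mount_x, mount_y, mount_yaw_deg) of the sensor in the vehicle frame.
    """
    x, y = sensor_to_vehicle(x_s, y_s, *mount)
    return {
        "name": "vru_alert",
        "position": (clamp(x, *limits["x"]), clamp(y, *limits["y"]), 0.0),
        "gain": clamp(requested_gain, *limits["gain"]),
        "play": True,  # the detection itself triggers playback
    }


# A pedestrian detected 10 m ahead and 3 m to the left of a front sensor
# mounted 2 m ahead of the vehicle origin; the requested gain gets limited.
print(alert_metadata(10.0, 3.0, requested_gain=1.2))
```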

Prototyping, Integration, and Validation

All metadata files containing the spatial audio scenes are saved in the metadata storage, which acts as the interface between production and reproduction. On the reproduction side, the metadata storage provides the created control data and definitions to the object-based renderer when needed. The renderer receives the interaction parameters and, depending on their type and content, requests and executes the corresponding metadata actions. A distinction can be made between PC-based prototyping and the actual integration into an embedded platform. PC-based rendering can be used in a studio both to design the audio content and to produce its spatial mapping. Here, the renderer is designed to work with any digital audio workstation, so sound designers can use all their favorite tools.
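The division of labour between metadata storage and renderer can be sketched as follows. Everything here is hypothetical: the class names, the action format (object, property, value), and the two interaction types are illustrative assumptions, and the actual audio processing is left out.

```python
class MetadataStorage:
    """Interface between production and reproduction (hypothetical API).

    Stores named action lists; each action sets one property of one object.
    """

    def __init__(self) -> None:
        self._scenes: dict[str, list[tuple[str, str, object]]] = {}

    def save(self, scene_id: str, actions: list[tuple[str, str, object]]) -> None:
        self._scenes[scene_id] = list(actions)

    def request(self, scene_id: str) -> list[tuple[str, str, object]]:
        return self._scenes[scene_id]


class ObjectRenderer:
    """Keeps the current object properties; audio processing is omitted here."""

    def __init__(self, storage: MetadataStorage) -> None:
        self.storage = storage
        self.state: dict[str, dict[str, object]] = {}

    def _apply(self, obj: str, prop: str, value: object) -> None:
        self.state.setdefault(obj, {})[prop] = value

    def on_interaction(self, params: dict) -> None:
        """Act on interaction parameters depending on their type."""
        if params["type"] == "trigger":  # e.g. a button press: fetch stored actions
            for obj, prop, value in self.storage.request(params["scene_id"]):
                self._apply(obj, prop, value)
        elif params["type"] == "set":  # e.g. live sensor data: apply directly
            self._apply(params["object"], params["property"], params["value"])
        else:
            raise ValueError(f"unknown interaction type: {params['type']}")


storage = MetadataStorage()
storage.save("welcome", [("chime", "gain", 0.7), ("chime", "azimuth_deg", 0.0)])
renderer = ObjectRenderer(storage)
renderer.on_interaction({"type": "trigger", "scene_id": "welcome"})
renderer.on_interaction({"type": "set", "object": "chime", "property": "azimuth_deg", "value": 45.0})
print(renderer.state)  # {'chime': {'gain': 0.7, 'azimuth_deg': 45.0}}
```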

While PC-based rendering is used for production in a studio or for testing the created content, the integration step must be performed on the vehicle hardware for validation under real conditions. Both use cases share the same data basis, so when metadata scaling concepts are used, the results can be compared directly. As Figure 6 shows, studio productions can thus be transferred to and compared with in-vehicle playback; the existing modules greatly simplify this workflow and substantially reduce the time required.
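The article does not define its metadata scaling concepts. One plausible reading, sketched here purely as an assumption, is that object positions are stored in normalized room coordinates and scaled to the dimensions of each playback space, so the same scene data drives both the studio and the vehicle. The room dimensions below are made-up example values.

```python
Vec3 = tuple[float, float, float]

# Made-up half-dimensions (x, y, z) in metres of each playback space.
STUDIO: Vec3 = (3.0, 2.5, 1.5)
CABIN: Vec3 = (1.2, 0.8, 0.6)


def to_normalized(pos: Vec3, room: Vec3) -> Vec3:
    """Express a position relative to the room, so each axis spans -1..1."""
    return (pos[0] / room[0], pos[1] / room[1], pos[2] / room[2])


def from_normalized(norm: Vec3, room: Vec3) -> Vec3:
    """Map normalized scene coordinates onto a concrete playback space."""
    return (norm[0] * room[0], norm[1] * room[1], norm[2] * room[2])


# An object placed at the front wall of the studio, slightly to the left...
scene_pos = to_normalized((3.0, 1.0, 0.0), STUDIO)
# ...ends up at the front of the cabin, keeping its relative placement.
print(from_normalized(scene_pos, CABIN))
```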

Embedded Rendering

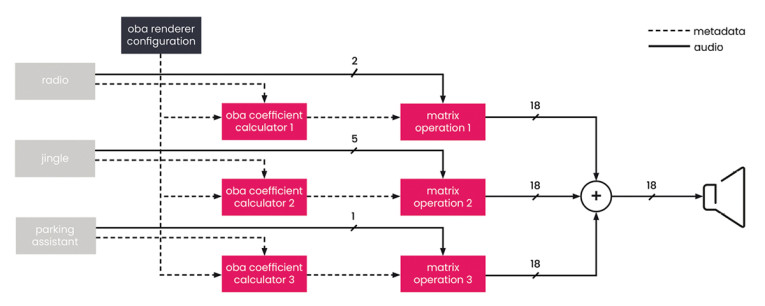

For rendering on embedded automotive devices, several implementations are available, including software modules for DSP Concepts’ Audio Weaver. The core of the object-based rendering system is the OBA Coefficient Calculator, which contains the model that calculates the rendering coefficients from the metadata stream. The rendering coefficients describe how the input signal of each audio object is distributed to the output (loudspeaker) channels. They are applied using signal processing matrices of size N × M, where N is the number of audio objects and M the number of output channels. While the matrix operation must be carried out in real time, the coefficient calculation only needs to run when a source object changes one or more of its properties.
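The split between a real-time matrix operation and an event-driven coefficient calculation can be sketched in plain Python. The coefficient model here is a deliberately simple stand-in (inverse-distance weights, normalized to constant power per object), not the model of the OBA Coefficient Calculator, and the class and function names are hypothetical. The N × M matrix has one row per audio object and one column per loudspeaker.

```python
import math


def calculate_coefficients(objects: list[dict], speakers: list[tuple]) -> list[list[float]]:
    """Stand-in model: an N x M gain matrix (rows = objects, columns = loudspeakers)."""
    matrix = []
    for obj in objects:
        # Closer loudspeakers get larger weights; a 1 mm floor avoids division by zero.
        weights = [1.0 / max(math.dist(obj["position"], spk), 1e-3) for spk in speakers]
        norm = math.sqrt(sum(w * w for w in weights))  # constant-power normalisation
        matrix.append([obj["gain"] * w / norm for w in weights])
    return matrix


class MatrixRenderer:
    def __init__(self, speakers: list[tuple]) -> None:
        self.speakers = speakers
        self.coeffs: list[list[float]] = []
        self.recalculations = 0

    def update_objects(self, objects: list[dict]) -> None:
        """Event-driven path: call only when an object property has changed."""
        self.coeffs = calculate_coefficients(objects, self.speakers)
        self.recalculations += 1

    def process(self, block: list[list[float]]) -> list[list[float]]:
        """Real-time path: each frame of N object samples becomes M speaker samples."""
        n_objects, n_speakers = len(self.coeffs), len(self.speakers)
        return [
            [sum(frame[n] * self.coeffs[n][m] for n in range(n_objects)) for m in range(n_speakers)]
            for frame in block
        ]


renderer = MatrixRenderer(speakers=[(1.0, 1.0, 0.0), (1.0, -1.0, 0.0)])
renderer.update_objects([{"position": (1.0, 0.0, 0.0), "gain": 1.0}])
for _ in range(3):  # several audio blocks, but no further coefficient updates
    out = renderer.process([[1.0], [0.5]])
print(out, renderer.recalculations)
```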

To provide maximum flexibility while conserving computing resources, the object-based rendering can be divided into several separate units. This allows different departments to be assigned their own rendering units and to work independently of each other, and parallelizing the work in this way speeds up implementation. Each unit is configured using the same data, and the results of all units are mixed into the final loudspeaker signals. Figure 7 shows an example of such a system.
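The sketch below illustrates the idea: each department’s unit holds its own coefficient matrix for its own objects, all units target the same loudspeaker setup, and their outputs are summed per loudspeaker. Unit names and matrix values are illustrative assumptions; in a real system each unit would also run its own coefficient calculation.

```python
def render_unit(coeffs: list[list[float]], frame: list[float]) -> list[float]:
    """Apply one unit's N_u x M matrix to one frame of its N_u object samples."""
    n_speakers = len(coeffs[0])
    return [sum(frame[n] * coeffs[n][m] for n in range(len(frame))) for m in range(n_speakers)]


def mix_units(units: dict[str, list[list[float]]], frames: dict[str, list[float]]) -> list[float]:
    """Render every unit independently and sum the results per loudspeaker."""
    widths = {len(c[0]) for c in units.values()}
    if len(widths) != 1:
        raise ValueError("all units must target the same loudspeaker setup")
    total = [0.0] * widths.pop()
    for name, coeffs in units.items():
        for m, value in enumerate(render_unit(coeffs, frames[name])):
            total[m] += value
    return total


# Two departments, each with its own unit, sharing a two-speaker setup (illustrative).
units = {
    "entertainment": [[1.0, 0.0], [0.0, 1.0]],  # 2 objects -> 2 speakers
    "assistance": [[0.5, 0.5]],                  # 1 object  -> 2 speakers
}
print(mix_units(units, {"entertainment": [0.2, 0.4], "assistance": [1.0]}))
```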

Availability

Using the object-based audio approach in vehicles will revolutionize the way audio is controlled for in-car sound systems. The technology is available as a software bundle supporting the full development process. This includes real-time implementations for development and series production, graphical authoring tools to create object-based scenes as well as tuning tools for the sound setup. The technology was developed by Fraunhofer IDMT. To support customers’ value chains in the best possible way, the technology will be made available soon through the company Sense One.

This article was originally published in audioXpress, September 2022.

About the Author

Christoph Sladeczek heads the Virtual Acoustics research group at Fraunhofer IDMT. His research interests include the investigation and development of innovative sound reproduction methods as well as real-time audio signal processing algorithms. He is author and co-author of several scientific papers and textbook chapters. Christoph is an active member of the German Society for Acoustics (DEGA) and a member of the Academic Board DACH of the SAE Institute. He also serves as a reviewer for the Audio Engineering Society (AES) and for public project proposals.