As hearable devices are increasingly used for voice communications on the move, consumers expect them to work in noisy public environments (e.g., the workplace, shopping malls, and public transportation) as well as in remote and home environments. Hearables are expected to provide the portability and convenience that consumers demand from their electronic and smart devices; however, the technology faces limitations. Most voice-enabled hearables on the market require a smartphone app or Wi-Fi connectivity to operate, and existing offline command sets are relatively small, with global adoption hampered by the technology's accent and language limitations.

The work-from-home phenomenon, the easing of the pandemic, and growing consumption of mobile games and streaming with the adoption of 5G are accelerating the market's growth, and the evolution of true wireless stereo (TWS) technology is leading the charge in meeting these consumer demands. With this in mind, audio and technology companies will be paying serious attention to the hearables market in the years to come.

TWS describes any technology that lets users listen to high-quality stereo sound wirelessly, and Bluetooth is typically used to transmit audio to TWS devices. TWS earphones have developed rapidly since their introduction in 2015 and took off in mainstream consumer markets when Apple introduced AirPods in 2016.

The global hearables market size was valued at $21.20 billion in 2018, and Allied Market Research projects it to reach $93.90 billion by 2026, growing at a compound annual growth rate (CAGR) of 17.2% from 2019 to 2026. According to Strategy Analytics, overall adoption of TWS headsets alone will grow 54% year-over-year in 2022.

The potential of TWS has not yet been fully realized, and innovation in several key technology areas, including Edge AI, should ensure that these devices continue to grow and evolve.

Limitations of Current Hearables on the Market

The term “hearables” was coined by technology analyst Nick Hunn in a 2014 blog post in which he declared the ear “the new wrist” for wearable technology. At the time, in-ear devices capable of interacting with smartphones or other technology had yet to dominate the market. Hearable devices now provide enhanced listening experiences, but the future of hearable technology is only beginning to emerge.

Advancements in hearable technology are constrained by the need for small-footprint software. Hearables must integrate a speaker, a microphone, and sensors, so only small audio integrated circuits (ICs) will fit in the device. Despite massive improvements in microchip and sensor technology, embedding various technologies into ever-smaller earbuds remains challenging, and battery life limits the performance of cloud-dependent hearables.

Hearables currently on the market do not offer truly flexible voice control. Users must either connect to a smartphone voice assistant or companion app, or tap the earbuds to carry out commands.

The current technology used in hearables is further restricted to certain languages and has difficulty understanding accents and speech impediments. Voice commands are also less reliable in noisy environments, which degrades the user experience.

Consumers increasingly expect smart devices to operate with low latency, be energy efficient, and deliver touch-free experiences.

With all of these limitations to overcome, the hearable market has yet to offer the smart technology consumers have come to expect from their devices.

With the Internet of Things (IoT), hearables can do more than amplify sound, and advances in Edge AI and tiny machine learning (TinyML) make it possible to embed advanced capabilities into very small devices such as earbuds.

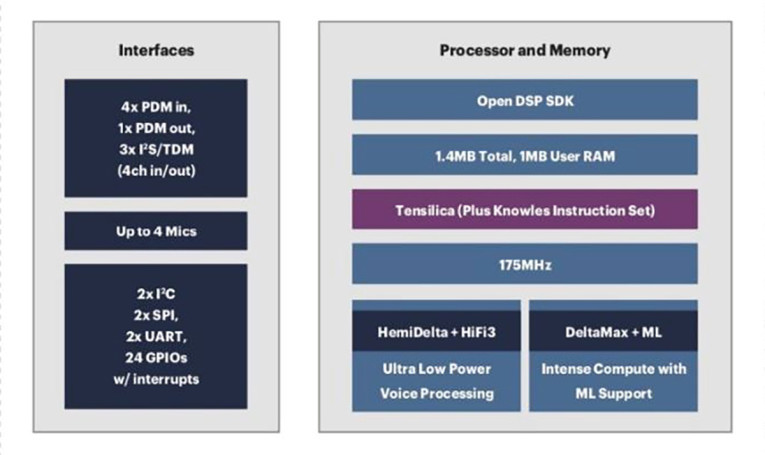

Knowles Corp., a market leader and global provider of advanced micro-acoustic microphones, speakers, and audio processing, has developed a TWS development kit that enables TWS manufacturers to embed and test advanced audio features. The kit includes the Knowles AISonic Audio Edge Processor IA8201, a high-performance, ultra-low-power, audio-centric DSP that supports up to four microphones and up to 1MB of usable memory, enabling device manufacturers to offer superior algorithms and features. When used in wireless earbuds, the IA8201 processor delivers low-power, premium wake-on-voice performance and advanced local commands, in addition to a host of front-end audio algorithms for enhanced voice quality (e.g., beamforming, bone conduction sensor fusion, and noise reduction).

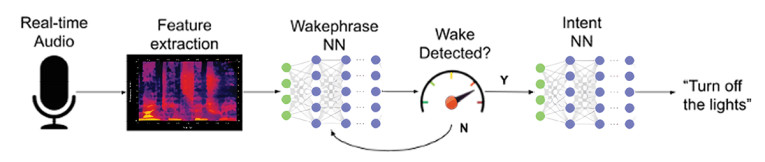

Fluent.ai, which develops advanced embedded speech recognition technology, is an emerging leader in the voice recognition space. The company’s unique speech-to-intent model maps a user’s speech directly to the desired action without speech-to-text transcription or a cloud connection. The technology is designed to run fully offline in small-footprint, low-power devices and to support any language or accent, providing an intuitive voice user interface for any device or application.

The two companies recently came together to usher in the future of voice-controlled hearables, delivering fully offline and app-free AI-powered voice control for TWS earbuds and other hearable products.

Fluent.ai’s patented speech-to-intent technology uses natural, flexible voice commands to trigger essential TWS actions, including activating noise cancellation, controlling music, accepting or declining calls, and checking battery level, without an accompanying smartphone app, cloud connectivity, or physical tapping of the earbuds. The technology runs locally on the Knowles AISonic Audio Edge Processor IA8201, which offers state-of-the-art noise cancellation capabilities, so it works entirely offline, with no cloud data or Wi-Fi connection required.
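To make the speech-to-intent idea more concrete, the sketch below shows only the final, on-device stage of such a pipeline: a model (not shown, and assumed here) produces one score per intent directly from audio, with no text transcript, and the device picks the best intent, rejects low-confidence utterances, and runs the matching TWS action locally. This is a minimal illustration under stated assumptions, not Fluent.ai's or Knowles' API; the `Intent` labels, the `select_intent` and `dispatch` functions, the handler strings, and the 0.6 threshold are all hypothetical.

```python
from enum import Enum
from typing import Callable, Dict, Optional


class Intent(Enum):
    # Hypothetical intent labels mirroring the TWS actions named in the article
    NOISE_CANCELLATION_ON = "noise_cancellation_on"
    PLAY_MUSIC = "play_music"
    PAUSE_MUSIC = "pause_music"
    ACCEPT_CALL = "accept_call"
    DECLINE_CALL = "decline_call"
    CHECK_BATTERY = "check_battery"


# Assumed rejection threshold: utterances scoring below it are ignored
CONFIDENCE_THRESHOLD = 0.6


def select_intent(scores: Dict[Intent, float],
                  threshold: float = CONFIDENCE_THRESHOLD) -> Optional[Intent]:
    """Return the highest-scoring intent, or None if none clears the threshold.

    `scores` stands in for a speech-to-intent model's output: one score per
    intent, computed directly from audio with no intermediate transcript.
    """
    if not scores:
        return None
    best = max(scores, key=lambda intent: scores[intent])
    return best if scores[best] >= threshold else None


def dispatch(intent: Optional[Intent],
             handlers: Dict[Intent, Callable[[], str]]) -> str:
    """Run the local action for the selected intent; no network access is involved."""
    if intent is None or intent not in handlers:
        return "ignored"
    return handlers[intent]()


# Hypothetical device actions; real firmware would call hardware routines here
handlers: Dict[Intent, Callable[[], str]] = {
    Intent.NOISE_CANCELLATION_ON: lambda: "ANC enabled",
    Intent.PLAY_MUSIC: lambda: "playing",
    Intent.PAUSE_MUSIC: lambda: "paused",
    Intent.ACCEPT_CALL: lambda: "call accepted",
    Intent.DECLINE_CALL: lambda: "call declined",
    Intent.CHECK_BATTERY: lambda: "battery level reported",
}

if __name__ == "__main__":
    # Illustrative scores for an utterance such as "turn on noise cancelling"
    scores = {Intent.NOISE_CANCELLATION_ON: 0.91,
              Intent.PLAY_MUSIC: 0.05,
              Intent.CHECK_BATTERY: 0.04}
    print(dispatch(select_intent(scores), handlers))  # prints "ANC enabled"
```

The rejection threshold is the key design choice in this sketch: in noisy environments, background speech that no intent matches confidently is ignored rather than triggering an action. The value used here is arbitrary and would need tuning on real data.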

Moreover, the multilingual, accent-agnostic voice recognition technology offers flexible voice commands for a better user experience anytime, anywhere. Fluent.ai’s software also offers one of the largest command sets for TWS devices on the market.

Future of Voice Recognition Technology and Smart Devices

Developers of voice recognition technology that lets product makers deliver noise-robust systems with multilingual support, low latency, and flexible command sets, while reducing costs and shortening time to market, are at the leading edge of future advancements in hearables, smart devices, and voice assistants.

Technologies such as those mentioned in this article point toward a future in which AI devices no longer need to depend on an Internet connection, offering consumers the privacy they demand while enhancing the functionality of their smart devices. Aside from the appeal of more natural interaction with hearables and voice assistants, embedded voice recognition technology offers real-world benefits that encourage wider adoption. Because processing is performed locally, devices running Edge AI software can be deployed with fewer geographical hurdles, since no network infrastructure is required, and language- and accent-agnostic solutions allow for truly global adoption.

This article was originally published in audioXpress, April 2022.

About the Author

Probal Lala, CEO of Fluent.ai, is a telecom executive turned serial technology entrepreneur and investor with more than 30 years of broad tech leadership experience building high-impact organizations and growing bottom-line results. He has been an active angel investor for the past 14 years, most recently serving as Chair of the Maple Leaf Angels Capital Corp. Before this position, Probal held a number of executive leadership positions, including CEO of About Communications, a national provider of phone and internet services to Canadian businesses; Vice President of Sales at Alcatel-Lucent Canada; General Manager and CTO at Stentor Services, Inc.; and Vice President and General Manager of Internet Operations at Bell Canada.