In 2017, the Society of Motion Picture and Television Engineers (SMPTE) approved the first set of standards documents for SMPTE ST 2110, a set of standards for interoperable IP media transport in broadcast live production. This included SMPTE ST 2110-30, a specification for uncompressed audio transport that essentially started with AES67 and then added a few constraints and changes to better match particular requirements of audio in the broadcast production context.

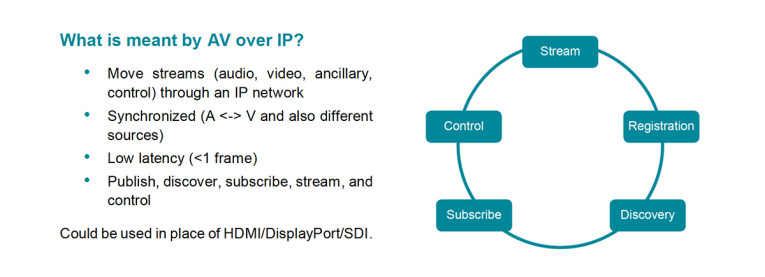

Fast-forward to 2019, when AIMS launched IPMX (Internet Protocol Media Experience), the name for a collection of standards and specifications organized into profiles, the first of which is for Pro AV. For the most part, IPMX is to SMPTE ST 2110 what SMPTE ST 2110-30 is to AES67: it starts from the existing standard and adds constraints and changes for a new context. For video, IPMX added requirements to support RGB 4:4:4 video and wide senders (to ease software implementations), and extensions for EDID and HDCP. However, what may have a greater future impact are the new modes of operation that are designed to support asynchronous video and audio sources.

In live production, synchronous sources greatly simplify system operation and increase performance. However, in Pro AV, synchronous sources are not the norm. When adapting asynchronous sources, such as laptops or any other HDMI/DisplayPort source, to a system that requires synchronous operation, such as SMPTE ST 2110 and AES67, a time base corrector (TBC) is required. For video, this device will typically add at least a frame of latency and occasionally repeat or skip frames to retime the content. For audio, it adds latency and requires resampling. The skipped or repeated frames and the resampled audio can degrade quality, while the added latency degrades the experience for anyone using a mouse or otherwise attempting to interact in real time with what is shown on the screen and heard through the speakers.

In most workflows outside of live production, it is better to eliminate this processing and instead keep the timing from the original source, especially when audio and video originate from the same media clock. The trade-off? When sources are asynchronous, seamless switching becomes impossible, as receivers need to recover timing from the new stream’s media clock.

To support high-performance asynchronous systems and to aid with clock recovery, IPMX reintroduces RTCP sender reports by requiring that all senders MUST include them, whereas AES67 stated that senders SHOULD include them, and SMPTE ST 2110 required only that receivers tolerate their existence. The RTCP sender report contains an RTP timestamp that is synchronous to the media clock and the PTP time that corresponds to that RTP timestamp at the IPMX sender. This allows the IPMX receiver to correlate the RTP timestamp to PTP time, allowing for precise alignment of media streams and quick recovery when switching between asynchronous sources. When PTP is absent, precise alignment between multiple source devices is not possible, but an internal reference clock can be used instead of PTP for clock recovery at the receiver.
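The correlation described above can be illustrated with a minimal Python sketch. It assumes a simplified sender report that carries the reference time as integer nanoseconds (a real RTCP sender report encodes it in a 64-bit NTP-format field), and the names `SenderReport`, `signed_rtp_delta`, and `rtp_to_reference_ns` are hypothetical, not taken from any IPMX specification or library.

```python
from dataclasses import dataclass

RTP_WRAP = 1 << 32  # RTP timestamps are 32-bit counters that wrap around


@dataclass(frozen=True)
class SenderReport:
    """Simplified, hypothetical view of the two fields used for correlation."""
    rtp_timestamp: int  # media-clock ticks (32-bit)
    ptp_time_ns: int    # sender's reference time for that tick, in nanoseconds


def signed_rtp_delta(later: int, earlier: int) -> int:
    """Return later - earlier in ticks, interpreted in [-2**31, 2**31)."""
    delta = (later - earlier) % RTP_WRAP
    if delta >= RTP_WRAP // 2:
        delta -= RTP_WRAP
    return delta


def rtp_to_reference_ns(rtp_timestamp: int, report: SenderReport,
                        clock_rate_hz: int) -> int:
    """Map a packet's RTP timestamp onto the sender's reference timeline."""
    ticks = signed_rtp_delta(rtp_timestamp, report.rtp_timestamp)
    return report.ptp_time_ns + (ticks * 1_000_000_000) // clock_rate_hz


# Example: 48 kHz audio, with a report taken just before the counter wraps.
report = SenderReport(rtp_timestamp=4_294_967_000, ptp_time_ns=1_000_000_000)
one_second_later = (report.rtp_timestamp + 48_000) % RTP_WRAP
print(rtp_to_reference_ns(one_second_later, report, 48_000))
```

Because every stream from a PTP-locked sender maps onto the same timeline, a receiver could use a mapping like this to line up audio and video packets. When PTP is absent, the same arithmetic applies, but the result is only meaningful relative to that one sender's internal clock.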

Does this mean that IPMX doesn’t really need PTP? No! Like SMPTE ST 2110 and AES67, IPMX requires that PTP be used when it is available. When sources can be synchronized with PTP, IPMX takes advantage of that to achieve the precise source alignment, low latency, and seamless switching performance of SMPTE ST 2110. Even when there is a mix of synchronous and asynchronous devices, IPMX gives system controllers and applications the information needed to reason about relative sample rates between sources so that efficient processing can be achieved.
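As one illustration of reasoning about relative sample rates, the sketch below estimates each source's media clock rate from two of its sender reports and expresses the difference in parts per million. It assumes both sources timestamp their reports against the same PTP time, that fewer than 2^32 ticks elapse between the two reports, and that each report is reduced to a hypothetical `(rtp_timestamp, ptp_time_ns)` pair. The function names are illustrative only.

```python
RTP_WRAP = 1 << 32  # RTP timestamps are 32-bit counters that wrap around


def measured_rate_hz(first, second):
    """Estimate the media clock rate from two (rtp_timestamp, ptp_time_ns) pairs.

    Assumes 'second' was taken after 'first' and that the RTP counter
    wrapped at most once between them.
    """
    ticks = (second[0] - first[0]) % RTP_WRAP
    elapsed_ns = second[1] - first[1]
    if elapsed_ns <= 0:
        raise ValueError("reports must be in increasing time order")
    return ticks * 1e9 / elapsed_ns


def rate_offset_ppm(rate_a_hz, rate_b_hz):
    """How much faster source A runs than source B, in parts per million."""
    return (rate_a_hz / rate_b_hz - 1.0) * 1e6


# Source A is locked to PTP; source B is a free-running nominal 48 kHz device.
locked = measured_rate_hz((0, 0), (480_000, 10_000_000_000))
free_running = measured_rate_hz((1_000, 0), (481_048, 10_000_000_000))
print(locked, free_running, rate_offset_ppm(free_running, locked))
```

An offset estimated this way could, for example, drive the ratio of a sample rate converter only where one is actually needed, rather than resampling every source by default.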

By taking advantage of the professional audio and broadcast roots of AES67 and ST 2110, IPMX gains the benefits of a protocol designed for professionals, while moving into areas where high performance and plug-and-play reign supreme.

About the Author

Andrew Starks is director of product management for Macnica and is a board member and marketing workgroup chair at AIMS. He leads the company’s standards efforts and has been a significant contributor to AIMS’s Pro AV initiative, including the development of the IPMX roadmap and its marketing plan.

About the Alliance for IP Media Solutions

The Alliance for IP Media Solutions (AIMS) is a not-for-profit organization dedicated to the education, awareness, and promotion of industry standards for the transmission of video, audio, and ancillary information over an IP infrastructure, as well as products based on those standards. The group represents the interests of broadcast and Pro AV companies and technology suppliers that share a commitment to facilitating the industry's transition from baseband to IP through industry standards and interoperable solutions that enable the rapid evolution to open, agile, and versatile production environments.

Resources

More information about standards and open specifications is available at:

AIMS: aimsalliance.org/educational-library

IPMX: ipmx.io

AES: www.aes.org/standards/blog

AMWA: github.com/AMWA-TV/nmos

EBU: tech.ebu.ch

SMPTE ST 2110: www.smpte.org/smpte-st-2110-faq

VSF Technical Recommendations: videoservicesforum.org/technical_recommendations.shtml

This article was originally published in The Audio Voice newsletter (#395), October 20, 2022.