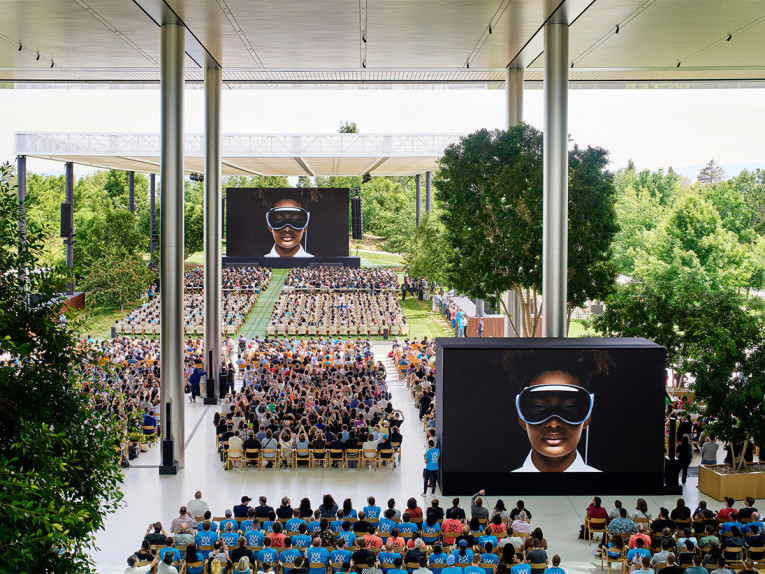

Normally, Apple's annual WWDC events offer a lot of insight into the incredible technologies the company develops, always leveraging its ability to master hardware, silicon, product design, application software, firmware, and operating systems. This year there were more innovations than ever and a lot more hardware than usual, something Apple traditionally reserves for dedicated events throughout the year. But at WWDC 2023 Apple had multiple goals, starting with the important conclusion of the Mac transition to Apple Silicon and, obviously, the unveiling of the Apple Vision Pro spatial computing platform. There were also loads of interesting audio announcements in each operating system update and product announcement.

I'll start with the new Apple Vision Pro, a completely new platform unlike anything the company has presented before. It allows Apple to enter a space where the key use case is not at all obvious, but we've seen that before (the iPad and Apple Watch come to mind). More importantly, Vision Pro creates a new platform that shows what is possible with today's most advanced technology, even if that is still not nearly enough and the use case is clearly not yet convincing.

Apple is clearly not going after the "virtual reality" or "metaverse" hype, and designed a system that will appeal immediately to all 3D stereoscopic enthusiasts. There are not many, as we learned from the flop of 3D TV sales, but at least that crowd will finally have a way to enjoy all those forgotten 3D movies. The new concept will also expand the augmented reality and mixed reality tools that Apple has consistently been building into its Mac, iPad, and even iPhone frameworks.

The only convincing reason to create Vision Pro is that the price of Apple not being in that space was simply too high. It's important to keep a technology lead where innovation happens, while keeping up with market perceptions. It is much like Apple's approach to artificial intelligence, a technology it applies extensively across all its developments and even empowers with its Mac computer range, as it clearly stated at WWDC 2023.

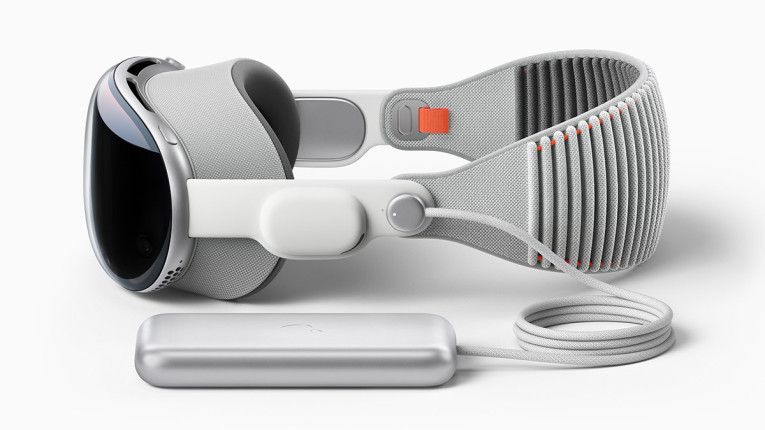

The Vision Pro goggles are an extraordinary feat of engineering, involving more than 5,000 patents (half of which will quickly be invalidated by judges when Google or Xiaomi clone the features), advanced materials, and countless design firsts, such as the gesture-based interaction interface or the introduction, in collaboration with Zeiss, of "Optical Inserts" for people who require vision correction, so they can fully enjoy the experience offered by Vision Pro. Many of the innovations developed to make the Vision Pro platform a reality will easily find applications in other Apple products (and very likely already have).

As Apple CEO Tim Cook says, "Built upon decades of Apple innovation, Vision Pro is years ahead and unlike anything created before — with a revolutionary new input system and thousands of groundbreaking innovations. It unlocks incredible experiences for our users and exciting new opportunities for our developers."

On that note, Vision Pro is essentially a head-mounted structure that sits above the ears, leaving users fully aware of the sounds in their surrounding environment. Not isolating the user completely is an essential part of the product's philosophy, which is oriented more toward augmented and mixed reality than virtual reality. It also reflects the fact that Vision Pro is a platform intended to be used for work, allowing the user to interact with other systems and devices, such as a laptop.

Obviously, Apple also pitches Vision Pro for entertainment applications, including gaming. Complementing the two ultra-high-resolution displays, Apple says that Vision Pro "can transform any space into a personal movie theater with a screen that feels 100 feet wide and an advanced Spatial Audio system." This is intriguing, because sound is an essential dimension when watching a movie or a sports event, as Apple shows. Apple does show a sequence of a user on a plane wearing Vision Pro and AirPods Pro to enjoy a movie, presumably because the AirPods Pro add noise cancellation to complete the required fully immersive experience.

Apple even mentions that Vision Pro features "Apple's first three-dimensional camera," and adds that the product "lets users capture, relive, and immerse themselves in favorite memories with Spatial Audio," although no further details were offered about sound capture. We only know there are six microphones, and we should assume that any "spatial audio" content is not generated by Vision Pro itself.

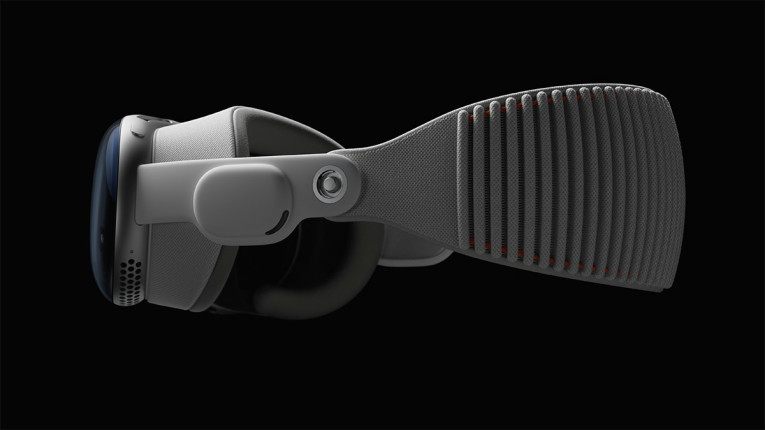

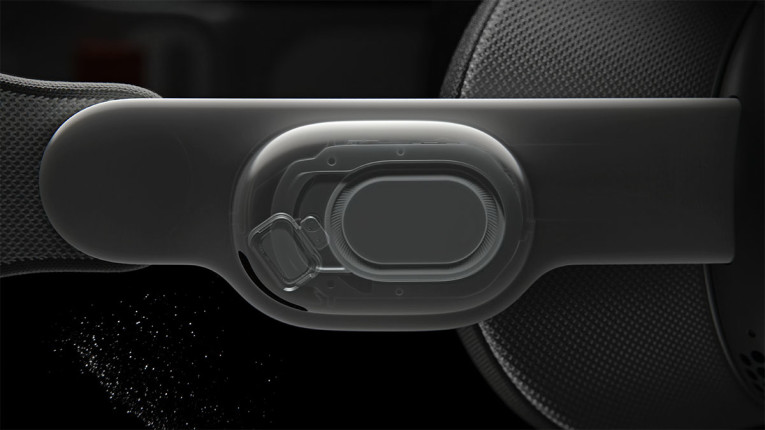

The two transducer areas, which Mike Rockwell, VP of Apple's Technology Development Group, calls dual "audio pods," use two transducers per side, as Apple's X-ray video reveals. The larger unit is a thin diaphragm, similar to but bigger than a laptop speaker, while the second, positioned at an angle closer to the ear, is a much smaller high-frequency unit, strategically placed close to the only opening vent that projects sound to the ears. Since the transducers are positioned on the outside of the flexible headband and not pressed against the user's head, we can rule out the use of bone conduction or any haptic transducer in that area. Therefore, the "mind-boggling audio fidelity" is produced entirely by an audio system that "hangs" above the ears. This is an important breakthrough in headset design, and it likely requires a very sophisticated processing system to generate a convincing sound field directed at the user's ears rather than simply projecting sound into the outside world. Some spill will be inevitable, which also helps explain the need to use AirPods when close to other people, like on a plane.

Not blocking the ears was obviously part of Apple's intention, generating a sort of "ambient" sound that helps support the mixed reality experience, such as allowing other people to interact with someone wearing a Vision Pro. For example, when the user takes a FaceTime call, the experience is augmented with Spatial Audio, "so it sounds as if participants are speaking right from where they are positioned."
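Apple has not described how Vision Pro places FaceTime voices, and real spatial audio relies on head tracking and head-related transfer functions (HRTFs). As a much simpler stand-in, the classic constant-power stereo pan law illustrates the basic idea of tying a voice's level in each ear to where the participant sits. The function names and the -90 to +90 degree azimuth convention are our own assumptions for illustration.

```python
import math


def constant_power_pan(azimuth_deg: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a source at azimuth_deg.

    Simplified stand-in for spatial rendering (not Apple's method):
    -90 = hard left, 0 = straight ahead, +90 = hard right.
    Values outside that range are clamped.
    """
    az = max(-90.0, min(90.0, azimuth_deg))
    # Map -90..+90 degrees onto a pan angle of 0..pi/2.
    theta = (az + 90.0) / 180.0 * (math.pi / 2)
    # cos/sin keep left^2 + right^2 == 1, so perceived loudness stays constant.
    return math.cos(theta), math.sin(theta)


def place_voice(samples: list[float], azimuth_deg: float) -> list[tuple[float, float]]:
    """Turn a mono voice signal into stereo frames positioned at azimuth_deg."""
    left, right = constant_power_pan(azimuth_deg)
    return [(s * left, s * right) for s in samples]


if __name__ == "__main__":
    for az in (-90, -30, 0, 30, 90):
        l, r = constant_power_pan(az)
        print(f"azimuth {az:+4d} deg -> L {l:.3f}  R {r:.3f}")
```

A participant placed at +30 degrees comes out louder in the right channel; HRTF-based rendering adds the timing and spectral cues that simple panning cannot provide.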

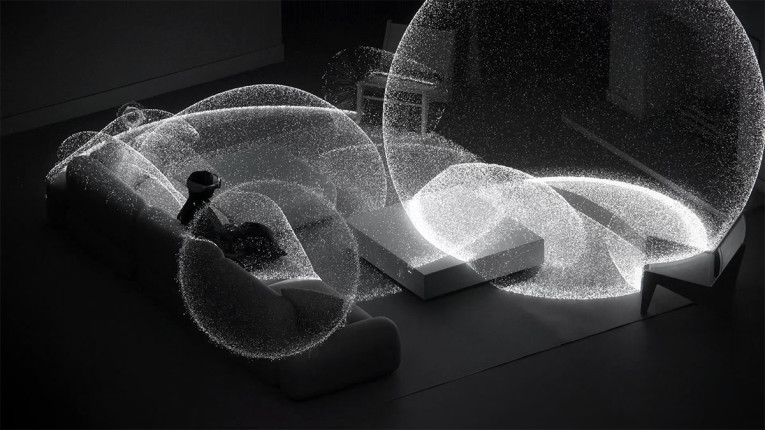

In the presentation, Mike Rockwell describes the audio experience as "ambience spatial audio," creating the illusion that "sounds are coming from all around us." This obviously requires a combination of powerful algorithms that adjust the audio to the many possible environments, content types, and experiences, including room sensing. "Beyond personalizing sound to each user, Vision Pro matches the sound to the room, by analyzing the features and materials of your space, using a technique called audio ray-tracing, precisely matching sound to the room," Rockwell says in the presentation.
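Apple did not explain how its audio ray tracing works. As a far simpler, classical stand-in (Sabine's reverberation formula, which is not ray tracing), the sketch below shows why a room's volume and surface materials matter: together they determine how long sound lingers. The room dimensions and absorption coefficients in the example are illustrative assumptions, not measured values.

```python
def sabine_rt60(volume_m3: float, surfaces: list[tuple[float, float]]) -> float:
    """Estimate reverberation time RT60 (seconds) with Sabine's formula.

    surfaces: (area in m^2, absorption coefficient 0..1) for each surface.
    RT60 = 0.161 * V / A, where A = sum(area * alpha) in metric sabins.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)
    if absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / absorption


if __name__ == "__main__":
    # Hypothetical 5 m x 4 m x 3 m living room; coefficients are rough guesses.
    floor = (20.0, 0.30)    # carpeted floor
    ceiling = (20.0, 0.05)  # plaster ceiling
    walls = (54.0, 0.05)    # painted walls: 2*(5*3) + 2*(4*3) = 54 m^2
    print(f"RT60 ~ {sabine_rt60(60.0, [floor, ceiling, walls]):.2f} s")
```

More absorbent materials (a larger A) shorten the reverberation time. Ray tracing goes further by modeling the room's geometry and individual reflections, which Sabine's single-number estimate ignores.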

All these features are powered by Apple silicon in a unique dual-chip design: an M2 processor complemented by a new R1 chip that processes inputs from the 12 cameras, five sensors, and six microphones in real time. Still, we will not learn much more about the audio experience until we can test one, and apparently that will be next year, when the US $3,499 Apple Vision Pro goes on sale.

As one expects from any WWDC event, Apple delivered on the operating system updates, introducing more of what is important for users and developers - and then some. These OS updates are available immediately to developers, and a public beta will be available in July, with general availability scheduled for October 2023.

Starting with iOS 17, Apple made the iPhone more intuitive and practical, introducing major updates to its communications apps (Phone, FaceTime, Messages), easier sharing with AirDrop, more intelligent text input, Live Voicemail, improved voice transcription, and much more. Siri can now be activated by simply saying “Siri.” No more "Hey Siri." Once activated, users can issue multiple commands in succession without needing to reactivate the assistant.

Accessibility updates include Assistive Access, a customizable interface that helps users with cognitive disabilities use an iPhone with greater ease and independence; Live Speech, which gives nonspeaking users the option to type and have their words spoken in person, or on phone and FaceTime calls; Personal Voice, which gives users at risk of speech loss the option to create a voice that sounds like theirs; and Point and Speak, which helps users who are blind or have low vision read text on physical objects by pointing.

On the audio front, iOS 17 is a major release that introduces Live Voicemail, with the ability to see a real-time transcription as someone leaves a voicemail and the option to pick up while the caller is still leaving their message. With the power of the built-in Neural Engine, Live Voicemail transcription is handled on-device and remains entirely private. FaceTime now supports audio and video messages, so when users call someone who is not available, they can leave a message to be watched or heard later.

In an exciting additional update to Apple TV 4K, FaceTime now extends to the TV, the biggest screen in the home. Powered by Continuity Camera, users can initiate a video call directly from Apple TV, or start the call on iPhone and then hand it off to Apple TV, to see friends and family on their television. With Center Stage supported by the very high-resolution iPhone camera, users will have perfect framing even as they move around the room.

Updates to AirDrop let users share a file with a colleague or send photos to a friend in seconds by simply bringing two iPhones together, or an iPhone and an Apple Watch. With NameDrop, users can also share a contact card (including a personal photo) the same way. With the same gesture, users can share content or start SharePlay to listen to music, watch a movie, or play a game together while their iPhones are in close proximity.

Apple Music will also be updated with Collaborative Playlists to enable listening to music with friends and colleagues, while SharePlay in the car allows all passengers to easily contribute to what’s playing. Listeners can control the music from their own devices, even if they don’t have an Apple Music subscription. Sharing content using AirPlay is even easier with on-device intelligence now learning a user’s preferences. AirPlay will soon also work with supported televisions in select hotels, allowing users to easily enjoy content on the TV when traveling.

On the Apple Watch front, watchOS 10 introduces support for Mobile Device Management (MDM), enabling enterprise customers to remotely and centrally install apps and configure accounts on a fleet of devices, with features such as passcode enforcement, and configuring Wi-Fi and VPN settings. Users can now initiate playback of a FaceTime video message and view it directly on Apple Watch. Additionally, Group FaceTime audio is now supported on Apple Watch.

Arriving in fall 2023, the new macOS Sonoma adds more powerful features and productivity tools that will be highly appreciated, including dynamic video conferencing features such as Presenter Overlay, to elevate the presence of users when showcasing their work during video calls by including them on top of the content they’re sharing.

Designed to enable hybrid in-studio and remote pro workflows, macOS Sonoma brings a new high-performance mode to the Screen Sharing app, which leverages the advanced media engine in Apple silicon to deliver incredibly responsive remote access, including low-latency audio, high frame rates, and support for reference color.

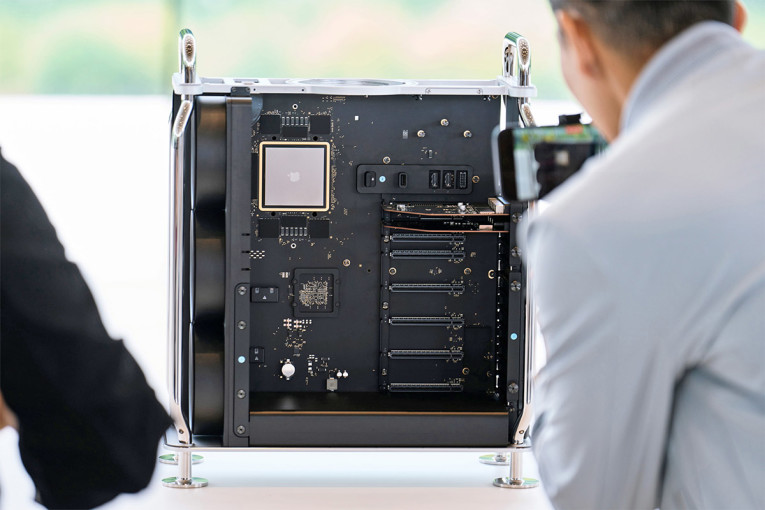

On the hardware front, Apple basically listened to its users and delivered updates to Mac Studio and Mac Pro, boosting the already impressive specifications of those professional machines and completing the transition to Apple Silicon. Mac Studio and Mac Pro deliver breakthrough performance and productivity, and support powerful gaming features like MetalFX Upscaling to help accelerate graphics performance in games.

Available in June 2023, Mac Studio can now be ordered with the new M2 Max and M2 Ultra processors, while the Mac Pro also receives M2 Ultra, combining Apple's most powerful chip with the versatility of PCIe expansion. The 24-core CPU of M2 Ultra consists of 16 next-generation high-performance cores and eight next-generation high-efficiency cores. The M2 Ultra chip integrates a 32-core Neural Engine, delivering 31.6 trillion operations per second, and is able to train massive machine learning workloads in a single system that the most powerful discrete GPUs can't even process.

The modular Mac Pro is now up to 3x faster than the previous-generation Intel-based model and features up to 192GB of unified memory. As Apple highlights, every Mac Pro has the performance of not just one, but seven built-in Afterburner cards. The powerful media engine has twice the capabilities of M2 Max, further accelerating video processing. It has dedicated, hardware-enabled H.264, HEVC, and ProRes encode and decode. The display engine supports up to six Pro Display XDRs, driving more than 100 million pixels.
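The "more than 100 million pixels" figure is easy to check, assuming all six displays run at the Pro Display XDR's native 6016 x 3384 resolution:

$$
6 \times (6016 \times 3384) = 6 \times 20{,}358{,}144 = 122{,}148{,}864 \approx 122 \text{ million pixels}
$$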

In the presentation, Apple showed a Mac Studio processing 22 streams of 8K ProRes video in real time, and Apple says the new Mac Pro can ingest 24 4K camera feeds and encode them to ProRes in real time, all on a single machine. Obviously, the Mac Pro needs to be equipped with six PCIe gen 4 video I/O cards, which it supports. All the new Apple machines also come with Bluetooth 5.3, Wi-Fi 6E, and multiple Thunderbolt/USB 4 ports. As Apple first did with the MacBooks, the headphone jacks can now detect high-impedance headphones and adapt their output accordingly.

Apple also announced an update to AirPods Pro (2nd generation), which will receive powerful new features through a free firmware update, including Adaptive Audio, Personalized Volume, and Conversation Awareness. These software updates will also arrive in fall 2023, when the entire AirPods lineup gains new and improved features to make calls and Automatic Switching between source devices even more seamless.

With Adaptive Audio, the AirPods Pro (2nd generation) become easier to use across environments and interactions. When they were introduced, the AirPods Pro's advanced Active Noise Cancellation (ANC) and Transparency features established an efficiency threshold that other audio manufacturers have struggled to emulate, let alone match. The reason is that most manufacturers have focused on active noise cancellation and have not evolved their sound passthrough features, or the integration and interaction between both modes, in the same way. That combination enables the AirPods Pro (2nd generation) to offer a balanced mix of environmental noise reduction and comfortable everyday listening.

The AirPods Pro can also detect and attenuate extremely loud noises for hearing protection, even in Transparency mode. The announced updates take that approach to the next level with Adaptive Audio, a new listening mode that dynamically blends Transparency mode and ANC based on the conditions of the user's environment to deliver the best experience in the moment. Leveraging the built-in H2 chip, the new listening mode tailors noise control as users move between the environments and interactions that constantly change throughout the day.
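Apple has not published how Adaptive Audio decides the mix. The sketch below is only a hypothetical illustration of the general idea of dynamically blending two modes: a measured ambient level is mapped to an ANC weight, smoothed over time so transitions are gradual, and used to crossfade between the noise-cancelled and Transparency paths. The 50 dB and 80 dB thresholds, the smoothing factor, and every name here are our own assumptions.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveBlender:
    """Hypothetical ANC/Transparency crossfader (not Apple's algorithm)."""
    quiet_db: float = 50.0   # at or below this level: full Transparency (assumed)
    loud_db: float = 80.0    # at or above this level: full ANC (assumed)
    smoothing: float = 0.1   # 0..1, fraction of the gap closed per update
    anc_weight: float = 0.0  # current smoothed weight, starts in Transparency

    def target_weight(self, ambient_db: float) -> float:
        """Linearly map the ambient level onto an ANC weight in [0, 1]."""
        span = self.loud_db - self.quiet_db
        w = (ambient_db - self.quiet_db) / span
        return max(0.0, min(1.0, w))

    def update(self, ambient_db: float) -> float:
        """Move the weight one step toward the target (exponential smoothing)."""
        target = self.target_weight(ambient_db)
        self.anc_weight += self.smoothing * (target - self.anc_weight)
        return self.anc_weight

    def mix(self, anc_sample: float, transparency_sample: float) -> float:
        """Crossfade one sample from the two processing paths."""
        w = self.anc_weight
        return w * anc_sample + (1.0 - w) * transparency_sample


if __name__ == "__main__":
    blender = AdaptiveBlender()
    # Walking from a quiet office (45 dB) onto a busy street (85 dB).
    for level in [45.0] * 3 + [85.0] * 10:
        w = blender.update(level)
        print(f"ambient {level:.0f} dB -> ANC weight {w:.2f}")
```

The smoothing step is the design choice that matters most in this sketch: without it, the listening mode would jump audibly every time the ambient level crossed a threshold. A real system would likely weigh more than loudness alone.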

Additionally, moving between Apple devices with AirPods gets even easier with updates to Automatic Switching. Unlike Bluetooth multipoint, which most other brands have only now started to implement with Bluetooth 5 source and sink devices, Apple has always supported audio sharing between two sink devices (two AirPods users listening to the same stream from one iPhone, for example) and switching between a call on the iPhone and music on the Mac. By leveraging peer-to-peer AirPlay in combination with Bluetooth, connections between a user's Apple devices are significantly faster and more reliable, making it more seamless to move from a favorite podcast on iPhone to a work call on a Mac.

For added convenience, using AirPods on calls is enhanced with a new Mute or Unmute feature across AirPods Pro (1st and 2nd generations), AirPods (3rd generation), and AirPods Max. Users can press the stem (or the Digital Crown on AirPods Max) to quickly mute or unmute themselves, so multitasking is effortless. The AirPods developer beta is available now for Apple Developer Program members at developer.apple.com.

This article was originally published in The Audio Voice newsletter, (#425), June 8, 2023.