Patterns of work and life have changed a lot over the last decades, especially the way we communicate. Digital real-time communication services had become essential working tools for meetings with remote participants long before the coronavirus emerged. The majority of these services, including most telephony today, are based on the Internet Protocol (IP). In particular, Voice-over-IP (VoIP) applications continue to develop in their scope and popularity, bringing flexible and highly cost-effective communications to both business and personal users.

Evolution of Audio Quality in Communication

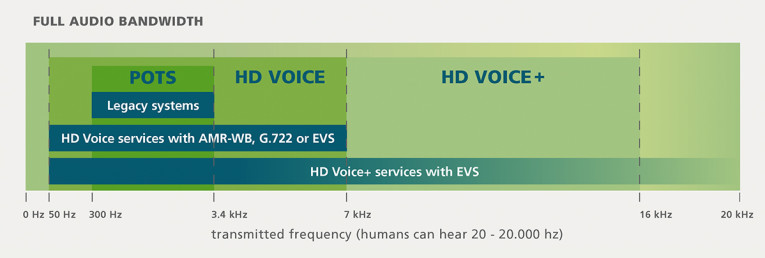

Let us first take a look at the origin of the teleconference: phone calls. For the longest time, the vast majority sounded quite muffled compared to other sources of audio. This was due to plain old telephone systems (POTS) providing only narrowband (NB) audio signals, meaning an audio bandwidth of only up to 3.4 kHz. This standard, which was set in the first half of the 20th century, did not take into account high-pitched voices of women and children, who were consequently difficult to understand on the phone.

At such a narrow bandwidth, it is also difficult to distinguish the consonants f and s, for example. And ambient sounds may even be perceived as noise, affecting moods and the feeling of transparent communication — making long conversations exhausting and not very productive.

The so-called HD Voice services, introduced in the 2000s, then raised audio bandwidth to around 7 kHz, resulting in wideband (WB) transmission. However, while the speech codecs used in HD Voice services provide perceptibly better quality compared to legacy calls, they still transmit just less than half of the fully audible spectrum. Considering the capability of the human auditory system, higher frequencies of 16 kHz and more — relevant for high-fidelity sound — are still missing. In short, most phone services are simply deleting a huge part of the audible spectrum, which decreases speech intelligibility and speaker recognition, and requires additional listening effort in everyday telephony.

When it comes to bit rates, the codecs used in landline telephone services (G.711 and G.722) provide either NB or WB quality and both operate at 64 kbit/s. In contrast, the codecs used for NB and WB services in the mobile world (AMR and AMR-WB) both usually operate at bit rates around 12 kbit/s. Some mobile networks allow even higher rates for AMR-WB (e.g., 23.85 kbit/s), although the quality improvement compared to the default rates is rather limited. These communications codecs are highly optimized for speech signals and, as a consequence, their capability for coding other signals (e.g., music) is inadequate.
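To make these bit rates more tangible, the following minimal sketch converts a codec bit rate into the payload carried by one packet. It assumes 20 ms of audio per packet, which is a common choice but not something stated above; the AMR mode of 12.2 kbit/s and the AMR-WB mode of 12.65 kbit/s are picked as examples of rates "around 12 kbit/s". The function name is hypothetical, and RTP/UDP/IP headers are deliberately ignored.

```python
def payload_bytes_per_packet(bit_rate_bps: int, packet_ms: int = 20) -> float:
    """Codec payload in bytes for one packet, excluding all protocol headers.

    packet_ms = 20 is an assumption for illustration, not a value from the article.
    """
    bits = bit_rate_bps * packet_ms / 1000  # bits produced during one packet interval
    return bits / 8


# Example rates: landline codecs at 64 kbit/s, mobile codecs near 12 kbit/s,
# and the higher AMR-WB rate of 23.85 kbit/s mentioned in the text.
example_rates_bps = {
    "G.711 / G.722": 64000,
    "AMR (12.2 kbit/s mode)": 12200,
    "AMR-WB (12.65 kbit/s mode)": 12650,
    "AMR-WB (23.85 kbit/s mode)": 23850,
}

for name, rate in example_rates_bps.items():
    print(f"{name}: {payload_bytes_per_packet(rate):.3f} bytes per 20 ms")
```

Under these assumptions, the landline codecs need roughly five times the payload of the default mobile rates for the same stretch of speech.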

Also, signal delays used to lead to involuntary interruptions, impacting natural conversation flow. These delays, also called latencies, should ideally be kept to a maximum of 150 ms to 200 ms end-to-end. The end-to-end delay of a VoIP call accumulates across several processing steps and components, such as acoustic echo cancellation, noise suppression, automatic gain control, routers, mixing of audio signals in conference calls, transcoding at network boundaries, jitter buffer, and speech/audio coding. As it is very important to maintain a low total latency, every component of the communication chain must use its share of this budget sparingly.
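The idea of a shared latency budget can be sketched as a simple sum. In the example below, every per-component value is a hypothetical placeholder chosen for illustration, not a measurement; only the 150 ms and 200 ms limits come from the text. The function name is likewise an assumption.

```python
def latency_headroom(components_ms: dict, limit_ms: int) -> tuple:
    """Return (total delay, remaining headroom) for an end-to-end chain.

    A negative headroom means the chain exceeds the limit.
    """
    total = sum(components_ms.values())
    return total, limit_ms - total


# Hypothetical per-component delays in milliseconds (illustrative only).
chain_ms = {
    "echo cancellation, noise suppression, gain control": 20,
    "speech/audio coding": 32,
    "packetization": 20,
    "network transit (routers)": 40,
    "jitter buffer": 40,
    "playout": 10,
}

for limit in (150, 200):
    total, headroom = latency_headroom(chain_ms, limit)
    print(f"limit {limit} ms: total {total} ms, headroom {headroom} ms")
```

With these placeholder numbers the chain already overshoots the stricter limit, which illustrates why a few milliseconds saved in any single component matter.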

To enable service providers to shake off the limitations of legacy services, HD Voice services were enhanced (e.g., “HDVoice+” for mobile telephony) to include the quality levels of super-wideband (SWB) and full-band (FB). While SWB represents an audio spectrum of up to 16 kHz, FB contains all frequency components up to 20 kHz. These new services offer an unsurpassed level of quality, resulting in calls that sound as clear as talking to someone in the same room, or listening to high-quality digital audio.

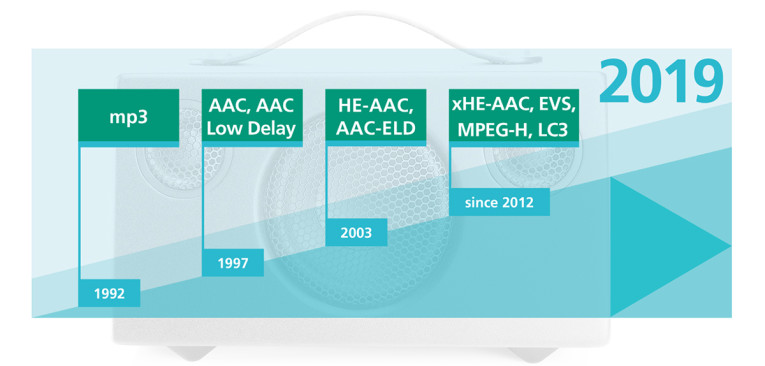

This is achieved by using hybrid solutions uniting the worlds of audio and speech coding. One such hybrid is the latest 3GPP communication codec, Enhanced Voice Services (EVS), specifically designed to improve mobile phone calls in managed networks. Another is the Low Complexity Communication Codec/-Plus (LC3/LC3plus), which brings EVS audio quality to wireless accessories and landline phones. There are also optimized audio codecs such as Enhanced Low Delay AAC (AAC-ELD), tailored for over-the-top (OTT) voice services.

AAC-ELD, EVS, and LC3/LC3plus: Designed for a "Same-Room Feeling"

AAC-ELD is the communication codec that was specifically designed to meet the high demands of all-IP-based video conferencing and telepresence applications in terms of quality and latency. Natively supported in the mobile operating systems iOS and Android, AAC-ELD is based on the highly successful AAC audio codec, which has been in use in the Apple iTunes music store since 2003 and is deployed in billions of devices today.

In contrast to common speech codecs, AAC-ELD extends the application area from clean voice to a broad variety of source material, including speech, singing, music, and ambient sounds. It also delivers high audio quality at a wide range of bit rates (down to 24 kbit/s) and sampling rates up to 48 kHz. It is optimized for a low algorithmic delay, which is essential for natural real-time communication, and can handle mono, stereo, and multi-channel signals — all with latencies as low as 15 ms. Even lower delays of 7.5 ms at a 48 kHz sampling rate can be achieved by the low delay mode.

With the introduction of Long-Term Evolution (LTE), the fourth generation of mobile network standards, cellular phone networks switched to IP-based transmission. LTE is based on the GSM and UMTS standards, offering an all-IP architecture and low latencies. With the communication codec EVS, the international standardization organization for mobile telephony 3GPP and the GSMA have established a highly efficient audio codec solution for VoLTE services.

EVS overcomes three major quality problems in mobile and landline telephone systems: limited audio bandwidth; poor performance on non-speech signals; and, most importantly, poor performance in the case of bad reception and overloaded networks. Combining state-of-the-art speech and audio compression technologies, EVS enables unprecedented audio quality for speech, music, and mixed content. The codec offers a wide range of bit rates from 5.9 kbit/s to 128 kbit/s, allowing service providers to optimize network capacity and call quality as desired for their services.

Bit rates for NB and WB start at 5.9 kbit/s, while SWB audio quality is supported from 9.6 kbit/s on. This significantly improves the audio quality over legacy codecs, also at popular mobile bit rates such as 13.2 kbit/s and 24.4 kbit/s. Combined with the codec’s low algorithmic delay (32 ms), EVS-to-EVS conversations enable users to enjoy a “same-room feeling.”

Another advantage is the codec’s backward compatibility to AMR-WB, which is enabled by an interoperability mode: It allows seamless switching between VoLTE and circuit-switched networks when network conditions warrant a transition.

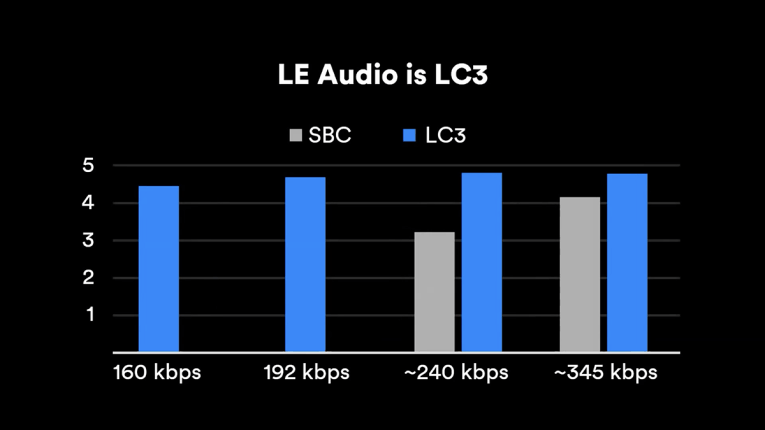

The LC3 codec and its superset LC3plus bring EVS’s SWB audio quality to Bluetooth Low Energy (BLE) Audio-based accessories, VoIP services, and Digital Enhanced Cordless Telecommunications (DECT) phones, thus enabling users of these devices to share in the feeling that the conversation is really taking place face to face. The codec meets the requirements of wireless communication platforms and accessories in terms of complexity, and it operates at low latency with a low memory footprint.

LC3 is currently being standardized by the Bluetooth SIG and will be the audio codec for upcoming BLE profiles. LC3plus was standardized in 2019 as ETSI TS 103 634 and is included in the 2019 DECT standard, enabling DECT phones to deliver not only SWB, but also enhanced WB audio quality. At the same time, it doubles the number of telephones per base station and improves the coverage range at the edge of the cell as well as in environments with poor reception, thanks to its robustness.

Increasing Robustness for Seamless Conversations

Transmission losses can occur in all kinds of network communications. Communication chains consisting of many different technologies are particularly prone to them and as a result, the speech packet loss rates (PLR) will add up and become clearly audible. Thus, it is essential to ensure the robustness of every component in the end-to-end chain.

However, the requirements for error concealment and network adaptation rise with higher-quality audio connections: numerous challenges such as network delay jitter (when packets undergo a variable delay as they traverse the network) or packet loss have to be overcome to achieve a flawless audio conference. Packet loss issues in particular have an unmistakably negative effect on speech intelligibility. State-of-the-art audio codec technology is able to improve the quality and resilience of voice calls via mobile networks, OTT services, and VoIP and DECT devices.

Fraunhofer’s communication audio codecs are equipped with overlapping frames and error concealment, which make it possible to use frame insertion/deletion in order to adjust the playout time to varying network delays. The AAC-ELD codec handles frame loss, in which the IP packet is discarded by the network and thus never delivered to the receiver, through loss detection based on Real-time Transport Protocol (RTP) sequence numbers and subsequent error concealment. Thanks to the overlapping structure of audio frames in AAC-ELD, it is possible to maintain good audio quality despite packet loss.
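Loss detection from RTP sequence numbers can be sketched in a few lines. RTP sequence numbers are 16-bit counters that wrap around from 65535 to 0, so gaps have to be computed modulo 65536. The function below is a simplified illustration with a hypothetical name, not the detection logic of any particular codec or library: it treats duplicates and late packets as "ignore" and does not retract a loss once a late packet finally arrives.

```python
def missing_sequence_numbers(received: list) -> list:
    """List the RTP sequence numbers skipped in a stream of received packets.

    Each reported number marks a frame the decoder would have to conceal.
    """
    missing = []
    prev = None
    for seq in received:
        if prev is None:
            prev = seq
            continue
        delta = (seq - prev) & 0xFFFF  # forward distance modulo 2**16
        if delta == 0 or delta >= 0x8000:
            continue  # duplicate or late/reordered packet: ignored in this sketch
        # Every number strictly between prev and seq never arrived in order.
        missing.extend((prev + k) & 0xFFFF for k in range(1, delta))
        prev = seq
    return missing


# One loss across the wrap-around, then two consecutive losses.
print(missing_sequence_numbers([65533, 65534, 0, 1, 4]))
```

A real receiver would combine such a detector with the jitter buffer, so that a packet is only declared lost once its playout deadline has passed.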

As for network delay jitter, the recommended method is adaptive playout: an algorithm estimates the jitter on the network and adapts the size of the dejitter buffer in order to minimize buffering delay and late loss.
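One way to picture adaptive playout is to pair a running jitter estimate with a buffer target derived from it. The sketch below uses the interarrival jitter estimator defined for RTP in RFC 3550 (a smoothed average with gain 1/16). The mapping from jitter to buffer size, including the multiplier and the floor and ceiling values, is an assumption made for illustration and is not taken from any of the codecs discussed here.

```python
def update_jitter(jitter_ms, prev_send_ms, prev_recv_ms, send_ms, recv_ms):
    """One step of the RFC 3550 interarrival jitter estimate (all times in ms)."""
    # Difference between spacing at the receiver and spacing at the sender.
    d = (recv_ms - prev_recv_ms) - (send_ms - prev_send_ms)
    return jitter_ms + (abs(d) - jitter_ms) / 16.0


def target_buffer_ms(jitter_ms, multiplier=3.0, floor_ms=20.0, ceil_ms=200.0):
    """Hypothetical policy: buffer a multiple of the jitter, within fixed bounds."""
    return min(ceil_ms, max(floor_ms, multiplier * jitter_ms))


# Packets sent every 20 ms; arrival times drift because of network jitter.
packets = [(0, 100), (20, 136), (40, 141), (60, 190), (80, 200)]

jitter = 0.0
for (prev_send, prev_recv), (send, recv) in zip(packets, packets[1:]):
    jitter = update_jitter(jitter, prev_send, prev_recv, send, recv)
    print(f"jitter {jitter:.2f} ms -> buffer target {target_buffer_ms(jitter):.1f} ms")
```

A larger multiplier reduces late loss at the cost of added delay, which is the trade-off the adaptive approach tries to balance.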

EVS comprises several unique concealment techniques, including a channel-aware mode (CAM) that uses partial redundancy, making this codec robust and enabling it to minimize the audibility of errors and quickly recover from lost packets. EVS’s highly efficient jitter buffer management, which compensates for transmission delay jitter, as well as its minimized inter-frame dependencies, which avoid error propagation and provide for a fast recovery after lost packets, deliver a high-quality communication experience. EVS can tolerate a PLR of 6% while achieving the same quality as its forerunner AMR-WB at 1% PLR.

LC3plus implemented on VoIP or DECT handsets or Bluetooth headsets can minimize disruptions during phone calls, too, as the codec exploits techniques related to EVS and is extremely robust against voice packet loss and bit errors. In overloaded VoIP channels, the redundant transmission of LC3plus voice data verifiably ensures more stable phone calls. For DECT telephones, the LC3plus inherent tools for forward error correction were specially adapted to exploit typical characteristics of DECT links: LC3plus’s channel coding permits the transmission of LC3plus payloads over heavily distorted DECT channels — enabling phone calls without interruption, even if the handset is far away from the base station.

Conclusion

The audio quality of teleconferences should be as high as possible so as to make the conversation feel as natural as having all participants present in the same room. Audio codecs that provide high quality for speech and audio content alike, as well as enhanced robustness, allow service providers and hardware manufacturers to make this “same-room feeling” a reality. Tailored to specific use cases (e.g., phone calls on mobile devices or wireless handsets or accessories, or IP-based teleconferencing applications on a computer or cell phone), such codecs make video conferences more productive, and for instance, let team members sing “Happy Birthday” to colleagues in a crystal clear manner, even during times of social distancing.

This article was originally published in audioXpress, October 2020.

About the Author

Manfred Lutzky heads the Audio for Communications department at Fraunhofer IIS. He received his Dipl.-Ing. degree in electrical engineering from Friedrich Alexander University Erlangen in 1997. Afterward, he joined Fraunhofer IIS, where he was initially responsible for efficient implementations of MP3 and AAC multimedia codecs. Today, he is responsible for the development of state-of-the-art speech and audio communication codecs for mobile networks, such as AAC-ELD, LC3/LC3plus, EVS and IVAS, as well as Voice Quality Enhancement technology for voice-controlled smart devices. He is also participating in ETSI NG-DECT and GSMA NG standardization.